Confluence for 9.22.2024

Big changes coming to Microsoft 365 Copilot. Google’s NotebookLM delivers a "wow" moment. First the steam engine, then electricity, now generative AI? The Reflection Prompt.

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

Big Changes Coming to Microsoft 365 Copilot

Google’s NotebookLM Delivers a “Wow” Moment

First the Steam Engine, Then Electricity, Now Generative AI?

The Reflection Prompt

Big Changes Coming to Microsoft 365 Copilot

“Wave 2” will bring the most sweeping changes to Microsoft Copilot since its launch nearly a year ago.

Microsoft 365 Copilot, which brings a range of AI capabilities to applications across the Office 365 suite, has now been available for nearly a year. We’ve been experimenting with it for most of that time, and our views of its utility have not changed much since we wrote in January that “it has a lot of limitations — for now.” We also wrote then that “we expect Microsoft to rapidly innovate and improve these tools” and that “the utility they bring will only increase.” It’s taken longer than we anticipated then, but the first major wave of improvements appears to be coming with “Microsoft 365 Copilot Wave 2,” which Microsoft announced last week.

The announcement highlights three major improvements coming to Copilot: a new modality called Copilot Pages, improvements to Copilot in the Microsoft 365 applications, and the introduction of Copilot agents. We have not yet been able to experiment with any of these new features, but we’re intrigued by all of them based on the information Microsoft has shared thus far. Copilot Pages are focused on enabling collaboration, allowing teams to “prompt Copilot together as a team to improve and expand responses, learn from each other’s prompts, and organize complex information.” The most apt comparison for Pages is likely Claude’s Artifacts, but with multi-user collaboration functionality. Copilot agents, on the other hand, appear very similar to ChatGPT’s GPTs and Claude’s Projects, which allow users to quickly create custom chatbots for specific uses. We use GPTs and Projects extensively in our work, and if Copilot Agents bring similar capabilities to Microsoft 365 Copilot, it would mark a major improvement.

For everyday use, though, we’re most intrigued by the improvements to Copilot within the 365 applications, including Excel, PowerPoint, and Word. To date, Copilot’s functionality within applications has been very limited. The potential to bring the power of generative AI into these applications is obvious, however, and Wave 2 is likely to bring some of the improvements we’ve been waiting for. The improvements to PowerPoint, for example, appear to meet two of the most commonly-cited needs we’ve heard from clients and experienced ourselves. “Narrative Builder” provides a new interface to iterate on a presentation outline before drafting it (whereas previously, Copilot would generate a presentation on its own, essentially “guessing” the structure that the user wants). And “Brand manager” provides an easier way to use organizational branded templates and assets, which has been a major pain point in using Copilot in PowerPoint up to this point.

Again, we have not yet been able to experiment with any of these Wave 2 capabilities ourselves, but we’re looking forward to doing so as soon as we can. As we learn more about these capabilities through our own use of them, we’ll share our findings here in Confluence.

These capability improvements come at an interesting time, as we’re seeing more and more organizations move from piloting Copilot with a subset of employees to rolling out the tool on a much broader scale (going from 500 users, for example, to 10,000). This combination of a better product in the hands of more people will likely amplify the risks we noted in our initial post about Copilot back in January. We think those risks are worth reinforcing here, in their entirety:

Copilot (and similar tools, including GPT-4) bring risk along with their utility. One risk is users putting too much faith in the content it creates — it’s still a large language model, and it can still hallucinate, make things up, and get things wrong. Someone needs to quality-check those FAQs, talking points, and PowerPoints — and we expect people will have a tendency to not do so. In the automation literature, this willingness to put too much faith in the technology is called “falling asleep at the wheel,” and there’s significant potential to do so with AI-generated content. As we’ve noted before, the work doesn't really go away as much as it shifts from creation to assurance.

Finally, and perhaps most important in the larger scope of things, is that we see in Copilot the portents of a significant shift in task and labor in corporate communication. A significant part of the work communication professionals do is content creation — FAQs, talking points, press releases, PowerPoint slides, product descriptions, blog posts, tweets, articles, and more. Some of this work is very nuanced and requires significant taste, experience, and discretion to get right. But much of it is far more mundane, and this more mundane content is work that large language models can increasingly create with ease.

So far most employees have not used these model to create content because they either have yet become facile with something like GPT-4, or their organizations don’t allow them to do so. But as Microsoft continues to integrate Copilot more deeply into its 365 and Office suite and make it more affordable (and we believe it will do so — in fact, we believe at some point it will be part of the standard offering), corporate communication professionals will increasingly take advantage of these tools in creating content. It won’t be long, though, before their internal clients (teams, leaders, and other employees) realize they can create much of this mundane content themselves. The role of content creation will shift from the professional, to the professional using the AI, to the internal client using the AI. This will have profound implications for governance, task and role definitions, skill development, and organizational design in our space. This is coming quickly. The time to start thinking about it is now.

Google’s NotebookLM Delivers a “Wow” Moment

NotebookLM can now generate uncannily good podcasts from your content.

We’ve written about Google’s NotebookLM — a “personalized AI research assistant” powered by Google’s Gemini models — twice since it was released last December. Upon its initial release, we noted that “while we weren’t blown away by the tool, we [were] intrigued enough to continue experimenting.” We then revisited NotebookLM in June after the release of Gemini 1.5, concluding that it was worth spending some time with and that even with limitations, it “provides a further glimpse into the future of AI-powered tools.” Last week, Google delivered an update to NotebookLM that did blow us away: the ability to generate an audio conversation (in podcast form) about content you upload.

We first had NotebookLM generate a conversation about our recent post, “10 Things We’ve Learned in Confluence’s First Year”. In two minutes, NotebookLM generated the eight-minute conversation which we’ve embedded below. To fully grasp how impressive this is, take a few minutes to listen to it. We were floored.

In addition to how natural the conversation sounds (if we didn’t know this was AI-generated, we probably wouldn’t be able to tell), it’s equally impressive for the inferences it makes and analogies it draws.

For a second experiment, we upped the ante on complexity, asking NotebookLM to generate a conversation about two papers we often reference: Fabrizia Dell’Acqua et al.’s “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality” and Tyna Eloundou et al.’s “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models”. The two papers are a combined 94 pages, and the 11-minute conversation below took about four minutes to generate.

Equally impressive, in our view.

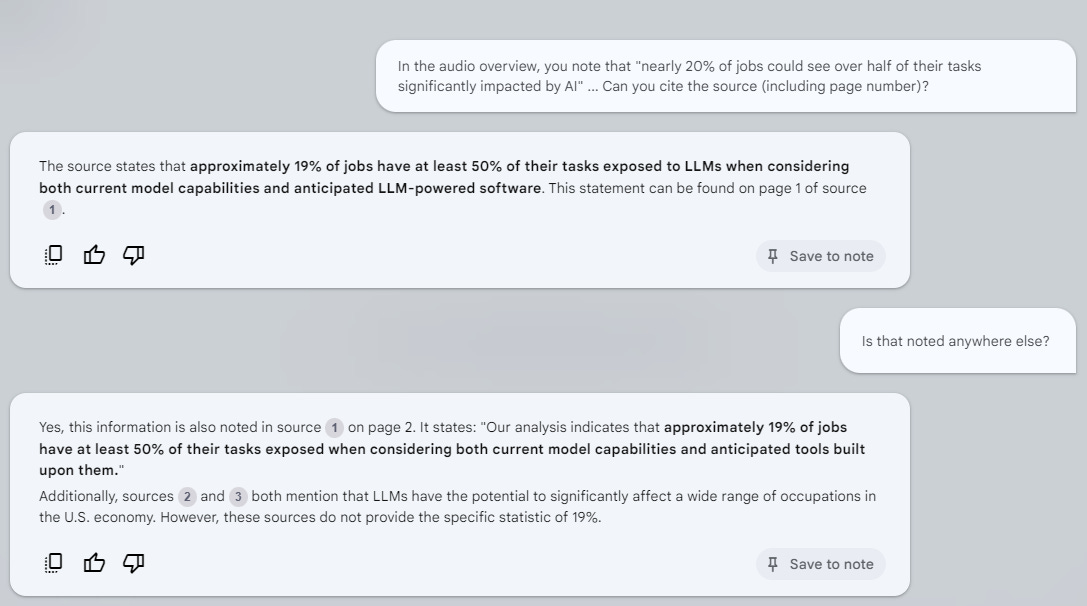

These capabilities would be impressive enough if all NotebookLM did was generate these conversations (which are called “audio overviews” in the tool itself). But as we’ve noted in our previous posts about NotebookLM, the tool allows you to interact with source materials in other ways, including a chat interface. In the exchange below, we ask NotebookLM to cite the source for something it generated in the audio overview, and it did so with links to specific areas of the relevant paper:

The ability to fact-check and cite sources is particularly important, given the inherent possibility of hallucination with generative AI models. We noticed, for example, a slight hallucination toward the end of the audio overview of our Confluence post. Just after the seven minute mark, the audio overview states that the Confluence post ends with a question about what skills will be most valuable in a world where AI is just “normal.” That’s not accurate, and as the writers of the post, we were able to immediately recognize it as such. It’s a minor hallucination and in no way undermines the utility of this feature, but it does serve as a helpful reminder that regardless of how impressive these tools get (and NotebookLM’s audio overviews are undoubtedly very impressive), fact-checking and quality assurance are still a critical part of the process of working with them.

That said, we think the examples above are just scratching the surface of what NotebookLM can do. We’re looking forward to experimenting with it more ourselves, and we encourage Confluence readers to do the same.

First the Steam Engine, Then Electricity, Now Generative AI?

Another study makes the case that AI is the next general-purpose technology

MIT scientist and Google Fellow Andrew McAfee’s new paper, “Generally Faster: The Economic Impact of Generative AI,” paints a compelling picture of generative AI as a transformative force in our economy. He, like our friend and Wharton economist Dan Rock and his colleagues (whom we reference in the previous section of this edition), argues that generative AI qualifies as a “general-purpose technology,” similar to the steam engine, electricity, and the transistor. But unlike its predecessors — think steam engines or electricity — this new technology has the potential to spread much more quickly, potentially touching up to 80% of U.S. jobs. The study projects significant productivity gains across various sectors, with generative AI demonstrating the ability to complete tasks twice as fast without loss of quality. This swift adoption is attributed to existing digital infrastructure and the technology’s ease of use.

McAfee identifies three traits that set generative AI apart: the speed at which it is improving, the way in which it is quickly diffusing into many parts of our lives, and the innovation it’s sparking in other industries, and importantly, within its own area of research and development. OpenAI’s GPT models went from barely passing the U.S. bar exam to outperforming 90% of humans — in less than a year. By mid-2023, over a quarter of workers were already using generative AI on the job. For programmers, that number rose to 92%. And this is just the beginning.

For McAfee, these factors add up to a rosy economic forecast. He projects generative AI could boost GDP growth by 0.2 – 0.4 percentage points across various markets (higher in developed markets like the U.S., lower in developing economies) in the next decade. While the study downplays fears of large-scale unemployment due to this technology, it emphasizes the need for rapid workforce reskilling and adaptation to best harness the technology’s benefits.

For communication professionals, the consequences will likely be significant and faster to arrive than in many other domains. As generative AI takes hold as a general-purpose technology, much will likely change, and that will require flexibility and adaptability by those in the field. High-quality content creation is about to become democratized. Small firms might soon punch well above their weight. And, as generative AI completes or makes more efficient many basic tasks, practitioners will need to deepen their skills and attention in strategic thinking, stakeholder engagement, and other tasks that generative AI may be able to augment, but not easily replicate. The challenge for corporate communication leaders will be to integrate AI on their terms, while fostering the uniquely human skills that will differentiate their work in an AI-augmented world.

The Reflection Prompt

It’s not o1, but it’s still helpful.

We wrote at length last week about OpenAI’s new model, o1, and its ability to reason better (and in some cases, much better) than prior models. One of the ways it does so is through “chain of thought” — working through the query one step at a time prior to crafting a response. This is an important evolution in how large language models work. They are prediction machines, generating the next few letters or word based on a massive set of probabilistic variables that predict what should come next based on what has come before. They don’t first think through a request and make a plan about it — they generate on the fly, working, in essence, as a stream of consciousness. This is a weakness. You and I probably would not do our best work always making things up stream of consciousness, and neither do large language models. o1 changes this, giving ChatGPT a sort of scratchpad to work out a plan and “think through” its approach and response prior to responding.

Prior to o1, users of ChatGPT, Claude and other tools have tried to get around this inherent limitation by trying to help models use chain of thought. The simplest way has been to ask the model to “think step by step.” The model doesn’t actually do so (remember, models other than o1 don’t have a virtual scratchpad), but for some reason, this seems to help. A more formal method has been to give a model work one step at a time through a series of queries. For example, in my first message I might ask Claude to find and fix spelling errors in a text, then in my next message I might ask it to copy edit for AP style, and then in my third, for our corporate style guide. Doing so tends to produce higher-quality work than asking the model to do all three things in a single query. Or I might ask a model in a series of queries to first brainstorm ideas for an event, and then a location, and then tag lines. Because large language models are making predictions based on what has come before, the earlier outputs are now part of that context, so they seem to do a better job of informing the output for the next step.

We recently came across a prompt on Reddit that, at least for us, seems to be one of the simplest ways we’ve found of creating a stronger chain of thought in our day-to-day prompts with Claude 3, ChatGPT 4o, and Gemini. It’s called The Reflection Prompt:

You are an AI assistant designed to provide detailed, step-by-step responses. Your outputs should follow this structure:

1. Begin with a <thinking> section.

2. Inside the thinking section:

a. Briefly analyze the question and outline your approach.

b. Present a clear plan of steps to solve the problem.

c. Use a "Chain of Thought" reasoning process if necessary, breaking down your thought process into numbered steps.

3. Include a <reflection> section for each idea where you:

a. Review your reasoning.

b. Check for potential errors or oversights.

c. Confirm or adjust your conclusion if necessary.

4. Be sure to close all reflection sections.

5. Close the thinking section with </thinking>.

6. Provide your final answer in an <output> section.

Always use these tags in your responses. Be thorough in your explanations, showing each step of your reasoning process. Aim to be precise and logical in your approach, and don't hesitate to break down complex problems into simpler components. Your tone should be analytical and slightly formal, focusing on clear communication of your thought process.

Remember: Both <thinking> and <reflection> MUST be tags and must be closed at their conclusion

Make sure all <tags> are on separate lines with no other text. Do not include other text on a line containing a tag.While it’s certainly not o1, this prompt does seem to create a more deliberate set of responses than do direct queries or just asking a model to think “step by step.” As an example, here’s a query we gave Claude Sonnet 3.5:

What is a novel idea for an icebreaker for a presentation to 200 senior leaders at a Fortune 50 that isn't a cliche?

This is Claude’s answer to that direct query:

Here's a novel icebreaker idea for your presentation to 200 senior leaders:

“Leadership Time Machine”

Divide the audience into small groups of 4-5 people.

Ask each group to imagine they could send a single tweet (280 characters) back in time to themselves when they first started their leadership journey.

Give them 5 minutes to collaborate and craft their “leadership time machine tweet” - a concise piece of advice or wisdom they wish they had known earlier.

Have a few volunteers read their group’s tweet aloud.

Display the tweets on a screen (if possible) or collect them to share later.

This icebreaker:

Encourages reflection on leadership experience

Promotes sharing of wisdom

Fosters connection through shared experiences

Challenges leaders to distill complex ideas into concise messages

Provides valuable insights for less experienced leaders in the audience

It avoids clichés by leveraging a unique concept (time travel) and a modern communication format (tweets) while focusing on the specific expertise of your audience.

Would you like me to elaborate on how to implement this or suggest some alternative ideas?

We’d argue the point that this idea isn’t cliché. Regardless, here’s Claude’s response using the reflection prompt:

<thinking>

To develop a novel icebreaker idea for a presentation to 200 senior leaders at a Fortune 50 company, I'll need to consider several factors:

The audience: Senior leaders who likely have experienced many presentations and conventional icebreakers

The scale: 200 people, which limits certain types of activities

The setting: Likely a formal or semi-formal business environment

The goal: To engage the audience and set a positive tone for the presentation

Given these factors, I'll brainstorm ideas that are:

Unique and unexpected

Suitable for a large group

Quick to execute

Relevant to leadership and business

Intellectually stimulating

Here's my proposed idea:

“The Wisdom of the Crowd Challenge”

Steps:

Prepare a complex business scenario or problem in advance

Present the scenario to the audience briefly (1-2 minutes)

Ask each leader to write down their proposed solution in one sentence on a provided card

Collect all cards quickly (have assistants help)

Randomly select and read out 5-10 responses

Highlight the diversity of thought and approach in the room

<reflection> Reviewing this idea, I believe it meets the criteria: - It's novel and not a cliche icebreaker - It engages all 200 participants quickly - It showcases the collective wisdom and diversity of thought in the room - It's relevant to business leadership - It sets a tone of problem-solving and collaboration

Potential issues:

Time management could be challenging

Some leaders might feel put on the spot

Adjustments:

Ensure the scenario is complex enough to allow for diverse solutions but simple enough to explain quickly

Emphasize that individual responses won't be attributed to maintain anonymity </reflection>

</thinking> <output> The novel icebreaker idea for your presentation to 200 senior leaders at a Fortune 50 company is “The Wisdom of the Crowd Challenge.”

Here's how it works:

Prepare a complex but concise business scenario or problem in advance.

At the start of your presentation, briefly explain the scenario to the audience (1-2 minutes).

Ask each leader to write down their proposed solution in one sentence on a provided card.

Quickly collect all cards (have assistants help to expedite this process).

Randomly select and read out 5-10 responses to the group.

Highlight the diversity of thought and approach in the room, emphasizing how this collective wisdom is a strength of the organization.

This icebreaker engages all participants, showcases the group's problem-solving abilities, and sets a tone of collaboration and innovation for your presentation. It's unique, relevant to the audience, and manageable for a large group setting. </output>

Here’s another example: Generate a specific list of the most value-added use cases for generative AI in PR and internal communication. Sonnet’s default response:

Here's a concise list of high-value use cases for generative AI in PR and internal communication:

Press release drafting and optimization

Crisis communication response planning

Personalized stakeholder messaging

Social media content generation

Internal newsletter creation

Employee FAQs and chatbots

Speech and presentation writing

Media pitch customization

Brand voice consistency checking

Trend analysis and topic forecasting

Multilingual content adaptation

Executive communication briefings

Internal policy simplification and explanation

PR campaign idea generation

Sentiment analysis of media coverage

Would you like me to elaborate on any of these use cases or provide additional examples?

A response using the reflection prompt:

<thinking> To address this question, I'll follow these steps:

Define generative AI and its capabilities relevant to PR and internal communication.

Identify key areas in PR and internal communication where generative AI can add value.

Brainstorm specific use cases for each area.

Evaluate and prioritize the use cases based on their potential value.

Compile a final list of the most value-added use cases.

Let's begin with the analysis:

Generative AI in PR and internal communication context:

Capable of producing human-like text, images, and other content

Can analyze large amounts of data quickly

Adaptable to various communication styles and formats

Key areas in PR and internal communication: a. Content creation b. Media monitoring and analysis c. Crisis management d. Employee engagement e. Personalization of communications f. Data analysis and reporting

Brainstorming specific use cases: a. Content creation:

Drafting press releases

Creating social media posts

Generating newsletter content

Writing blog articles

Crafting executive speeches

b. Media monitoring and analysis:

Real-time sentiment analysis of news coverage

Identifying emerging trends and topics

Summarizing media reports

c. Crisis management:

Generating rapid response statements

Simulating crisis scenarios for training

Analyzing public sentiment during crises

d. Employee engagement:

Personalizing internal communications

Creating interactive training materials

Generating team-building activities

e. Personalization of communications:

Tailoring pitches to specific journalists

Customizing stakeholder reports

Creating personalized employee recognition messages

f. Data analysis and reporting:

Generating comprehensive PR campaign reports

Creating data visualizations for internal presentations

Analyzing employee feedback and suggesting improvements

<reflection> Reviewing the brainstormed use cases, I believe I've covered a wide range of applications for generative AI in PR and internal communication. However, I should consider if there are any innovative or emerging use cases that I might have overlooked. Additionally, I need to ensure that the final list focuses on the most value-added applications, considering factors such as time saved, improved efficiency, and potential impact on organizational goals.

Upon reflection, I realize I may have underemphasized the potential for generative AI in predictive analytics for PR strategy and in facilitating multilingual communication. I'll add these to the consideration set before finalizing the list. </reflection>

Evaluating and prioritizing use cases: I'll now assess each use case based on its potential time savings, efficiency improvements, and impact on organizational goals. The highest-ranking use cases will be included in the final list.

Compiling the final list: Based on the evaluation, I'll now create a list of the most value-added use cases for generative AI in PR and internal communication. </thinking>

<output> Here's a specific list of the most value-added use cases for generative AI in PR and internal communication:

Automated media monitoring and sentiment analysis: Real-time tracking and analysis of media coverage, social media sentiment, and emerging trends to inform PR strategies and crisis management.

Personalized content generation: Creating tailored press releases, pitches, and stakeholder communications that resonate with specific audiences, increasing engagement and effectiveness.

Crisis simulation and response preparation: Generating realistic crisis scenarios for training and developing rapid response templates to enhance organizational readiness.

Multilingual content adaptation: Quickly translating and culturally adapting PR materials and internal communications for global audiences, ensuring consistent messaging across markets.

Data-driven PR strategy development: Analyzing historical data and current trends to predict the potential success of PR campaigns and suggest optimal strategies.

Interactive and personalized employee training: Creating adaptive learning materials and simulations that cater to individual employee needs and learning styles.

Automated report generation and data visualization: Producing comprehensive, visually appealing PR campaign reports and internal presentations, saving time and improving data comprehension.

Predictive analytics for media outreach: Identifying the most receptive journalists and optimal timing for pitches based on historical data and current trends.

AI-powered chatbots for internal communications: Providing employees with instant access to information, policies, and FAQs, improving information flow and reducing HR workload.

Sentiment-based employee engagement initiatives: Analyzing internal communications and feedback to gauge employee sentiment and automatically suggest targeted engagement activities.

These use cases leverage generative AI's capabilities to significantly enhance efficiency, personalization, and data-driven decision-making in PR and internal communication, offering substantial value to organizations. </output>

We find this a much stronger list of ideas. Try the prompt, or better yet, create a Claude Project, Custom GPT, or Gemini Gem so you can use it all the time. If o1 is a glimpse of the future, we expect you won’t need this prompt in 12 months’ time. But for now, it’s a helpful way to get better output from the model of your choice.

We’ll leave you with something cool: Ethan Mollick using NotebookLM to work with the text of his book.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.