Confluence Focus: Your Employees Are Probably Using GPT-4 At Work Already

The genie might already be out of the bottle.

In several client organizations this week, we had a conversation that went a bit like this:

Client: “Nobody here has access to [insert name of AI tool here]. It’s not supported.”

Us: “Do your employees have Edge browsers on their PCs or the ability to get to www.bing.com through another browser?”

Client: “Sure, Edge is our default browser” or “Sure, I can get to Bing on my Mac.”

Us: “You … and everyone else … probably have access to GPT-4 right now.”

Client: “Say again?”

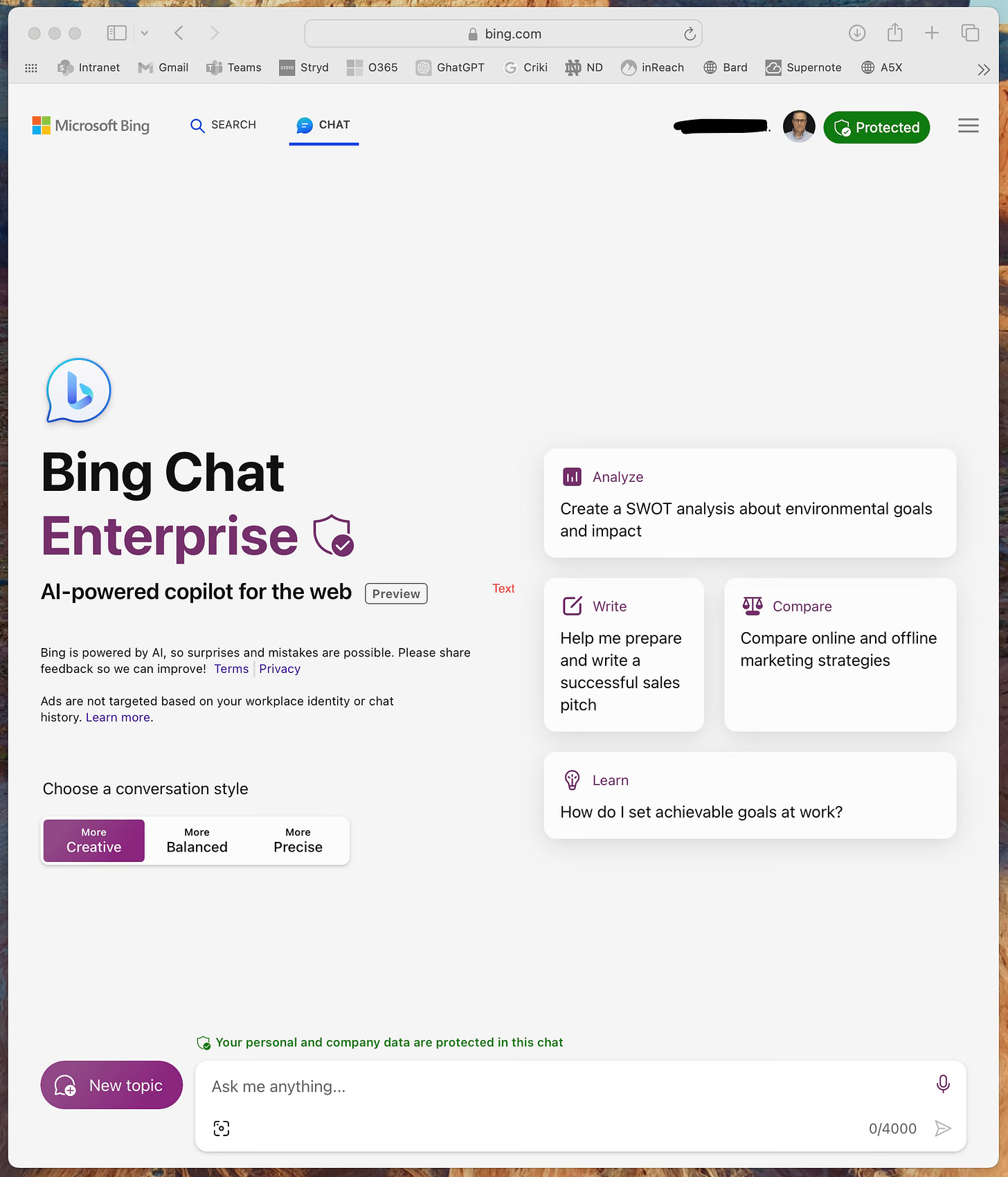

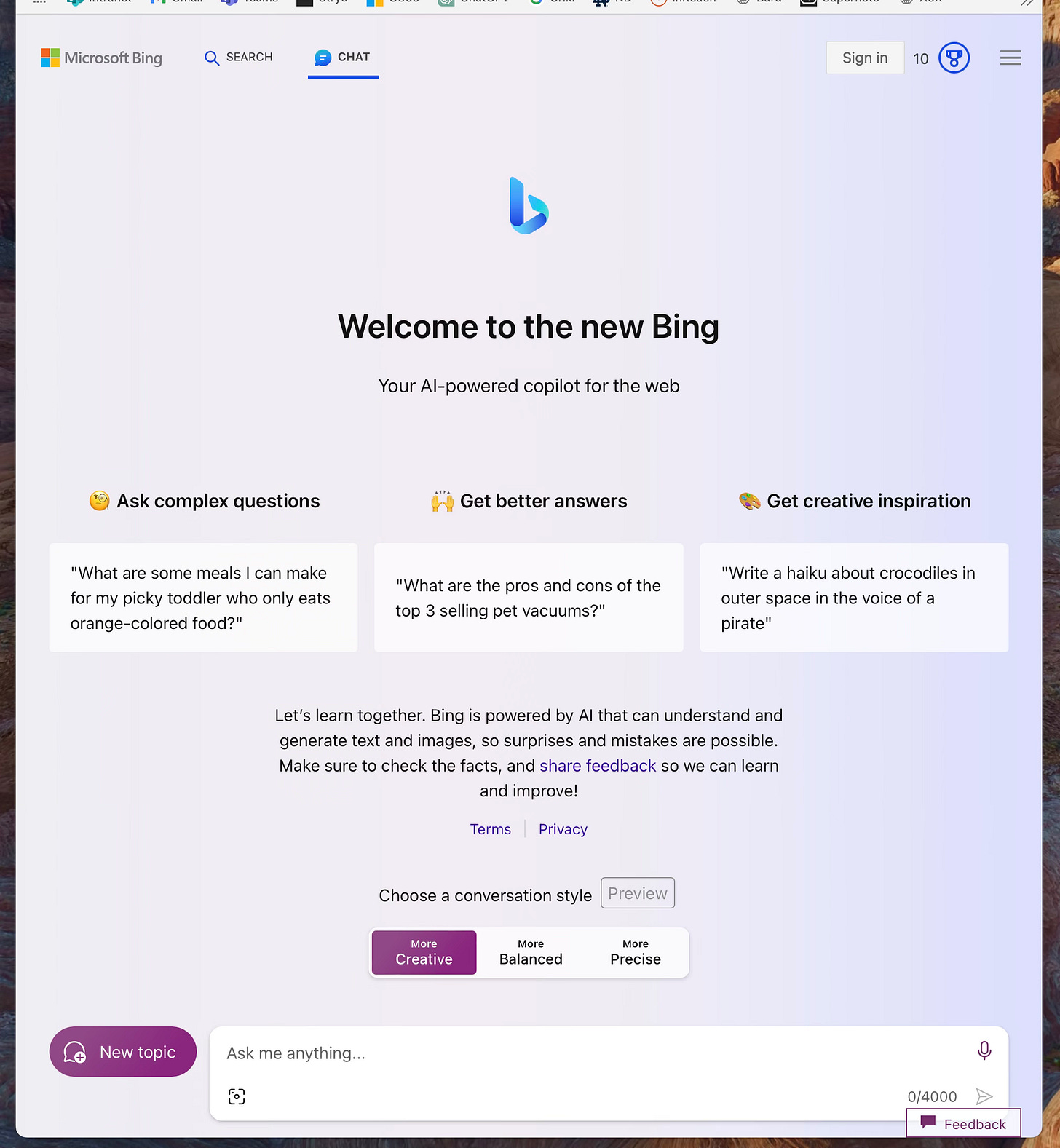

That access comes via the “Chat” feature at Bing.com, or via the Copilot feature in Edge. Both tools use GPT-4, let users upload images, and can generate images using DALL-E (although DALL-E is sometimes unavailable due to high demand). The chat feature at Bing.com, shown above, gives users direct access to all of these capabilities.

So far, we haven’t found many clients who are aware of this, which suggests that many employees aren’t aware of it either, at least not yet. But some certainly are, and we can presume that the number of people in organizations who realize it will only grow. This presents at least two challenges.

The first involves privacy and security. At least in our conversations this week, it was clear that the version of Bing Chat our clients could access was the consumer version, not the enterprise version. This is an important distinction, as the enterprise version offers significantly greater data protection and security than the consumer version. You can read more about those differences here, but the short version is that Microsoft can see and keep whatever you exchange with the consumer version, while the enterprise version offers data security as part of the enterprise Office 365 suite. This means that whoever in your organization happens to be using Bing Chat may well be doing so without security and privacy measures in place. You can tell the difference visually: when the user is logged into a corporate Microsoft account, the enterprise version displays “Protected” next to the user profile at top-right and identifies itself as the enterprise edition as soon as it loads. The consumer version does neither.

The second challenge is use without governance. Very few of our clients, regardless of size, have any principles or guidelines in place for the use of generative AI, in many instances because they simply don’t believe people have access to such tools inside the corporate technology environment. But at least in organizations where people can reach Bing via a browser, they in fact do, which means employees may be using these tools to draft communications or assist with other work with no principles of use in place.

What to do about this? Our first recommendation is to check for yourself and see what’s available in your environment. See if you, or others, can access the primary generative AI tools on the market via your corporate computers and devices: GPT-4 (OpenAI), Claude 2 (Anthropic), Bard (Google), and Bing Chat (Microsoft). If so (and you will very likely be able to access Bing), determine whether you have access to the consumer or enterprise version. If the answer is “consumer,” it’s time to have a conversation with IT to see if the organization can activate the enterprise edition and the security and privacy measures it brings with it. Regardless of the answer to that question, if employees DO broadly have access to at least one of these tools from their company devices, it’s time to work with IT and your governance / compliance structure to put a set of generative AI principles in place, and to ensure those principles are very clear about how much use is sanctioned (which may well be none) and any other key expectations you hope people will live by. At the very least, stating them will put your organization in a position to hold people accountable should they use these tools in a manner not in the interest of the organization or the communication function. But acting as if this use isn’t happening probably won’t help.

We’ve had principles in place at our firm since earlier this year, and we’ve included them below as an example. They work for us, and may not work for others, but they might be helpful. We intend to revisit them every six months, and perhaps more often based on the pace of change.

Generative AI Principles for CRA | Admired Leadership®

Generative AI has the potential to transform how we work and add value to members of the firm and our clients. Rather than be subject to the advancement of this technology, we will seek to understand it and embrace its use on our terms and in ways consistent with our values. Primary among these are relationships, excellence, making our own people and our clients better, and fulfillment in our work.

Overall, always be deliberate and thoughtful in the use of generative AI and do so with the intention of bringing greater value to clients. When doing so:

Honor client confidentiality agreements and master services agreements, and redact all information that can identify organizations and individuals, including those in our firm, prior to submitting queries to an AI tool unless that tool offers full data security (e.g., it is part of our Microsoft Office suite).

Submit no CRA | Admired Leadership proprietary content to AI tools unless the tool offers full data security (e.g., it is part of our Microsoft Office suite).

Fact-check all AI output (names, dates, citations, etc.).

Subject all AI-generated content to human quality review and revision prior to distribution outside the firm.

Prioritize excellence over efficiency. While these tools can and should save us time, we should not overestimate the time they will save nor trade doing it well for doing it fast.

Prioritize skill development over efficiency. Where AI capabilities overlap with tasks that are part of a colleague’s developmental path (proofreading, editing, thematic analysis, etc.), we should avoid using AI and keep those opportunities as part of our developmental method, as they teach core skills beyond the task itself (attention to detail, writing skill, attention to context, etc.).

Once a colleague has reached a high level of proficiency in a specific skill, consider using AI on overlapping tasks as a means of freeing that talent to add higher levels of value.

When we do use generative AI, we will disclose it. Examples of such disclosures:

“Generative AI was an editorial and proofreading resource in the creation of this content. All use protected client confidentiality.”

“Generative AI was a resource in creating the initial drafts of the following content in this document: [LIST]. All content has benefited from human review and revision, and all use protected client confidentiality.”

“Generative AI was used as a secondary research resource in identifying literature for this document. All use protected client confidentiality.”

“Generative AI was used as a qualitative analytic tool for this work. All output was subjected to human review and verification, and all use protected client confidentiality.”

Again, these principles work for us and reflect our value system. They may or may not be right for other organizations, but they offer at least one example. The larger point is this: some, many, or perhaps even all of the employees in your organization probably have immediate access to generative AI technology today, tools they can use in all manner of ways to become more efficient and effective. But those tools also come with significant second-order consequences for security, privacy, talent, and accuracy. As leaders and as communication professionals, it’s likely time to start the conversation on their principled use.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.