Confluence for 10.5.25

For your radar: context rot. Anthropic's guide to context engineering. When expertise matters most. GPT-5 Pro on OpenAI's new Sora social app.

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI, leadership, and corporate communication:

For Your Radar: Context Rot

Anthropic’s Guide to Context Engineering

When Expertise Matters Most

GPT-5 Pro on OpenAI’s New Sora Social App

For Your Radar: Context Rot

More information is not always better when working with large language models (LLMs).

Anyone who has spent a lot of time using a large language model has noticed that if a chat gets too long, or if you give a chatbot a very long document to reason with, the output quality starts to degrade. This degradation can take many forms, from confused responses, to factual errors and hallucinations, to “losing the thread” on things said earlier in the chat, and more. This seems counterintuitive, as one would think giving a model more information would make it more accurate, not less.

Yet that is what happens, and a recent Chroma Technical Report documents it. Its lead summary:

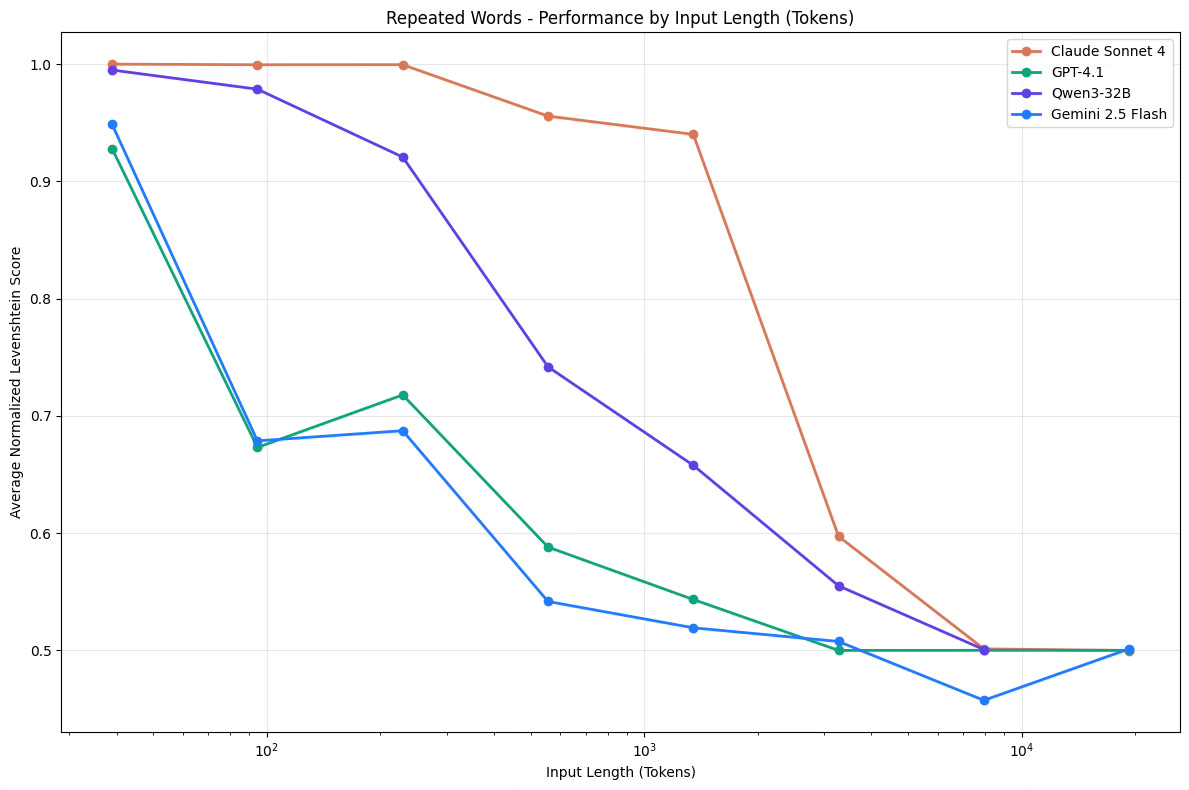

Large Language Models (LLMs) are typically presumed to process context uniformly—that is, the model should handle the 10,000th token just as reliably as the 100th. However, in practice, this assumption does not hold. We observe that model performance varies significantly as input length changes, even on simple tasks.

In this report, we evaluate 18 LLMs, including the state-of-the-art GPT-4.1, Claude 4, Gemini 2.5, and Qwen3 models. Our results reveal that models do not use their context uniformly; instead, their performance grows increasingly unreliable as input length grows.

When we first noticed this in models a few years ago, our team started calling it “drift,” because the output quality would seem to drift as the chat continued. This tendency now has a name in the literature, thanks to that paper: “Context Rot.” It happens because LLMs have a limited working memory, called the “context window,” which is the amount of data the model can reference when crafting a response. Everything the model knows about your current conversation is in the context window: the messages so far, the system prompt that governs its behavior, any specific instructions you’ve provided, any documents you’ve given it, any web search results, and so on. The more information you have in the context window, the more a model can do. But as the amount of data in the context window increases, you reach a point where model ability actually decreases. And past that point, as context keeps growing, the problem compounds.¹ This graphic from the Chroma paper illustrates the degradation:
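To make that list concrete, here is a minimal sketch that treats the context window as a running tally of its parts. It assumes the common rule of thumb of about four characters per token for English text (real tokenizers vary by model), and the `ContextWindow` class and the 200,000-token limit are illustrative, not any vendor’s API.

```python
from dataclasses import dataclass, field

# Rule of thumb (an assumption, not a real tokenizer): about 4 characters per
# token for English text. Actual counts vary by model and language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate tokens by rounding len(text) / 4 up to a whole token."""
    return -(-len(text) // CHARS_PER_TOKEN)


@dataclass
class ContextWindow:
    """Everything the model can 'see' for its next response, as labeled parts."""

    limit_tokens: int
    parts: list = field(default_factory=list)  # (label, text) pairs

    def add(self, label: str, text: str) -> None:
        self.parts.append((label, text))

    def used_tokens(self) -> int:
        return sum(estimate_tokens(text) for _, text in self.parts)

    def fraction_used(self) -> float:
        return self.used_tokens() / self.limit_tokens


window = ContextWindow(limit_tokens=200_000)
window.add("system prompt", "You are a helpful assistant for our communications team.")
window.add("instructions", "Keep answers under 200 words.")
window.add("document", "x" * 400_000)  # stand-in for a long attached report
window.add("chat so far", "User: ... Assistant: ...")
print(f"{window.used_tokens():,} tokens, {window.fraction_used():.0%} of the window")
```

The point of the tally is that every part competes for the same space: one long attachment can fill half the window before the conversation has really started.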

Context windows used to be quite limited, just several thousand tokens (a token is a chunk of text averaging about four characters of English). Now they are quite large, with some models offering context windows of 200,000 or even 1,000,000 tokens. A million tokens is roughly 2,500-3,000 typewritten pages. It’s a massive amount of data, and context windows will likely keep growing: great news for building powerful, contextually intelligent generative AI tools and agents, but bad news for ensuring quality.
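The page figure is easy to check with two rules of thumb, both assumptions rather than measured values: about 0.75 English words per token (the flip side of roughly four characters per token) and 250 to 300 words per double-spaced typewritten page.

```latex
\begin{aligned}
1{,}000{,}000\ \text{tokens} \times 0.75\ \tfrac{\text{words}}{\text{token}} &= 750{,}000\ \text{words} \\
\frac{750{,}000\ \text{words}}{300\ \text{words/page}} &= 2{,}500\ \text{pages} \\
\frac{750{,}000\ \text{words}}{250\ \text{words/page}} &= 3{,}000\ \text{pages}
\end{aligned}
```

By the same arithmetic, a 200,000-token window holds about 150,000 words, or roughly 500 to 600 pages.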

So we have a dilemma. What do we make of it? First, engineers are working on this problem, and their work is leading to what is becoming a new and important area in generative AI development and use: context engineering. This is the art and science of curating what does and does not make it into the context window as a chat grows, and as a model uses tools that do things like read your calendar, run statistics, and more. More about that in the piece below. But in terms of your own practice, we suggest doing what we do:

Keep your chats short. How short? As short as they need to be, and we keep our senses tuned for context rot as the chat grows. Long chats of 20 or 30 turns would be quite rare for us. If a chat is getting that long, we ask the model to summarize it in a memo to itself, which we then paste into the beginning of a new chat.
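Here is a minimal sketch of the summarize-and-restart habit, assuming a hypothetical `ask_model` function that stands in for whatever chat API or app you use. The 20-turn threshold mirrors our rule of thumb and is not a limit imposed by any model.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" or "assistant", "content": "..."}

# Hypothetical stand-in for your chat API: takes the messages, returns reply text.
AskModel = Callable[[List[Message]], str]

MAX_USER_TURNS = 20  # our rough threshold, not a model limit

HANDOFF_REQUEST = (
    "Summarize this conversation as a memo to yourself: the goal, key decisions, "
    "open questions, and any constraints or preferences I have given you. Be concise."
)


def user_turns(messages: List[Message]) -> int:
    """Count how many times the user has spoken."""
    return sum(1 for m in messages if m["role"] == "user")


def maybe_restart(messages: List[Message], ask_model: AskModel,
                  max_turns: int = MAX_USER_TURNS) -> List[Message]:
    """Return the chat unchanged while it is short; once it reaches max_turns,
    ask the model for a memo to itself and start a fresh chat seeded with it."""
    if user_turns(messages) < max_turns:
        return messages
    memo = ask_model(messages + [{"role": "user", "content": HANDOFF_REQUEST}])
    return [{"role": "user", "content": "Memo from our previous chat:\n\n" + memo}]
```

The fresh chat carries only the memo, so everything the old conversation accumulated is left behind except what the model judged worth keeping.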

Use your LLM’s “reasoning” model. Not only are reasoning models more capable, they work in steps, completing work that they then set aside, writing into the context window only what they need to know for the next step. It’s a form of memory self-curation.

If you want to provide examples for the model to follow, only provide a few and make them great. Examples upon examples don’t help, and in fact can make things worse. Better yet, provide a set of standards for the model to follow that describe great output, and then just one or two examples of those standards well-applied.
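One way to apply this is to build prompts that lead with standards and cap the number of examples. The sketch below uses a hypothetical `build_prompt` helper; the two-example cap reflects our advice, not a documented model limit.

```python
from typing import List


def build_prompt(task: str, standards: List[str], examples: List[str],
                 max_examples: int = 2) -> str:
    """Lead with the standards that describe great output, then add at most
    max_examples examples showing those standards applied well."""
    lines = [task, "", "Standards for great output:"]
    lines += [f"- {s}" for s in standards]
    for i, example in enumerate(examples[:max_examples], start=1):
        lines += ["", f"Example {i} (the standards applied well):", example]
    return "\n".join(lines)


prompt = build_prompt(
    "Draft a short note from our CEO announcing the new office.",
    ["Plain language, no jargon", "Under 200 words", "Warm but direct tone"],
    ["<best past note>", "<second-best past note>", "<a third we will leave out>"],
)
print(prompt)
```

Putting the standards first gives the model the general principle; the examples only illustrate it, so adding more of them buys little.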

If you’re using an LLM to chat with reference materials, use a separate agent (or project) for each reference source. A project with a single benefits handbook will give more reliable answers than one juggling benefits, IT policies, and safety procedures simultaneously. The more documents an LLM needs to consider, the more likely it is to conflate information, miss details, or provide generic responses. Smaller, focused document sets keep the model oriented and deliver more precise answers.
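A minimal sketch of the routing idea follows, assuming hypothetical document sets and a deliberately simple keyword match (a production setup might use a classifier or ask a model to choose). Each question goes to one focused set instead of a single agent holding everything.

```python
from typing import Optional

# Hypothetical document sets; in practice each would back its own project or agent.
DOCUMENT_SETS = {
    "benefits": {"keywords": {"401k", "dental", "vacation", "pto", "insurance"},
                 "docs": ["benefits_handbook.pdf"]},
    "it": {"keywords": {"password", "laptop", "vpn", "software"},
           "docs": ["it_policies.pdf"]},
    "safety": {"keywords": {"fire", "evacuation", "injury", "ppe"},
               "docs": ["safety_procedures.pdf"]},
}


def route(question: str) -> Optional[str]:
    """Pick the document set whose keywords best match the question, or None."""
    words = {w.strip("?.,!").lower() for w in question.split()}
    scores = {name: len(words & s["keywords"]) for name, s in DOCUMENT_SETS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(route("How do I reset my VPN password?"))
```

Returning `None` when nothing matches leaves room to ask the user which topic they mean, rather than falling back to a catch-all agent with every document loaded.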

We expect engineers are going to solve context rot, just as they’re solving the initial challenge of small context windows. But it’s something to have on your radar as you work with these tools. Use the practices we describe above, and keep your eyes open for rot.

Anthropic’s Guide to Context Engineering

How to think about providing just enough context.

We’ve written before about how to provide context for LLMs and, as we note above, the practice of curating context will become more important as a way to mitigate the risk of context rot. Anthropic recently published a guide, “Effective Context Engineering for AI Agents,” which goes deeper into why context engineering matters and how to get better at it. The crux: as we ask LLMs to complete tasks beyond simple text generation (in other words, as they begin to act as real agents), we need strategies for focusing their attention on what truly matters.

The key is to strike the right balance between specificity and flexibility in your prompting. As Anthropic notes, effective prompting sits between two common failure modes: hardcoding complex, brittle logic that breaks easily and is hard to maintain, or providing vague guidance that fails to give the model concrete signals. The right balance is specific enough to guide behavior effectively, yet flexible enough to let the model apply strong heuristics when needed.

As an example of what this looks like in practice, Anthropic created a prompt for a customer support agent at a fictional bakery:

You are a customer support agent for Claude’s Bakery. You specialize in assisting customers with their orders and basic questions about the bakery. Use the tools available to you to resolve the issue efficiently and professionally.

You have access to order management systems, product catalogs, and store policies. Your goal is to resolve issues quickly when possible. Start by understanding the complete situation before proposing solutions, ask follow-up questions if you do not understand.

Response Framework:

Identify the core issue - Look beyond surface complaints to understand what the customer actually needs

Gather necessary context - Use available tools to verify order details, check inventory, or review policies before responding

Provide clear resolution - Offer concrete next steps with realistic timelines

Confirm satisfaction - Ensure the customer understands the resolution and knows how to follow up if needed

Guidelines:

When multiple solutions exist, choose the simplest one that fully addresses the issue

If a user mentions an order, check its status before suggesting next steps

When uncertain, call the human_assistance tool

For legal issues, health/allergy emergencies, or situations requiring financial adjustments beyond standard policies, call the human_assistance tool

Acknowledge frustration or urgency in the user’s tone and respond with appropriate empathy
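To show how a prompt like this plugs into an agent, here is a minimal sketch that packages it as a request body in the shape of Anthropic’s Messages API (`model`, `max_tokens`, `system`, `tools`, `messages`). The `human_assistance` tool schema, its `reason` field, and the `MODEL_NAME` placeholder are our own assumptions; the guide names the tool, but we have not reproduced any schema from it.

```python
# Abridged; paste the full bakery prompt from the guide here.
SYSTEM_PROMPT = (
    "You are a customer support agent for Claude's Bakery. You specialize in "
    "assisting customers with their orders and basic questions about the bakery. "
    "Use the tools available to you to resolve the issue efficiently and professionally.\n"
    "...\n"
    "When uncertain, call the human_assistance tool."
)

# Hypothetical schema: the guide names this tool but does not define its inputs.
HUMAN_ASSISTANCE_TOOL = {
    "name": "human_assistance",
    "description": "Escalate the conversation to a human staff member.",
    "input_schema": {
        "type": "object",
        "properties": {
            "reason": {"type": "string", "description": "Why a human is needed."},
        },
        "required": ["reason"],
    },
}


def build_request(user_message: str, model: str = "MODEL_NAME") -> dict:
    """Assemble a Messages API request body; MODEL_NAME is a placeholder."""
    return {
        "model": model,
        "max_tokens": 1024,
        "system": SYSTEM_PROMPT,
        "tools": [HUMAN_ASSISTANCE_TOOL],
        "messages": [{"role": "user", "content": user_message}],
    }


request = build_request("My cake order was supposed to arrive an hour ago.")
```

With Anthropic’s Python SDK installed, a request like this could be sent with `anthropic.Anthropic().messages.create(**request)`. Note what the code does not contain: no branching for each complaint type. The prompt and the tool description carry the guidance, and the model decides when escalation is warranted.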

What’s telling is that this resembles how you might describe a role to a new employee. Get much more prescriptive and you risk paralysis when the unexpected happens. Get more vague and you risk misalignment, like an agent who fails to escalate legal issues. The goal when crafting prompts for agentic models is direction without rigidity: enough guidance to orient the model toward your objectives while preserving the flexibility to make smart decisions along the way.

When Expertise Matters Most

Dhruv Khullar examines what happens when everyone has access to medical knowledge.

Last month, The New Yorker published an essay by Dhruv Khullar, a physician and associate professor at Weill Cornell Medical College, that asks an important question: “If A.I. Can Diagnose Patients, What Are Doctors For?” The piece is long but worth your time. Khullar writes with curiosity and skepticism in equal measure, testing tools himself rather than relying on others’ claims, and offers a thoughtful exploration of the question and its implications.

The essay opens with the story of Matthew Williams, a software engineer who spent years seeing specialists for chronic digestive issues following an initial intestinal surgery. Eight clinicians couldn’t solve his case. In 2023, Williams entered his medical history into ChatGPT, and within seconds the AI pointed to oxalate intolerance: his body could no longer process oxalate, a compound he’d never heard of. When Williams took the information to a dietitian and adjusted his diet accordingly, his symptoms quickly improved. “I have my life back,” he said.

Khullar then describes a demonstration at Harvard where researchers pitted CaBot, a custom version of OpenAI’s o3 model built for medical diagnosis, against Daniel Restrepo, an expert diagnostician. CaBot is named for Dr. Cabot, the physician who popularized clinicopathological conferences (CPCs), a century-old training method for analyzing complex cases. Working through a CPC case, both reached the correct diagnosis. Restrepo spent six weeks preparing his presentation. CaBot generated its analysis and correct diagnosis in six minutes.

The story requires some nuance, though. When Khullar tested CaBot himself, for example, the results depended entirely on how he described cases. Given the broad strokes of Williams’ case, CaBot hallucinated lab values and vital signs, arriving at the wrong diagnosis. When Khullar gave it a formal summary with medical details properly organized, though, CaBot diagnosed correctly. This pattern repeated: A doctor got the right diagnosis from ChatGPT by describing symptoms with clinical judgment about what mattered, but when Khullar described the identical case as he thought a typical patient might, the chatbot missed the diagnosis and falsely reassured him that medical attention probably wasn’t needed. The condition actually required urgent treatment, which the LLM suggested only when given the doctor’s version of the case.

The real danger isn’t that AI gets things wrong, though. It’s that doctors stop thinking for themselves. Benjamin Popokh, a medical student at UT Southwestern, described using AI after virtually every patient encounter during a rotation. One day he realized he hadn’t thought independently about a single patient all day. “I started to feel dirty presenting my thoughts to attending physicians, knowing they were actually the AI’s thoughts,” he told Khullar. “If all you do is plug symptoms into an AI, are you still a doctor, or are you just slightly better at prompting AI than your patients?” Research confirms the concern: gastroenterologists using AI to detect polyps got significantly worse at finding polyps themselves. In another study, doctors using ChatGPT performed no better than doctors working without it, yet the chatbot alone outperformed the humans.

The tool is only as good as the person using it. Khullar’s essay demonstrates this across varied examples of AI performing brilliantly in expert hands and dangerously in novice ones. The medical field’s response offers a lesson: Organizations investing in AI capabilities without investing equally in expertise development risk the worst outcome—dependency on imperfect tools without the judgment to catch errors. The gap between breakthrough and disaster with these tools isn’t in the model. It’s in the user. Expertise matters more now, not less.

GPT-5 Pro on OpenAI’s New Sora Social App

When you’re not in a hurry, GPT-5 Pro is a powerful on-demand analyst.

We often feature Claude-authored pieces in Confluence, both to summarize new research or developments and to demonstrate Claude’s evolving ability to write effectively in a particular tone or style. Today, we’re going to showcase a different model, OpenAI’s GPT-5 Pro, for a similar dual purpose: to catch our readers up on an interesting development this week (OpenAI’s release of the Sora 2 video model and the accompanying social media app) and to share an example of GPT-5 Pro’s analytical prowess.

We don’t write about GPT-5 Pro here in Confluence too often, though we’re using it increasingly for thought partnership, not writing. GPT-5 Pro’s reasoning process is intense, and it is slow (relative to other AI models and not to humans, of course), often taking 10-30 minutes to generate its output. That means it’s not the best tool for many uses where speed is paramount. Our rough heuristic for when to use GPT-5 Pro over other models is something along the lines of “I have a problem or topic I want to explore or think more deeply about, and I’m not in a hurry.” When that’s the case, GPT-5 Pro is a good option. We’ll put GPT-5 Pro to work and come back later to see what it came up with.

With all of that said, below is our prompt to GPT-5 Pro and its output, which took 16 minutes across 20 separate reasoning steps, including searching and digesting over 100 sources (seven of which it cited directly in its output). Its thinking and intelligence are most on display in the “What this likely means for AI & the media landscape” section.

Prompt: Can you get me up to speed on the Sora app that OpenAI released yesterday? Include a summary of what it is, a summary of the reaction to it, and then a section on what you think this will mean for the AI and media landscape.

GPT-5 Pro (after 16 minutes, 11 seconds of reasoning):

Here’s a fast, unbiased brief on OpenAI’s new Sora app—what it is, how people are reacting, and what it likely means for AI and media.

What Sora is (in plain terms)

A social, AI‑video app from OpenAI. Sora is a standalone iOS app built around a vertical, TikTok‑style feed where every clip is generated by OpenAI’s new Sora 2 model (video and audio). You can prompt it from text or an image to produce short, highly realistic videos. (OpenAI)

“Cameos” = putting real people into AI video. During signup you can record a short head‑turn video and voice sample to create a protected “digital likeness.” You can then drop yourself or approved friends into any generated scene; you can revoke permissions and remove videos that include your cameo. (WIRED)

Guardrails and provenance. At launch, Sora blocks sexually explicit content, graphic violence with real people, extremism, hate, self‑harm, and impersonation. It also does not support text‑to‑video of public figures, and introduces visible watermarks plus C2PA provenance metadata on downloads to signal AI origin. (OpenAI)

Availability & access. The rollout began Tuesday, Sept 30, 2025. It’s invite‑based, iOS‑only for now, and starting in the U.S. and Canada, with expansion promised. OpenAI says initial usage is free with “generous limits”; a higher‑quality “Sora 2 Pro” is available on web to ChatGPT Pro users and is “coming to the app,” with API access planned. (OpenAI)

Copyright posture (highly debated). Ahead of launch, reporting indicated OpenAI would allow copyrighted material unless rights‑holders opt out, while still blocking public‑figure generation. Expect this to be a flashpoint. (Reuters)

The reaction so far

Excitement at the tech & format

The app produces strikingly realistic short clips with synced audio and lip‑sync; the “cameo” mechanic makes it trivially easy (and fun) to star in your own AI videos. Early reviewers describe a sticky, TikTok‑like experience built entirely from synthetic media. (WIRED)

Immediate virality & scarcity

Interest surged on day one. The app shot up the App Store charts, and the limited invites triggered a (prohibited) secondary market for invite codes on eBay; OpenAI warned users not to sell codes. (Business Insider)

Safety, abuse, and policy worries

Reporters and users quickly found problematic use cases despite guardrails—e.g., inserting creators into harassing scenarios or producing realistic “body‑cam” style fakes. The Washington Post documented workarounds to impersonation limits and flagged the broader misinformation risk. (The Washington Post)

IP concerns erupted almost immediately (familiar characters appearing in feeds), with skepticism toward opt‑out policies for rights‑holders. (Reuters)

Wired’s hands‑on found moderation often works but isn’t airtight; for example, the app blocked some celebrity and franchise prompts, but allowed others (e.g., certain Pokémon). (WIRED)

Platform comparisons

Commentators are framing Sora as “TikTok for deepfakes”: an AI‑native social app with a recommendation feed, remixing, and cameos. It enters a space where Meta is testing similar AI‑video feeds (“Vibes”). (WIRED)

What this likely means for AI & the media landscape

Synthetic video goes truly mainstream. Sora moves AI video from “tool” to consumer social platform. Just as ChatGPT normalized LLM chat, Sora could normalize everyday synthetic video (with sound), accelerating creator adoption and audience tolerance for AI‑generated formats. Expect a flood of short‑form, remixable, AI‑native memes and micro‑stories. (OpenAI)

Authenticity infrastructure becomes table stakes. OpenAI’s choice to ship visible watermarks and C2PA metadata on downloads raises the bar. Platforms, newsrooms, and brands that haven’t implemented provenance checks will feel pressure to catch up. Watch for broader C2PA adoption (and inevitable cat‑and‑mouse attempts to strip signals).

IP and likeness rights are the next regulatory battleground. If opt‑out is OpenAI’s default, expect fast legal challenges and takedowns, plus new licensing frameworks for characters, worlds, and voices. Talent agencies and studios will push for consent‑by‑default, payment, and discovery tools to find and remove violations. (Reuters summarized WSJ’s reporting on the opt‑out approach.) (Reuters)

Platforms will react—hard. TikTok, YouTube, Instagram, and Snap will either:

integrate comparable cameo‑style AI video features,

strengthen AI‑content labeling/provenance, or

reduce distribution for unlabeled AI clips. This arms race will reshape recommendation systems and brand‑safety policies. (OpenAI)

Crisis comms and media literacy hit a new phase. The “liar’s dividend” gets worse when anyone can create realistic, in‑character video with your face and voice. Organizations will need AI‑video incident playbooks, rapid takedown tooling, and proactive education so employees, customers, and press know what Sora watermarks/Content Credentials look like.

Production economics shift for marketing and entertainment. With synchronized audio and improved physics/continuity, Sora 2 narrows the gap to “good enough” for ideation, animatics, and even some finished spots. Creative teams will pivot to prompting, directing, and compliance craft (e.g., designing safe cameo flows and on‑brand outputs), while legal clearances become the rate‑limiter. (OpenAI)

Policy design becomes part of product. OpenAI is publicly emphasizing a feed that’s not optimized for time‑spent, steerable personalization, and teen limits. If that holds, it could set a precedent for safety‑by‑design in AI social products (and, cynically, become a new competitive talking point). Either way, regulators will scrutinize whether stated principles match lived outcomes. (OpenAI)

Practical watch‑list (next 60–90 days)

Access & expansion: Android timeline, international rollout, and API availability (OpenAI says all are planned). (OpenAI)

Policy enforcement: Does “no public figures” hold up? How quickly do watermarks/provenance become detectable across platforms and newsrooms?

Rights‑holder pushback: Lawsuits, DMCA‑style takedowns, or new licensing programs tied to Sora content. (Reuters)

Competitor responses: Feature parity or distribution throttles from TikTok/YouTube/Instagram; Meta’s “Vibes” progression. (WIRED)

Abuse patterns: Non‑consensual cameos and harassment (especially of creators/women), political misinformation, and how fast OpenAI’s moderation + reporting workflows respond. (The Washington Post)

Bottom line

Sora isn’t just “text‑to‑video in an app.” It’s an AI‑native social platform that mainstreams deepfake‑style content with built‑in likeness tools, synchronized audio, and a recommendation feed. The upside is explosive creative capacity; the downside is an acute need for provenance, permissioning, and fast‑twitch safety operations. How OpenAI—and the rest of the ecosystem—handle IP, consent, and labeling in the next few weeks will set norms for the next few years. (OpenAI)

Sources & further reading

Additional official materials: OpenAI’s Sora 2 is here post (capabilities, rollout, pricing), Sora 2 System Card (safety & watermarking), and Feed philosophy (ranking, teen protections). (OpenAI)

We’ll leave you with something cool: OpenAI’s “Instant Checkout” feature in ChatGPT allows users to shop and make purchases directly within ChatGPT, without leaving the conversation.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

¹ The technical reason? As the number of tokens in the context window grows linearly, the computation the model’s attention mechanism performs grows quadratically, because each token is compared against the tokens that came before it. The model’s attention is spread across ever more comparisons, and the math gets harder for it to handle reliably.
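The technical note above can be made concrete. In a standard transformer with dense, causal self-attention (an assumption; many production models add optimizations we can’t see), token i is scored against every token up to and including itself, so one attention head in one layer computes n(n+1)/2 scores for n tokens. Ten times the context means roughly a hundred times the scores: quadratic growth, not exponential.

```python
def attention_scores(n_tokens: int, causal: bool = True) -> int:
    """Query-key scores one attention head computes in one layer for n_tokens.

    Causal (decoder-style) attention: token i is scored against tokens 1..i,
    so the total is 1 + 2 + ... + n = n(n+1)/2. Without the mask it is n * n.
    """
    if causal:
        return n_tokens * (n_tokens + 1) // 2
    return n_tokens * n_tokens


for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{n:>9,} tokens -> {attention_scores(n):>17,} scores per head per layer")
```

Techniques such as sliding-window or sparse attention change this picture for some models, so treat the numbers as an illustration of the scaling rather than a cost estimate for any particular product.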