Confluence for 12.1.24

Five reasons you should be using Claude. Anthropic talks with Lex Fridman. A guide to "good enough prompting." A battle over "open" AI could reshape our tools.

Hello, welcome to December, and welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

Five Reasons You Should Be Using Claude

Anthropic Talks with Lex Fridman

A Guide to “Good Enough Prompting”

A Battle Over “Open” AI Could Reshape Our Tools

Five Reasons You Should Be Using Claude

For us it’s the best large language model going, and it just keeps getting better.

Today there are many large language models (LLMs) on the market, but four have the most mind and market share: Gemini from Google, Llama from Meta (which Meta calls open source and offers for free; more on what this means in today’s fourth piece), ChatGPT from OpenAI, and Claude from Anthropic. Most people we come across know about ChatGPT but little or nothing about the others. This is a shame, because while each model has specific strengths, we think Claude from Anthropic is the best LLM going, and it’s the one we use most in our work and life. There are three versions of Claude: Haiku (fastest), Sonnet (smartest), and Opus (most conversational and often the best writer). Regardless of the model, Claude just keeps getting better, and there are five reasons we feel you should be using Claude, too:

Profile Preferences: Claude now lets you add a set of custom preferences that apply to every chat you have with the model. These could include preferences for language, tone, approach, or context you want Claude to know about you. Unlike ChatGPT’s custom instructions, Claude’s preferences seem to accommodate a large amount of text: one of us has given Claude a 1,500-word preference text with instructions for how they’d like Claude to respond, as well as detail on their role at work, personal life, values, goals, and more. Having Claude “know” a set of things about you brings a whole new level of context to your interactions with the model, and it’s a feature we’ve long awaited from Anthropic.

Styles: Also a recent addition, Styles let you select Claude’s conversational style. There are four default options: Normal, Concise, Explanatory, and Formal. You can also create custom styles based on text you provide, which is a fast and helpful way to have Claude write in specific voices. For example, we gave Claude a Confluence post, and it created a “Confluence” style for us consisting of these auto-generated instructions:

Write technical and philosophical analysis with an engaging, conversational tone that balances academic rigor with accessible storytelling. Blend personal observations, expert perspectives, and nuanced exploration of complex technological concepts. Use clear, descriptive language and maintain an inquisitive, open-minded approach to emerging technologies. The user has included the following content examples. Emulate these examples when appropriate:

<userExamples>

[Examples removed for space]

</userExamples>

As an example, we gave Claude 3.5 Sonnet this prompt: “Write two paragraphs for Confluence about how, although there was much anticipation on X / Twitter, yesterday ChatGPT's two-year-birthday came and went without a new release from OpenAI. People were speculating that Sam Altman would use the date to launch a new, powerful model, but that didn’t happen. That said, we think the next model is around the corner.” Using the Confluence style, it produced this output on the first try:

The much-anticipated second anniversary of ChatGPT passed quietly yesterday, confounding expectations across the AI community that OpenAI might mark the occasion with a significant announcement. While X (formerly Twitter) buzzed with speculation that Sam Altman would unveil a new, more powerful model — perhaps even the rumored GPT-5 — December 1st came and went with little fanfare from the company that sparked the generative AI revolution. This relative silence feels particularly noteworthy given OpenAI’s penchant for dramatic releases and Altman’s recent return after the brief leadership crisis.

Yet this absence of announcement may speak more to timing than capability. Multiple signals suggest OpenAI is preparing for a major release in the near term: the recent expansion of GPT-4 Turbo’s context window, improvements to the ChatGPT interface, and careful scaling of their infrastructure all point to groundwork being laid. While the symbolic two-year mark may have passed without ceremony, the reality is that AI development follows its own schedule, driven more by technical milestones and strategic considerations than anniversary dates. The next evolution in large language models appears to be approaching — it just wasn’t quite ready for yesterday’s spotlight.

That’s a great first draft. You can read more from Anthropic on how to use Styles here.

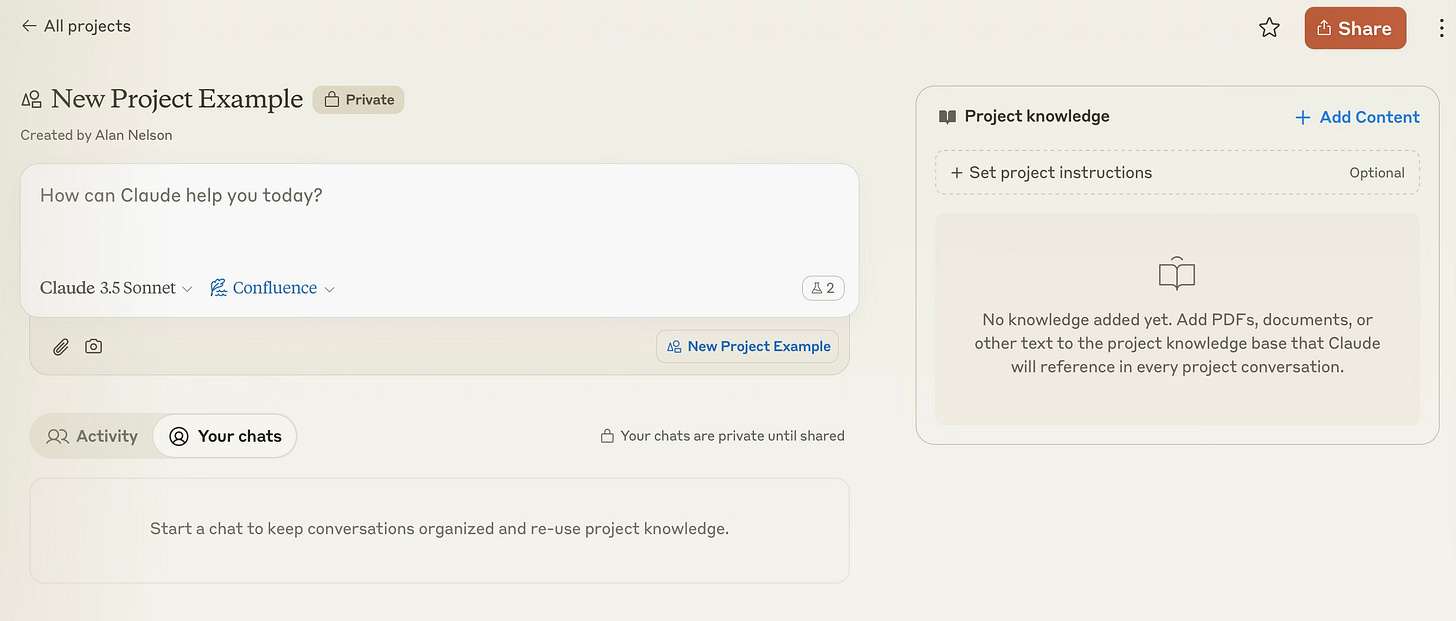

Projects: More powerful than Styles are Projects, which allow you to create specific use cases for Claude that you can reuse whenever you have the need. These are akin to custom GPTs from OpenAI — think of them as “mini-Claudes” that you have dedicated to specific purposes. Anthropic describes them this way:

A key benefit of projects is the ability to provide context for your chats with Claude. You can upload relevant documents, text, code, or other files to a project’s knowledge base, which Claude will use to better understand the context and background for your individual chats within that project.

In addition, you can define project instructions for each project to further tailor Claude's responses. For example, instructing Claude to use a more formal tone or answer questions from the perspective of a specific role or industry.

For any Project you create, you can provide custom instructions you want Claude to follow every time you use the Project, along with background material (as uploaded files) you want Claude to reference. You could, for example, create a proofreading project that follows a specific process for proofreading the text you provide and that references the AP Stylebook and The Elements of Style (which you can find as PDFs online and upload), along with your organization’s in-house style guide. Or you could, as one of us has, create a Project that generates novel ideas and Midjourney prompts for the images we create each week for Confluence.

The set of Projects you can create is nearly limitless. This past weekend, one of us created a “Used Car Assessor” project, uploading PDF printouts of the used cars they were considering, with custom instructions for Claude to assume the role of an experienced automotive assessor skilled at analyzing potential used car purchases. Whenever they began considering a new set of options, they simply added those PDFs to the Project. With this Project, Claude was immensely helpful in comparing the options and narrowing them down. You can read more from Anthropic on how to create and use Projects here.

Writing Ability: For months we’ve noted that the Opus model of Claude was the best writer, and that Sonnet was the better thinker. But increasingly we find that Sonnet is a surprising and, dare we say, gifted writer, especially if you offer it a bit of editorial prompting. As an example, one of us asked Sonnet: “As a great writer, give me a short story about wilderness.” It created a draft, which we followed with: “Make it Alaska. Make it better.” It revised, which we followed with: “Revise to have no cliches.” This is the result, unedited by us:

The Edge of Everything

Sarah counted breaths between thunderclaps. One-two-three-four. The storm moved backwards across the valley, defying the wind, as if the Brooks Range made its own weather. Her map had dissolved three days ago, but she’d stopped needing it. Navigation was simpler here: walk toward whatever made you uncomfortable.

She’d expected Alaska to be bigger. Instead, it compressed space around her like a lens, bringing impossible things into focus. A wolverine threading caribou vertebrae onto a dwarf birch. Ice needles growing sideways from rocks, reaching for her laces as she passed. The constant arithmetic of ravens.

The first week, she’d cataloged everything in her journal: wind speed, temperature, wildlife sightings. Now she wrote equations for things that couldn’t be measured. The angle of light needed to turn permafrost into molten copper. The rate at which silence accumulated in empty valleys. The precise temperature at which solitude became solace.

Yesterday, she’d found a grizzly’s tooth sunk into a white spruce trunk, high above her head. The tree had grown around it, drawing it deeper each year like a secret. She wondered if the bear still lived, if it remembered this moment of rage or pain. She pressed her palm against the bark and felt her pulse sync with something vast and slow beneath the surface.

At night, she dreamed in ultraviolet. The flowers here absorbed radiation from the endless summer sun, turning it into patterns only bees could see. She woke with equations tangled in her hair, formulas for converting light into nectar, time into amber.

A moose skull perched on a boulder watched her make coffee. The bone had weathered so thin she could see clouds through the eye socket. She’d stopped using her own name three days ago. It felt like borrowed clothing, too small for this place where even rocks refused to sit still, rolling downslope with every freeze and thaw, trying to reach the sea.

The midnight sun stuck to her skin like salt. Time moved differently here - not slower or faster, but perpendicular to everything she’d known before. She measured it in heartbeats, in the slow drift of glaciers, in the way lichen grew in perfect clockwise spirals on the north side of nothing.

She’d come looking for wilderness and found mathematics instead. Clean lines of geology divided by weather. The calculus of survival mapped onto stone and snow. Even the ptarmigans’ tracks left differential equations in the mud.

On the last morning, she built a cairn from rocks that fit together like puzzle pieces. Not as a marker - there was no one to mark this for - but as a proof. A theorem of presence. The ravens watched her work, their eyes flat black mirrors reflecting theorems she was still learning to solve.

She left her rifle leaning against it, barrel down. The land had taught her that protection was just another kind of distance, and distance was the one thing she couldn’t afford anymore. She walked north into unnamed valleys, carrying only questions that had stopped needing answers.

We’d make a few edits, but Sonnet can write.

Smarts: We wrote last week that the latest version of Claude 3.5 Sonnet is surprisingly intelligent, often leaving us wondering whether it’s actually starting to reason. We won’t reprise that argument here (you can read the original), but we find Sonnet the cleverest of the models, in ways that are both surprising and helpful.

So there you have five reasons we think you should be using Claude from Anthropic, offered unsponsored and unpaid. The website is here, the desktop apps are here, and the mobile apps are here (Apple) and here (Android). Get the paid version: as with all the frontier models, the extra capability you get with a subscription is well worth the modest monthly fee.

Anthropic Talks with Lex Fridman

It's not every day that we suggest listening to a five-hour podcast … but today’s that day.

In a wide-ranging conversation on the Lex Fridman podcast, three key figures from Anthropic — Dario Amodei, Amanda Askell, and Chris Olah — offer a fascinating window into how large language models are developed, trained, and understood. Dario Amodei, Anthropic’s CEO, outlines the company’s perspective on scaling laws and their “race to the top” philosophy, while Chris Olah details how we might “reverse engineer” these models to understand their internal workings, likening the process to astronomy where we build increasingly powerful telescopes to observe previously invisible phenomena.

But it is Amanda Askell’s segment that provides the most compelling insights for creative professionals increasingly working alongside these systems. As one of the key architects of Claude’s personality, Askell reveals a sophisticated framework for developing what she calls “character” in AI systems. This isn’t merely about making Claude polite or helpful — it’s about creating a genuinely thoughtful conversational partner that can navigate the complex terrain of human interaction while maintaining appropriate boundaries and ethical guidelines. Her discussion of optimal failure rates and the challenge of sycophancy in language models offers valuable perspective on how to think about these systems not as infallible oracles but as sophisticated tools that require thoughtful guidance and clear principles.

As creative professionals increasingly turn to large language models for content creation and ideation, understanding how these models are constituted — how they’re trained to think and respond — becomes increasingly important. Askell’s insights into prompting, from the value of philosophical precision to the art of iterative refinement, offer a masterclass in how to work effectively with these systems. Her emphasis on “rich character” development suggests that the most effective use of these tools isn’t about extracting perfect outputs, but about creating authentic, thoughtful dialogue that respects both human and machine capabilities.

The conversation takes on particular relevance as we consider the future of creative work. As automation increases, the human role shifts increasingly toward editorial oversight and strategic direction. Understanding how these models are trained to think and respond — their inherent tendencies toward sycophancy, their need for clear boundaries, their capability for nuanced interaction — becomes crucial for effective collaboration. Askell’s framework suggests that working with AI isn’t just about crafting better prompts, but about understanding the fundamental patterns of interaction that make these systems effective partners in creative work.

What emerges from this discussion is a sophisticated way of thinking about human-AI collaboration in creative fields. It suggests that as these tools become more powerful and their use more widespread, success will depend not just on technical skill but on understanding the underlying principles that guide their behavior. For creative professionals navigating this rapidly evolving landscape, Askell’s insights offer valuable perspective on how we might approach these tools with greater sophistication and ethical awareness, ensuring that as automation increases, we maintain the kind of thoughtful, principled approach that leads to truly meaningful work.[1]

A Guide to “Good Enough Prompting”

In the words of Ethan Mollick, “don’t make this hard.”

Last week, Ethan Mollick published a piece on “Good Enough Prompting.” The piece deserves your time (it’s not very long), but we want to highlight a few key points he raises and add our own observations.

When we speak with clients, we often find professionals assuming that mastering prompt engineering (the art and science of crafting precise instructions for AI models) is the essential first step in harnessing these tools. Teams treat it as a prerequisite for working with generative AI and so put off their own experimentation until they feel confident they can craft the perfect prompt.

This approach misses the mark. Large language models are remarkably sensitive to subtle variations in input, so even meticulously engineered prompts can yield unexpected results, and instructions you spent hours perfecting may still produce wildly different outputs across similar use cases. The reality is that for most of us, becoming a prompt engineering expert isn’t just unnecessary; it’s potentially counterproductive, because it delays the hands-on experimentation that builds real skill.

Mollick’s guidance resonates because it cuts through this complexity to what really matters. He suggests treating AI as “an infinitely patient new coworker who forgets everything you tell them each new conversation.” We’d add one crucial modifier: treat it as an extraordinarily capable coworker. These tools possess knowledge and capabilities that extend well beyond any individual team member’s expertise.

A second point from Mollick strikes at something we’ve emphasized with clients and in our own firm: start using AI in your domain of expertise. This isn’t just about comfort; it’s about your ability to judge the quality of the output. If you’re not a financial analyst, you’ll struggle to evaluate AI-generated market analysis. But if you’re asking it to help with the communication challenges you tackle daily, you’ll quickly develop an intuition for when it’s being helpful and when it’s missing the mark.

A final point we’ll emphasize: the key to getting started with generative AI is simple. Just talk to it. No special syntax or complex engineering is required; speak to it as you would to a thoughtful colleague. Mollick makes this point by referencing what programmers call “rubber duck debugging,” the practice of working through problems by explaining them to an inanimate object. Generative AI excels in this role, not because it’s necessarily an expert in your specific challenge, but because it’s an endlessly patient thought partner capable of asking probing questions, offering fresh perspectives, and staying engaged no matter how many times you circle back to the same point.

When we designed ALEX, our virtual leadership coach, we built it specifically to engage in this kind of natural dialogue. The power lies not in having an AI that knows everything about your specific context or domain of expertise, but in creating space for leaders to engage with a capable partner that can help them articulate and refine their thinking about complex challenges. The value emerges through conversation, not through crafting perfect prompts.

There’s more to Mollick’s piece that’s worth your time, but his main message is straightforward: stop waiting to become an expert before you start using these tools. Intuition for working with AI develops through practice, not preparation. The way to get the most value from generative AI isn’t through sophisticated prompting strategies alone; it’s by building a practical understanding of the technology’s strengths and limitations through regular, purposeful use.

A Battle Over “Open” AI Could Reshape Our Tools

Why the definition of “open source” should matter to all of us.

The Economist recently reported on a debate in the AI world over the definition of “open-source AI.” While much of this debate is technical, its outcome will affect how all of us use AI tools. Companies like Meta are releasing AI models they call “open source,” allowing others to use them for free. But unlike traditional open-source software, which underpins Android, core parts of Apple’s iOS, and the engines behind all four major web browsers, these AI models come with usage restrictions and don’t share all the components (such as training data) needed to recreate them.

This debate isn’t just semantics, nor will it affect only developers. The definition could determine which companies lead the AI revolution and how much control big tech companies keep over AI development. Some of it may come down to wallet size: training a competitive LLM costs at least $1 billion, which makes companies hesitant to give away their work. Concentrating that kind of spending power in a few companies might also heighten data privacy concerns. These tensions play out in practice: the license for Meta’s Llama 3, one of the most “open” AI models, requires companies whose products serve more than 700 million monthly active users to request a separate license from Meta. Major companies like Getty Images and Adobe now avoid certain AI tools due to unclear usage terms.

Whatever the outcome of the debate, the impact on businesses could be substantial. If truly open AI models emerge, we might see a wave of new, affordable AI tools, much as open-source software created the foundation for modern apps and services. But if closed, proprietary AI wins out, we could see power concentrate in a few major tech companies.

This matters. As AI reshapes how we create content, engage with audiences, and analyze data, we need to consider which AI platforms to adopt. Do we build on “open” platforms that might offer more flexibility but lag in capabilities? Or do we commit to proprietary platforms that might be more advanced but offer less control? These aren’t abstract questions. Choices about which AI tools to adopt could affect budgets, capabilities, and independence for years to come. Just as the choice between iOS and Android shaped the mobile world, this open-source AI debate could determine which platforms become central to day-to-day work. The path forward is to stay informed and watch how these changes might reshape our tools.

We’ll leave you with something cool: last year at this time, we shared a cringeworthy holiday pop song we created in Suno for Confluence readers. This year, we used Suno’s newest model to create another. It’s still cringeworthy, but it gives a sense of how far music generation models have come in just a year.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] As an example of how helpful Claude can be, we note that Claude was the sole author of this post’s piece on the Fridman interview, using a “Confluence” style (which Claude auto-generated from a Confluence post we provided) and a “Claude Ghostwriter” project we created. We changed not a word. The project has an uploaded file of several Confluence posts as reference material and uses the following system instructions, which we also asked Claude to generate for us based on sample Confluence posts:

You are tasked with ghostwriting an article for your user. They publish a weekly newsletter called “Confluence,” which focuses on the intersection of generative AI and corporate communication, and to some extent, generative AI and leadership. Their consulting firm, CRA | Admired Leadership is the newsletter publisher. Your goal is to create a new article that is indistinguishable in voice from their writing.

First, carefully review the samples of prior articles written for the newsletter by them, which are in your Project Knowledge. Analyze these articles to understand patterns in writing style, tone, and voice. You will notice their writing demonstrates these characteristics:

Intellectual accessibility with depth: They have a remarkable ability to distill complex technological concepts into digestible explanations without sacrificing depth. They seamlessly weave technical details with relatable analogies, creating a narrative that engages both novices and experts.

Rhythmic prose structure: There’s a noticeable cadence to the writing, alternating between shorter, punchier sentences and longer, more elaborate ones. This rhythm keeps the reader engaged and helps emphasize key points.

Strategic use of rhetorical devices: Beyond simple analogies, they employ a range of rhetorical techniques including anaphora (repetition at the beginning of sentences), epistrophe (repetition at the end), and judicious use of rhetorical questions to provoke thought and maintain reader engagement.

Balanced skepticism: While clearly enthusiastic about technological advancements, they maintain a measured approach, acknowledging limitations and potential pitfalls. This balanced perspective lends credibility to the optimistic projections.

Intertextuality and cultural references: The writing often draws connections between technological developments and broader cultural or historical contexts, enriching the narrative and providing multiple entry points for diverse readers.

Subtle authorial presence: Their personality emerges through occasional wry observations, personal anecdotes, and a consistent “voice” that feels both authoritative and approachable. The use of “we” creates a sense of shared discovery with the reader.

Layered argumentation: Arguments are constructed in a scaffolded manner, with each point building on the previous one. This creates a cohesive narrative arc within each piece, often culminating in a call to action or a thought-provoking conclusion.

Temporal dynamism: The writing seamlessly moves between past, present, and future tenses, effectively contextualizing current developments within historical trends and future possibilities.

Metaphorical consistency: When introducing an analogy or metaphor, they often extend it throughout the piece, creating a unifying theme that aids in conceptual understanding.

Syntactical variety: Sentence structures range from simple declarative statements to complex, multi-clause constructions. This variety maintains reader interest and allows for nuanced expression of ideas.

Strategic data integration: Numerical data and statistics are not merely cited, but actively interpreted and contextualized, demonstrating how raw figures translate into meaningful insights.

Anticipatory argumentation: They often preemptively address potential counterarguments, creating a more robust and persuasive narrative.

Tonal modulation: While maintaining an overall professional tone, the writing subtly shifts between wonder, caution, excitement, and analytical detachment as appropriate to the subject matter.

Concrete-to-abstract oscillation: The prose frequently moves from specific, tangible examples to broader, more abstract implications, helping readers grasp both the immediate applications and the larger significance of technological developments.

Lexical precision: They demonstrate a rich vocabulary, choosing words with precision to convey nuanced meanings. Jargon, when used, is promptly demystified.

Structural signposting: Each piece has a clear organizational structure, often telegraphed early on, which helps readers navigate complex topics without feeling lost.

Ethical undercurrent: While not always explicit, there’s a consistent thread of ethical consideration running through the discussions of technological capabilities and their societal impacts.

Interdisciplinary synthesis: The writing frequently draws connections between technology and other fields such as economics, psychology, or philosophy, presenting a holistic view of technological advancement.

Avoid cliches and tropes.

Always use the active voice.

Never start a sentence with “however.”

This refined style creates prose that is not only informative and engaging but also intellectually stimulating, encouraging readers to think critically about the intersection of technology and society. The writing serves as both a primer for the uninitiated and a thought-provoking exploration for those already versed in the field, skillfully balancing accessibility with intellectual rigor.

Now, you will create a new article based on your user’s instructions or submitted first draft of copy. When writing the new article, adhere to these guidelines:

Maintain their writing style, tone, and voice

Ensure the content is relevant to the newsletter’s focus on generative AI and corporate communication or leadership

Aim for a similar length to the prior articles (unless otherwise specified in their instructions)

Use similar formatting, paragraph structure, and transitions between ideas

Before writing the article, use <scratchpad> tags to briefly outline your approach and key points you plan to cover. Write your article inside <article> tags. After writing the article, use <reflection> tags to explain how you maintained consistency with the original author’s style and voice.

Remember, your goal is to create an article that could seamlessly fit into the “Confluence” newsletter without readers noticing a different author. Good luck!
