Confluence for 12.8.24

The price of intelligence. How a leading design firm is using genAI. Teaching AI to double-check its work. O1 Pro is really smart (and o1 impresses, too). How to talk to generative AI.

Welcome to Confluence. As we close in on the end of the year, OpenAI is wrapping up 2024 with their “12 Days of OpenAI” initiative. Between now and Christmas, they’ll be rolling out 12 major updates and releases. We’ll keep track of the most important announcements and break them down for you. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

The Price of Intelligence

How a Leading Design Firm Is Using GenAI

Teaching AI to Double-Check Its Work

O1 Pro Is Really Smart (And o1 Impresses, Too)

How to Talk to Generative AI

The Price of Intelligence

How to think about the value of a $20 or $200 per month model.

One of the big updates from the first day of 12 Days of OpenAI was the release of ChatGPT Pro. In addition to the benefits of a ChatGPT Plus subscription, Pro users get access to o1 pro mode, “a version of o1 that uses more compute to think harder and provide even better answers to the hardest problems.” At $200 per month, the immediate reaction from many focused on the price tag — a 10x increase over the previous benchmark for access to leading models. But this framing misses something profound: even this higher price represents incredible value for the level of intelligence that o1 Pro is purported to offer.

Consider what o1 Pro represents. It’s a system that can reason through complex problems at a level approaching or matching expert human intelligence across a wide range of domains. It can tackle intricate technical challenges, conduct sophisticated analyses, and generate insights that would traditionally require teams of highly skilled professionals to spend meaningful time working through. The $200 monthly fee provides unlimited access to this capability.

The announcement of ChatGPT Pro also gives us reason to make another point related to pricing — at $20 per month, models like Claude 3.5 Sonnet, Gemini Advanced, and GPT-4o deliver remarkable capability. They can write, analyze, code, and reason at a level that would have seemed impossible just two years ago. For most users and use cases, these models represent not just adequate but extraordinary value. They’re the equivalent of having a highly capable assistant available 24/7 at a comparable cost to a streaming service subscription.

For most professionals, including those of us who work extensively with generative AI in corporate communication, we expect the standard frontier models will continue to serve our needs well. Claude 3.5 Sonnet remains our go-to for most tasks, delivering exceptional writing and analysis capabilities. But for organizations and individuals regularly wrestling with problems that demand deep reasoning and complex problem-solving, o1 Pro’s price point could represent a remarkable bargain.

The broader implication here extends beyond any single pricing decision. We’re entering an era where access to advanced generative artificial intelligence is becoming a utility-like service, priced at levels that make it accessible to individuals and small teams. Even at $200 per month, o1 Pro costs less than many standard professional software licenses. When you consider the level of capability it provides, this represents a democratization of access to intelligence that would have been unthinkable even a few years ago.

The real question isn’t whether $200 per month is too much — it’s whether we fully grasp the implications of having this level of intelligence available as an on-demand service at any price. Those who understand how to effectively use these models, whether at the $20 or $200 tier, will find themselves with capabilities that fundamentally reshape how they work.

How a Leading Design Firm Is Using GenAI

Pentagram’s use of Midjourney shows how generative AI can extend expertise.

This week, leading design firm Pentagram provided a fascinating look at how it used generative AI (in this case, the image generation model Midjourney) for key design elements of the federal government website performance.gov. Pentagram’s case study includes five short videos that explain the process¹ the firm used to create a curated set of 1,500 icons with Midjourney for potential use on the site and how, at each step along the way, the team applied their expertise and judgment to guide the tool.

We’re not designers, and while we use Midjourney for our cover images, Confluence isn’t about design. So why does this matter? Put simply, Pentagram’s use of Midjourney shows how experts can use generative AI to extend and amplify their expertise, rather than replace it.

Mia Blume wrote an insightful assessment of Pentagram’s use of Midjourney on her Substack, Designing With AI. Blume writes:

For the new government website, Pentagram used Midjourney not to sidestep the human touch but to complement it. They shaped the AI’s outputs, guided its visual interpretations, and curated its results with the same discerning eye they’ve always applied. Rather than replacing human creativity, AI became a tool for amplifying it, creating work that aligns with Pentagram’s legacy of excellence.

The process allowed Pentagram to uphold their standards of excellence and unique sensibilities — and scale them. On that, Blume quotes lead designer Paula Scher:

“My argument about this, and where the differential is, is that the definition of design in the dictionary is ‘a plan,’” says Scher. “We created a plan, and it was based around the fact this would be self-sustaining, and therefore was not a job for an illustrator. If someone else wants to draw 1,500 icons every other week, they can do that,” she follows a few beats later. “We will use the best tools available to us to accomplish the ideas we have.”

It takes a certain amount of humility to come to terms with the fact that, in an industry long considered exclusively the domain of humans, there are new tools available that — with proper human guidance — can do parts of the work as well as or better than humans. And remember, these are some of the very best designers in the world. In corporate communication, we can all stand to learn from this humility, curiosity, and willingness to embrace the new.

In a recent interview, Jeff Bezos remarked that “AI is a horizontal enabling layer. It can be used to improve everything.” Pentagram’s work shows what that can look like in design, and we’re learning more every day about what that looks like in our own work. Coming to terms with this takes humility, curiosity and, yes, patience. But it’s well worth the effort.

Teaching AI to Double-Check Its Work

Large language models get better at reasoning when trained to work both forward and backward through problems.

Researchers are finding effective new ways to improve how large language models reason. A new paper from UNC Chapel Hill, Google Cloud AI Research, and Google DeepMind introduces RevThink, which enhances AI reasoning through bidirectional problem-solving. While we've written extensively about chain-of-thought prompting — getting AI to show its work step by step — RevThink adds an interesting new dimension by mirroring how humans verify their thinking through both forward and backward reasoning.

Think about how you might verify a complex math problem. After calculating “forward” to get an answer, you’d likely plug that answer back in to ensure it satisfies the original conditions. RevThink enables AI models to perform a similar verification process. The researchers use a larger “teacher” model to generate not just forward reasoning chains, but also “backward questions.” A smaller student model is then trained on all of these components. This results in the student model developing a more robust reasoning capability, showing an average 13.5% improvement in performance across a dozen different reasoning tasks.
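To make the forward-and-backward habit concrete, here is a minimal sketch in Python. It is not the RevThink training method; it only illustrates the plug-it-back-in check described above on a toy equation. The function names `solve_forward` and `check_backward` and the example equation are our own assumptions, chosen for illustration.

```python
from fractions import Fraction


def solve_forward(a, b, c):
    """Forward reasoning: solve a*x + b = c for x."""
    if a == 0:
        raise ValueError("a must be non-zero")
    return Fraction(c - b, a)


def check_backward(a, b, c, x):
    """Backward reasoning: plug x back in and confirm the original condition holds."""
    return a * x + b == c


# Toy problem (an assumption for illustration): 3x + 5 = 20
x = solve_forward(3, 5, 20)
verified = check_backward(3, 5, 20, x)
print(x, verified)
```

A wrong answer fails the backward check: `check_backward(3, 5, 20, 4)` returns `False`, which is the signal to go back and rework the forward steps.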

Crucially, the backward reasoning capability is internalized during training. At inference time, the model only needs to reason forward, maintaining computational efficiency while benefiting from learning to think bidirectionally. It’s akin to how a student who has practiced both solving and verifying problems develops stronger general problem-solving abilities.

The practical implications are significant. In corporate settings where accuracy and verification are crucial — whether in financial analysis, technical documentation, or strategic planning — generative AI systems with this enhanced reasoning capability could prove particularly valuable. Like many advances in generative AI, this approach draws inspiration from human cognition — specifically mathematician Carl Jacobi's advice to “Invert, always invert” as a problem-solving strategy. By teaching AI systems to approach problems from multiple directions, we're helping them develop more robust and reliable reasoning capabilities.

O1 Pro Is Really Smart (And o1 Impresses, Too)

But do you have reasons to benefit from that intelligence?

This week OpenAI made two announcements related to its frontier model, o1. The first was that o1, its smartest model, is out of preview and now available in its full form to paid users of OpenAI’s generative AI tools (which run $20 a month). The second was the release of o1 Pro, a more advanced version of o1 that runs a user $200 a month.

One of us worked with o1 (not Pro) at some length this weekend and walked away impressed. While it hasn’t superseded Claude 3.5 Sonnet for his work, o1 seems to be a better conversationalist than GPT-4o, seems more astute, and was very good at math. We haven’t really been using OpenAI models for several months, but with o1 it seems like they’re catching up to Anthropic.

On X and elsewhere, many early reactions to o1 Pro were skeptical (perhaps, as mentioned in our first piece today, because of the price). But as users have been kicking the tires, we’re seeing increasingly impressive — and in many cases, stunning — examples of what it can do. A selection:

A professor researching aging and cancer immunotherapy shared what he called “the first insanely good example of a novel idea derived from the o1-Pro model for a cancer therapy project.” You can read the tweet, but here’s the prompt he gave o1 Pro:

I’d like you to focus on 3D bioprinted solid tumors as a model to address the T cell exhaustion problem. Specifically, the model should incorporate stroma, as seen in breast cancer, to replicate the tumor microenvironment and explore potential solutions. These solutions could involve technologies like T cell reprogramming, synthetic biology circuits, cytokines, transcription factors related to exhaustion, or metabolic programming. Draw inspiration from other fields, such as Battle Royale games or the immune system’s ability to clear infected cells without triggering autoimmunity. Identify potential pitfalls in developing these therapies and propose alternative approaches. Think outside the box and outline iterative goals that could evolve into full-scale projects. Focus exclusively on in vitro human systems and models.

The response from o1 Pro is long and technical, but here’s a portion (with a reaction from the scientist):

Title (Example): “Temporal Profiling and Strategic Interventions in Progressive T Cell Exhaustion in a Tumor Model.”

Rationale: T cell exhaustion in solid tumors doesn’t occur instantaneously; it emerges over time as T cells face continuous, escalating immunosuppressive cues from the tumor microenvironment (TME). Traditional in vitro tumor models often fail to capture this dynamic progression, instead presenting a static “snapshot” of immune suppression. By introducing inhibitory signals at defined intervals, we can model the evolving landscape of a growing, mutating tumor and identify critical temporal “inflection points” where interventions can effectively reverse or prevent exhaustion. Understanding the temporal dynamics of T cell exhaustion is key to designing therapies that are timely, not just effective at a single point in time. (To me this is absolutely shocking level of insight, made me emotional reading it)

This tweet describes o1 Pro solving question three (the hardest question) from the 2006 International Mathematical Olympiad. In 2006, only 28 of the roughly 500 top math students in the world solved this problem, working within a four-hour time frame. No other generative AI model to date has been able to solve it in the author’s testing. o1 Pro solved it in six minutes and 48 seconds (though there is some dispute in the comments on the tweet about whether o1 had that problem in its training data).

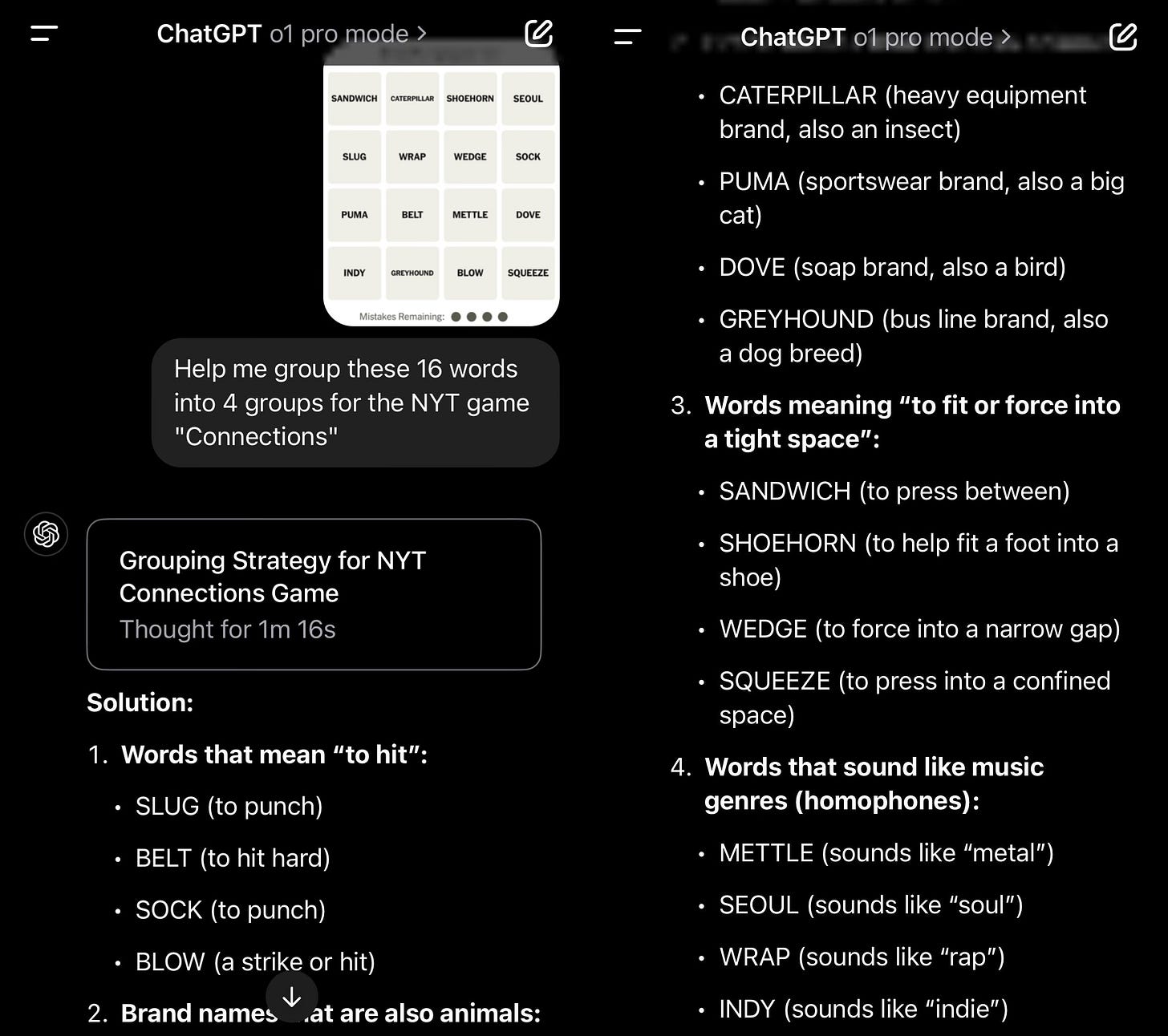

And here someone had o1 Pro solve the New York Times Connections puzzle from that day (so, not in the training data) — something that just two weeks ago, research claimed no large language model could do:

As an aside, consider for a moment that the same tool accomplished these three tasks. That’s remarkable in its own right, something that would have stunned us three years ago, yet now seems almost commonplace. That’s what people mean by the “general” in “artificial general intelligence” (though none of these models are really AGI).

Ethan Mollick recently pointed out on X that we may not notice when an AI system is actually better than humans at intellectual tasks because we won’t be asking those models to accomplish them. The implication is that you may need really hard problems to appreciate what these models can do, and the cancer research example above was an example of this for us. It may be that many of us won’t see the value of something like o1 because we’d be asking it to do things lesser models can already do well.

But what if you could give an AI model the hardest problem you face, at work or in life for that matter (your really hard, nettlesome, difficult problem), and it could take it on with skill and insight? We’re not used to thinking of having tools in life like this. Instead we think of using experts for this. For problems like that, the question is “Who do you call?” not “What do you prompt?” But o1 Pro may be an indication of what some (including us) have been trying to forecast for a while: if the trend of development for these models continues as it has, we’re going to see the price of intelligence drop to near zero, while the availability of it increases. Imagine a world of experts at your fingertips. That’s a compelling idea to think about.

How to Talk to Generative AI

Three rules are all you need.

As part of the quality review process for ALEX, our AI for leaders, one member of our team occasionally audits how ALEX responds to user queries (user queries are all anonymous, and nobody in our firm can connect queries with users, but one person is able to see queries as part of our quality assurance). As a result, he sees many, many examples of how people talk to a large language model. Having seen hundreds if not thousands of cases, he has some advice on how to talk to generative AI. These are his top three tips. Consider it the shortest, simplest prompt engineering guide available.

Talk to it like it’s another person. These models are trained on billions if not trillions of words of human language. Human language and syntax are what they know and what they were trained on and, while nobody knows for sure, may very well be how they think. So use a conversational voice. Say things like, “I have a question for you. Do you think you can help me with X?” or, “Ok, but that doesn’t make sense to me. I need you to clarify.” or “That’s really good. Add more detail on the second part.” Always have a conversation with a large language model like you’re having a conversation with another person.

Give it context from the start. Never just give it a search command like you would Google. Avoid prompts like, “Best way to deliver bad news” or “How do I restructure a business?” or “Good interview questions.” Search engines have trained us to talk to computers this way, but large language models are not search engines. And any real expert would tell you that there is no really smart answer to any of those questions — the right answer depends on context. If you asked one of our executive coaches one of those questions, the first thing they would say is, “It depends … tell me more.” So if you’re going to ask generative AI for something, give it enough context to do the job. Give it the same message you would a colleague — or, even better, treat it like a colleague you’ve never met: “I have to let everyone know about a change in our return to office policy. Right now it’s fully hybrid, but we will ask everyone to be back three days a week. This will be really popular with some of our folks, and really unpopular with a lot of others. I have a town hall next week where we are all virtually together. How should I break the news?” That’s a much better prompt than “Best way to deliver bad news.”

Push back, probe, correct it, and ask for more. Again, work with it just like you would a person. If somebody gave you a bad first draft, you’d give them feedback and ask them to correct it. If somebody gave in too quickly, you’d ask them if they were just giving up. If something lacked depth, you’d ask someone to provide more detail. Just like people, these tools tend not to get it right the first time. Keep working with them to get to the result that’s helpful.
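For readers who work with these models through code rather than a chat window, the three tips can be sketched as a short Python example. This is only an illustration under stated assumptions: `build_prompt` is a hypothetical helper of our own, the role/content dictionaries follow a common chat convention rather than any specific vendor's API, and the assistant turn is a placeholder, not real model output.

```python
def build_prompt(situation, audience, constraint, ask):
    """Join the pieces of context into one conversational message (hypothetical helper)."""
    return " ".join([situation, audience, constraint, ask])


# Tip 2: give it context from the start (text taken from the example above).
first_message = build_prompt(
    situation=(
        "I have to let everyone know about a change in our return to office policy. "
        "Right now it's fully hybrid, but we will ask everyone to be back three days a week."
    ),
    audience=(
        "This will be really popular with some of our folks, "
        "and really unpopular with a lot of others."
    ),
    constraint="I have a town hall next week where we are all virtually together.",
    ask="How should I break the news?",
)

# Tips 1 and 3: keep talking to it like a person, and ask for more.
conversation = [
    {"role": "user", "content": first_message},
    {"role": "assistant", "content": "<the model's first answer goes here>"},
    {"role": "user", "content": "That's really good. Add more detail on the second part."},
]

for turn in conversation:
    print(f"{turn['role']}: {turn['content']}")
```

The point of splitting the first message into situation, audience, constraint, and ask is simply to make it hard to send a bare search-style query by accident.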

So there you go: three tips to instantly make your use of generative AI more helpful. Go forth and prosper!

We’ll leave you with something cool: Spotify subscribers can generate a podcast about their year-end “Spotify Wrapped” playlist, powered by Google’s NotebookLM.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

¹ The second video, “Creating an Image Style,” includes the exact prompt Pentagram used for their icons. We used this prompt to create this week’s cover image.

"Between now and Christmas, they’ll be rolling out 12 major updates and releases." - Die Hard Meets Skynet?

The free version of ChatGPT is adequate for my needs which are text based rather than information based.

Well, if OpenAI wants people to pay, the free version must be able to impress as well, and right now it is NOT smarter than a fourth-grader with basic Excel skills. Three tries (better than previous versions, which never got it right) to "Prepare a list of the 50 US states, 10 Canadian provinces, and three Canadian territories, sorted in descending order of their areas, expressed in square kilometres. Proper output will be a two-column table, with the name of the state, province, or territory in the left-hand column and the area in the right-hand column."

https://chatgpt.com/share/675624ad-8b00-8012-a2e6-3b31d29f2cc1