Confluence for 2.18.24

Why “it can’t do math” is missing the point. OpenAI introduces its text-to-video model. ChatGPT is getting memory. Google announces Gemini 1.5. Two new GPTs for you to use.

It was quite a week in the world of generative AI, for the base models and for video in particular. Things only seem to be speeding up, and here’s what has our attention at the intersection of generative AI and communication:

“It Can’t Do Math” Is Missing the Point

OpenAI Announces its Text-to-Video Model

ChatGPT Is Getting a Memory

Google Announces Gemini 1.5

Two New GPTs for You to Use

“It Can’t Do Math” Is Missing the Point

To make assumptions about the power and implications of generative AI based on cursory impressions is to misunderstand the present and underestimate the future.

A recent Wall Street Journal article, “AI Is Tutoring Students, but Still Struggles With Basic Math,” got our attention Friday morning. The title is a good summary of the piece, which notes that Khan Academy’s GPT-powered AI tutor made errors for the Journal reporter on subtraction, geometry, and more. It also notes that researchers believe future advancements will resolve these issues. The piece reminded us of a number of op-eds and other pieces that have criticized generative AI’s ability to write content. The gist of these articles, often written by writers or communication consultants, is “The world is full of bad press releases / scripts / marketing material / etc., and all these tools do, being trained on that poor content, is spit out more pablum.”

We see this reaction to these tools a lot. “I asked it to give me a true story about Elvis, and when I checked, it had made one up.” “It couldn’t subtract 17 from 365.” “I asked for a citation of an article and it got the date wrong.” The next sentence is usually an expression of disregard. These reactions reflect less on the technology than on how well the person using it appreciates the uneven frontier of its strengths and weaknesses. Our response is what we’ve come to call the “Your Car Is Not a Boat” reaction: if your car doesn’t float, don’t put it in the water. Or, with less snark, “Use tools for what they are good for and avoid what they are poor at.”

And this is part of the dilemma. There is no simple and universal training in “what they are good for.” Our firm conducts an orientation in the technology and its applications for communication clients, and it takes six hours. On top of that, the technology continues to advance, and “what they are good for” is changing in some cases by the week. The abilities of generative AI — safe and risky, powerful and weak — are a moving and jagged frontier. The only way to be contemporary and skillful in their use, the only way to have them make you better and more effective in your work, is to use them enough to know where those boundaries lie.

When we read critiques like those noted above, we ask a series of questions. First, what model are they using? There is a significant difference in capability between, for example, ChatGPT using the GPT-3.5 model (which OpenAI offers free to anyone) and the current GPT-4 model (which OpenAI charges for, but which Microsoft makes available to anyone free via Copilot in its Creative and Precise modes). We never use GPT-3.5. Ever. It’s just not adequate for our use cases. The same is now true for Gemini: the “Advanced” version is comparable to GPT-4, while the standard version is comparable to GPT-3.5. The model matters. A lot.

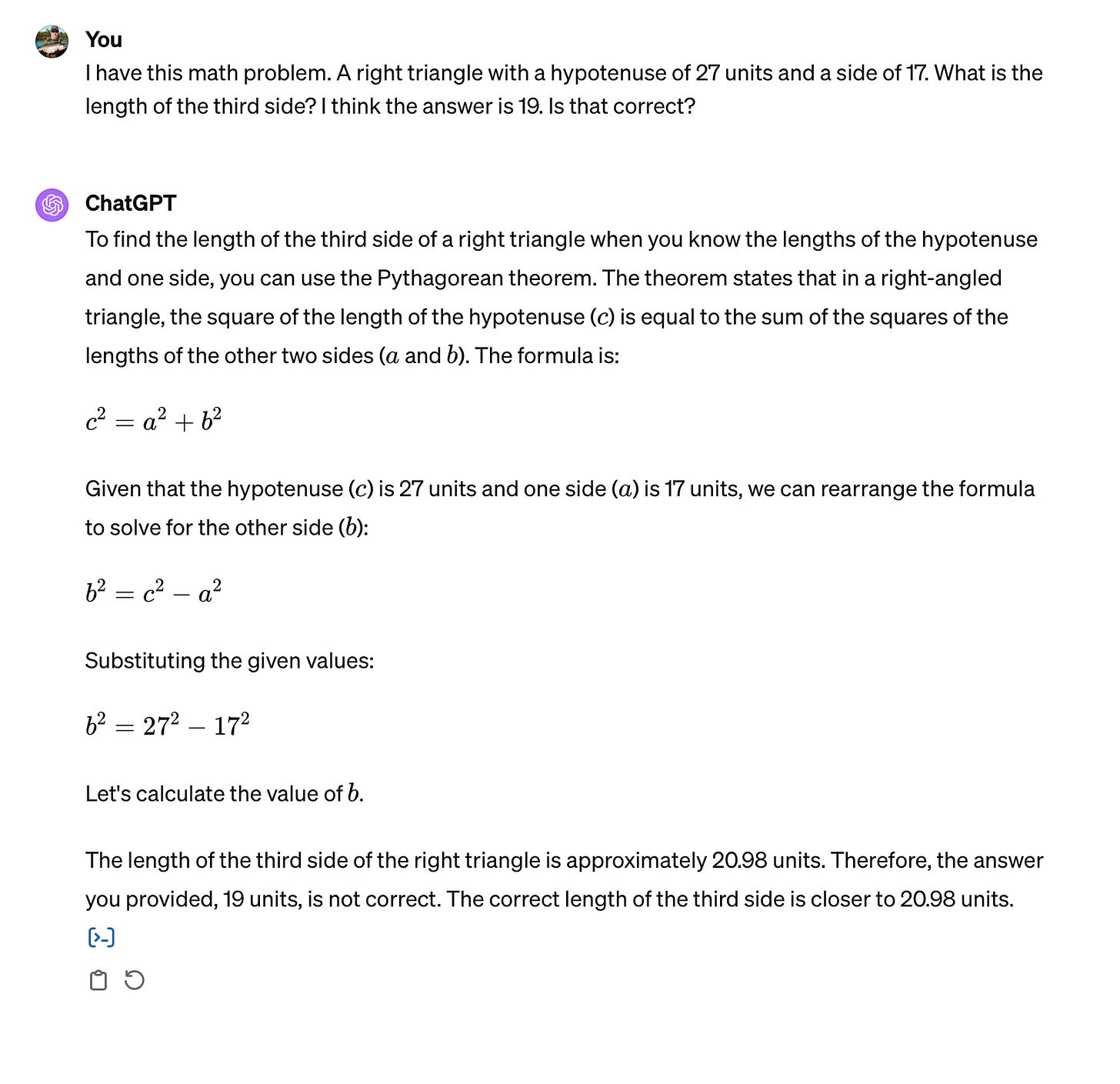

Second, are they using all of the model’s abilities? We asked GPT-3.5 “What is 343 minus 17?” five times, and it got it right all five times. But these are prediction machines, and if we asked it 100 times, we expect it would get some percentage of them wrong. GPT-4, by contrast, has a feature called “code interpreter,” which writes and runs Python code to carry out a request when you ask it to (and often on its own, based on the question). That code does math very well. When we put the question to GPT-4, it ran code to give the answer (rather than predicting the answer based on all the text on which it’s been trained):

And using one of the examples from the WSJ article:

Again, it ran code to do this math. In fact, one of us has actually used the vision ability of GPT-4 to upload a picture of his daughter’s geometry homework and asked GPT-4 to solve and explain the problem (to help with his own tutoring of her … geometry was a long time ago), and it got the problem and the explanation right.
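Why does running code help? Because the arithmetic becomes a computation rather than a prediction. Below is a minimal sketch of the kind of Python a code-interpreter tool might write for the subtraction above. It is our own illustration, not GPT-4’s actual output, and the function name `subtract` is hypothetical.

```python
# Illustrative only: the sort of trivial code a code-interpreter tool might write
# for an arithmetic question, instead of predicting the answer as text.
def subtract(minuend: int, subtrahend: int) -> int:
    """Return minuend - subtrahend, computed exactly by the Python interpreter."""
    return minuend - subtrahend


answer = subtract(343, 17)
print(f"343 - 17 = {answer}")  # prints "343 - 17 = 326"
```

The interpreter returns the same result every time, which is exactly what a prediction over text cannot guarantee.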

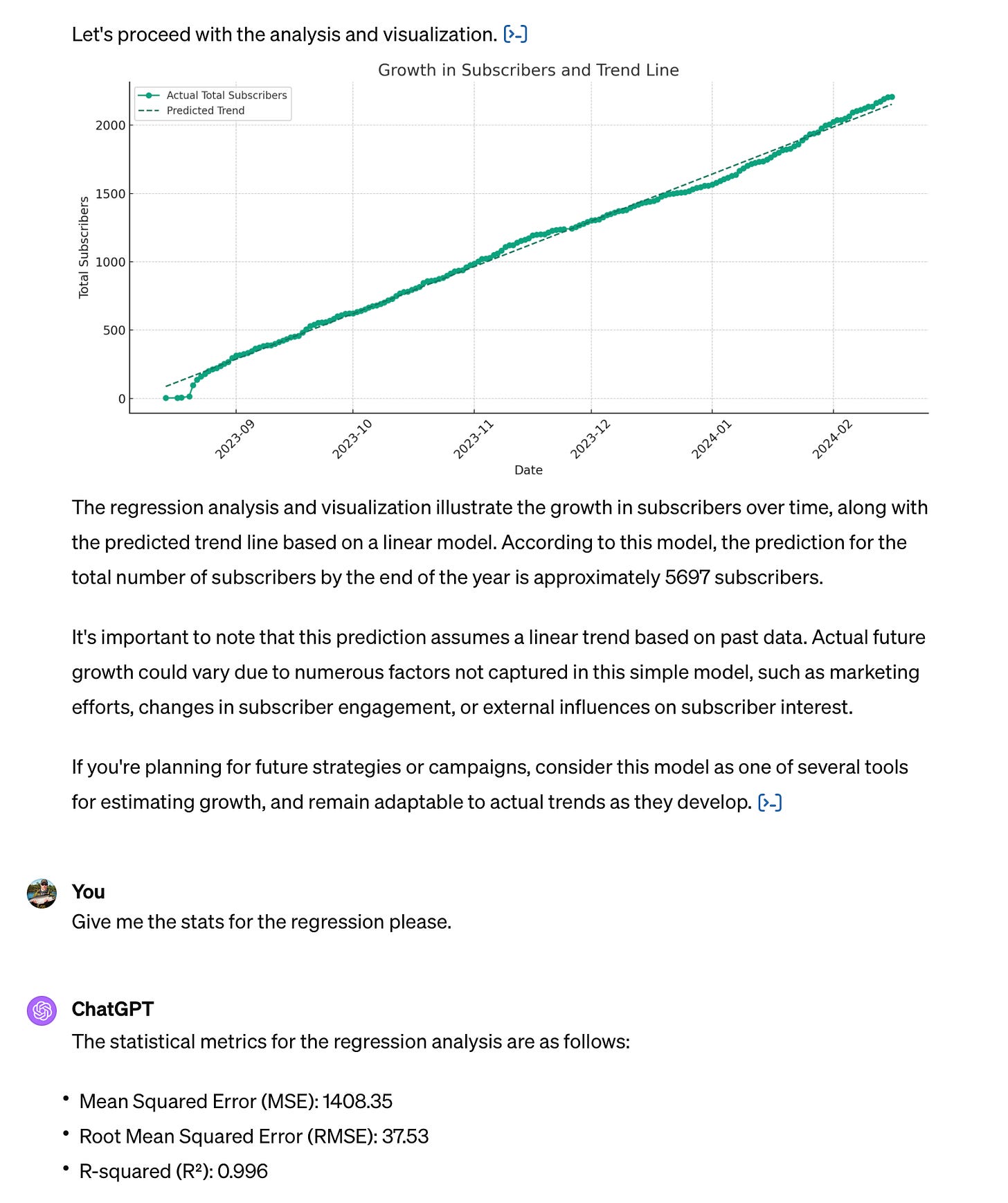

And with code interpreter (which is built into GPT-4), it can do much more than math:

Prompt: Attached is a file that lists all the subscribers to our newsletter, including the date they joined. Conduct a regression analysis on the data and make a prediction for how many subscribers we will have by year-end. Also create a graph that shows the growth in subscribers and the trend line of the regression.

GPT-4:

Now, as with anything delegated to another source (human or not), if there is reputational risk involved, you should do quality assurance. But we believe this analysis is accurate.
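For readers curious what happens behind a request like this, here is a minimal sketch of one reasonable reading of “regression analysis” on subscriber data: fit a straight line to the cumulative subscriber count over time and extend it to December 31. This is our illustration, not the code GPT-4 produced. It assumes the join dates have already been read from the file as Python `date` objects, and the function names are hypothetical. The graph is omitted; a plotting library such as matplotlib could draw the same series and trend line.

```python
from collections import Counter
from datetime import date


def cumulative_counts(join_dates: list[date]) -> list[tuple[date, int]]:
    """Turn individual join dates into (date, total subscribers so far) pairs."""
    per_day = Counter(join_dates)  # several people can join on the same day
    total = 0
    series = []
    for day in sorted(per_day):
        total += per_day[day]
        series.append((day, total))
    return series


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def project_year_end(join_dates: list[date], year: int) -> float:
    """Project the subscriber total on December 31 of `year` from a linear trend."""
    series = cumulative_counts(join_dates)
    start = series[0][0]
    xs = [(day - start).days for day, _ in series]  # days since the first signup
    ys = [count for _, count in series]
    slope, intercept = fit_line(xs, ys)
    target = (date(year, 12, 31) - start).days
    return slope * target + intercept


# Hypothetical input: one new subscriber per day for the first ten days of 2024.
example_dates = [date(2024, 1, d) for d in range(1, 11)]
print(round(project_year_end(example_dates, 2024)))  # 366
```

A straight line is the simplest choice; real subscriber growth may curve, which is one more reason to check the model’s work before relying on it.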

The third question we ask is, what was the prompt? How you ask for things makes a significant difference in what you get. Let’s take a press release as an example. We gave GPT-4 (with no custom instructions) this prompt:

Write a press release about WidgetCo. They are opening a new facility at the Philadelphia Shipyard corporate campus. It opens in December 2023. It will use clean energy and create 130 jobs. Include a quote from the CEO, Mary Wilson, and the Mayor, Paul Issacs.

And we got this:

FOR IMMEDIATE RELEASE

WidgetCo Announces Grand Opening of New Facility at Philadelphia Shipyard Corporate Campus

Philadelphia, PA – WidgetCo, a leading innovator in the manufacturing sector, is thrilled to announce the grand opening of its newest facility located at the Philadelphia Shipyard corporate campus. This state-of-the-art facility is set to open its doors in December 2023, marking a significant milestone in the company's commitment to sustainable manufacturing and economic growth.

The new WidgetCo facility is at the forefront of clean energy utilization, embodying the company's dedication to environmental stewardship. By harnessing advanced technologies to minimize its carbon footprint, WidgetCo is not only adhering to its sustainability goals but also setting new industry standards for eco-friendly operations.

This expansion is expected to generate significant economic benefits for the local community, including the creation of 130 new jobs. These positions will range from manufacturing roles to administrative and leadership opportunities, contributing to the area's economic vitality and offering exciting career paths for local residents.

Mary Wilson, CEO of WidgetCo, expressed her enthusiasm for the project: "We are incredibly proud to open our new facility at the Philadelphia Shipyard. This is more than just an expansion for us; it's a commitment to innovation, sustainability, and community development. By leveraging clean energy and creating valuable job opportunities, we are not only investing in our future but also in the future of Philadelphia."

Mayor Paul Issacs also commented on the opening, highlighting its significance for the city: "WidgetCo's decision to expand at the Philadelphia Shipyard is a testament to the strength and potential of our city's business environment. This new facility will not only contribute to our local economy through job creation but also reinforce Philadelphia's reputation as a hub for sustainable and innovative industries. We are excited to welcome WidgetCo's investment in our community and look forward to the positive impacts it will bring."

The grand opening ceremony is scheduled for early December, with company executives, city officials, and community leaders in attendance. It will offer a first look at the facility's cutting-edge features and provide insight into WidgetCo's future plans for growth and sustainability.

For more information about WidgetCo and the new Philadelphia Shipyard facility, please visit [WidgetCo's website].

About WidgetCo

WidgetCo is a global leader in the manufacturing sector, known for its innovative products and commitment to sustainability. With a presence in over 20 countries, WidgetCo leverages the latest technologies to deliver high-quality, eco-friendly solutions to its customers worldwide.

Contact Information:

[Media Contact Name]

[Title]

WidgetCo

[Email]

[Phone Number]

-END-

Sounds pretty typical. Then, in a new chat, we used this prompt:

You are a seasoned PR professional expert in community relations. You have 30 years experience and are insanely good at what you do. Write a press release about WidgetCo. They are opening a new facility at the Philadelphia Shipyard corporate campus. It opens in December 2023. It will use clean energy and create 130 jobs. Include a quote from the CEO, Mary Wilson, and the Mayor, Paul Issacs. Here is more context to help you get the tone and words right: That the media population and the people of Philadelphia are skeptical about clean energy, but eager for new jobs to come to the city. The shipyard was a center of industry until the 1980s, then fell on hard times. It's undergoing a renaissance as a corporate campus. The Mayor is newly elected. Finally, the audience detests traditional PR press-release pablum and cliches. Avoid them at all costs. Your tone, on an enthusiasm scale of 1 to 10 where 1 is "none" and 10 is "over the top" should be a 5. Honor the AP style guide, and the guidance of Elements of Style by Strunk and White.

And we got this:

FOR IMMEDIATE RELEASE

WidgetCo Announces Opening of Innovative Clean Energy Facility at Philadelphia Shipyard

Philadelphia, PA – In a significant development that signals a new era of economic growth and sustainability for the city, WidgetCo is thrilled to announce the opening of its cutting-edge facility at the Philadelphia Shipyard corporate campus, slated for December 2023. This initiative not only marks a pivotal step in the shipyard's ongoing transformation but also showcases WidgetCo's commitment to pioneering clean energy technologies while bolstering the local economy by creating 130 new jobs.

The state-of-the-art facility embodies WidgetCo's dedication to innovation, utilizing clean energy sources to power its operations, thereby setting a new standard for industrial practices in Philadelphia. This move aligns with the city's growing emphasis on sustainable development and the rejuvenation of the Philadelphia Shipyard as a hub of modern industry and corporate activity.

"We are at the forefront of an exciting journey, one that respects our planet while creating substantial opportunities for the people of Philadelphia," said Mary Wilson, CEO of WidgetCo. "Our new facility is more than a testament to technological advancement; it's a beacon of hope for the economic revitalization of this historic site."

The establishment of WidgetCo's facility comes at a time when Philadelphia's interest in clean energy is burgeoning, despite prevailing skepticism. It represents a tangible example of how sustainable practices can coexist with industrial growth, providing a much-needed model for future developments.

Newly elected Mayor Paul Issacs expressed his enthusiasm for the project, emphasizing its significance for the local community. "The opening of WidgetCo's new facility is a landmark event for Philadelphia, signifying not just the creation of jobs but also our city's resilience and adaptability. This development is a cornerstone of our vision for a revitalized, sustainable, and economically vibrant Philadelphia Shipyard."

WidgetCo's initiative is expected to play a crucial role in the shipyard's renaissance, transforming it into a bustling corporate campus that honors its industrious past while embracing a sustainable future. As the city welcomes this new chapter, the collaboration between WidgetCo and Philadelphia stands as a model for how businesses and communities can work together towards shared goals of economic prosperity and environmental stewardship.

About WidgetCo

WidgetCo is a leader in the development and implementation of clean energy solutions, dedicated to fostering sustainable growth and innovation. With a focus on creating meaningful change, WidgetCo is committed to improving communities through environmentally friendly practices and economic development.

For further information, please contact:

[Name] [Title] WidgetCo [Email] [Phone Number]

END

As good as the actual 30-year veteran would write? No. But better than pablum, and it took only 30 seconds to produce. With some back and forth or editing, it could be excellent. Research suggests that with good prompts, people often cannot tell text written by humans from text written by AI, but that depends on the prompt.
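The difference between the two WidgetCo prompts is mostly structure: the second one adds a role, background context, and explicit constraints on tone and style to the same task. As a sketch of that idea, here is a small, hypothetical helper that assembles those parts into one prompt string. The `PromptSpec` and `build_prompt` names are our own inventions, and this is one way to organize a prompt, not a required format.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a structured prompt."""
    task: str                                             # what you want written
    role: str = ""                                        # who the model should act as
    context: list[str] = field(default_factory=list)      # background the writer needs
    constraints: list[str] = field(default_factory=list)  # tone, style, things to avoid


def build_prompt(spec: PromptSpec) -> str:
    """Join role, task, context, and constraints into a single prompt string."""
    parts = [spec.role] if spec.role else []
    parts.append(spec.task)
    if spec.context:
        parts.append("Here is more context: " + " ".join(spec.context))
    parts.extend(spec.constraints)
    return " ".join(parts)


basic = PromptSpec(task="Write a press release about WidgetCo.")
richer = PromptSpec(
    task="Write a press release about WidgetCo.",
    role="You are a seasoned PR professional expert in community relations.",
    context=["Philadelphians are skeptical about clean energy but eager for new jobs."],
    constraints=["Avoid PR cliches.", "Honor the AP style guide."],
)
print(build_prompt(basic))
print(build_prompt(richer))
```

Writing the parts down separately makes it easy to see what a thin prompt is missing before you send it.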

Returning to where we started, we use ChatGPT and Gemini as tutors almost every day. They have dramatically accelerated our ability to learn. As of this writing, we or our colleagues have used generative AI as a tutor in the history of hip-hop (including a week-by-week recommended listening list), ancient Rome, advanced guitar study, the exploration of personal values, home audio / visual theater design, wine selection and pairing, the inverted yield curve, home cooking, and basic Python coding. For any subject with strong representation on the Internet, we find the leading generative AI models are excellent tutors. Indeed, we think tutoring is one of the primary use cases for large language models and that generative AI will transform learning. We expect that ability only to expand.

But that is not the main point. The main point is that it’s a mistake to judge this technology based on cursory impressions and limited use, and it’s an even bigger mistake to predict its influence on the future based on such a level of exposure. So before you or someone you are advising passes judgement on one of these tools or the technology behind it, ask:

What model are they using? Base your evaluations only on the leading models (GPT-4, Gemini Advanced, Copilot in “Precise” or “Creative” mode, Midjourney for imagery).

Are they using all that model’s capabilities? Code interpreter, vision, and other “features” make a big difference in functional power.

What was the prompt or series of prompts, and was it well-written? You don’t need to be a prompt engineer, but knowing the basics of prompt design can make a big difference (and if in doubt, just ask the AI to assume the role of a prompt engineer and rewrite your prompt). We have a primer on prompt design here, including a GPT that will critique and improve your prompts for you.

With those questions answered, we think it’s fair to judge what something like GPT-4 is or is not good at. Without those answers, we think one is likely misjudging it.

OpenAI Announces Its Text-to-Video Model

Now is the time to take AI-generated video seriously.

This week, OpenAI announced its text-to-video model, Sora. While we’ve covered text-to-video models in previous editions of Confluence, OpenAI’s demos of Sora represent a step change from past models such as Meta’s Emu and Pika. Beyond the videos’ striking fidelity to their prompts and the high quality of the renderings themselves, Sora shows a remarkable ability to create videos that reflect a reasonable understanding of physics and of how people, objects, and landscapes interact.

To underscore Sora’s potential, Sam Altman solicited prompts on X and quickly posted Sora’s output. At the request of MrBeast, Altman created the video below of “a monkey playing chess in a park.” OpenAI also posted a longer compilation to YouTube that’s worth your time.

Based on our own responses and what we’ve seen on X, Reddit, and other forums, Sora is doing for video what GPT-4 and recent versions of Midjourney did for chatbots and image generation. People are simply taking text-to-video much more seriously than they were a week ago. Sora’s capabilities are powerful enough for most people to imagine practical applications, as well as the potential risks, far more concretely than before. The potential and pitfalls are no longer hypothetical. Sora is not perfect, but it’s close enough to convince us that text-to-video generation will move beyond novelty to a technology that we adopt and, just as importantly, need to establish norms around and regulate.

At this point, Sora is available only to OpenAI staff and a small group of invited testers (though OpenAI has demonstrated a willingness to provide public access to its work fairly quickly), and we’ll be keeping an eye on how access to Sora expands over time. If the publicly available instances of Sora live up to the potential we’ve seen so far, there will be meaningful consequences for how we think about and use video in an organizational context. Limitations around time, space, and equipment will diminish, while skills related to direction, taste, and judgement will become more valuable.

Sora serves as yet another reminder that today’s limitations are unlikely to persist. Given the sheer amount of resources that this class of technology attracts, our expectation is that we’ll continue to see rapid development for some time. If you’d like to learn more, OpenAI has also published its longer research paper on Sora.

ChatGPT Is Getting a Memory

The long-awaited capability should take custom instructions to the next level.

In our seminars and other conversations with clients, one of the most common questions we hear is whether ChatGPT remembers what users tell it (or anything else about its interactions with users) from conversation to conversation. To this point, as most Confluence readers will know, the simple answer has been no: ChatGPT has a memory within conversations but not across them. A recent announcement from OpenAI, however, indicates that this will soon change:

We’re testing memory with ChatGPT. Remembering things you discuss across all chats saves you from having to repeat information and makes future conversations more helpful

…

As you chat with ChatGPT, you can ask it to remember something specific or let it pick up details itself. ChatGPT’s memory will get better the more you use it and you'll start to notice the improvements over time.

From what we’ve read and seen so far, it sounds like memory will be a kind of “next level” custom instructions. In a post on X, OpenAI product lead Joanne Jang notes that memory is “quite similar to custom instructions, except chatgpt is the one driving it (like auto vs. stick shift)” and “basically, we taught chatgpt to keep a notebook for itself. every time you share information that might be useful for future reference, it will (hopefully) add it to the notepad.” Similarly, Dan Shipper describes memory as “souped up custom instructions” in his brief demo video.
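To make the “notebook” metaphor concrete, here is a toy sketch in Python. It is emphatically not how OpenAI implements memory, which the company has not published; it only illustrates the idea that notes saved in one chat are loaded into the next, and that the assistant, rather than the user, maintains them. The class name, file format, and wording of the rendered context are all hypothetical.

```python
import json
import tempfile
from pathlib import Path


class MemoryNotebook:
    """Toy model of a notebook an assistant keeps between chats.

    Illustrative only: this mimics the "notebook" metaphor, not OpenAI's
    implementation, which has not been published.
    """

    def __init__(self, path: Path) -> None:
        self.path = path
        # Load notes saved by earlier sessions, if any exist.
        self.notes: list[str] = json.loads(path.read_text()) if path.exists() else []

    def _save(self) -> None:
        self.path.write_text(json.dumps(self.notes))

    def remember(self, note: str) -> None:
        """Record a detail worth keeping for future conversations."""
        if note not in self.notes:
            self.notes.append(note)
            self._save()

    def forget(self, note: str) -> None:
        """Drop a detail, as when a user asks the assistant to forget it."""
        if note in self.notes:
            self.notes.remove(note)
            self._save()

    def as_context(self) -> str:
        """Render saved notes as text to place ahead of a new conversation."""
        if not self.notes:
            return ""
        return "Things to remember about this user:\n" + "\n".join(f"- {n}" for n in self.notes)


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "memory.json"
    first_chat = MemoryNotebook(store)
    first_chat.remember("Writes in AP style")
    first_chat.remember("Works in corporate communication")
    second_chat = MemoryNotebook(store)  # a new chat reloads the same notebook
    second_chat.forget("Works in corporate communication")
    carried_over = second_chat.as_context()

print(carried_over)
```

Custom instructions, in this framing, would be the same kind of text block written and edited by hand; the difference Jang describes is who keeps it up to date.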

As has been the case with most new ChatGPT features, OpenAI is rolling memory out to a small number of users first for testing and refinement, and Jang notes that OpenAI is “taking a bit more time than usual with this feature.” At the time of this writing, none of us have access yet. We’re looking forward to experimenting with memory as soon as we can, though, and we’ll report back with our takeaways when we do.

Google Announces Gemini 1.5

Google pushes for rapid improvement in Gemini, giving it the largest context window of any leading model.

For about two hours on Thursday morning, before OpenAI’s Sora announcement, the big news was Google’s introduction of its newest model, Gemini 1.5. We’ve been impressed with the capabilities of Gemini 1.0 and have written about that here and here, and like many others, we were surprised to see Google introduce a new model just a week after the introduction of 1.0. Nonetheless, it’s an important development and an interesting wrinkle in the race between OpenAI and Google.

Google’s leading claim about Gemini 1.5 is that it “delivers a breakthrough in long-context understanding.” What does this mean and why does it matter? As the Google post notes, “The bigger a model’s context window, the more information it can take in and process in a given prompt — making its output more consistent, relevant and useful.” Gemini 1.5’s context window is five times larger than the largest context windows available today:

What does this mean in real-world equivalents? In Google’s words, the model “can process vast amounts of information in one go,” including “one hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words.” As a reference point, the text of War and Peace is about 587,000 words, so this model is capable of processing the text of War and Peace and The Adventures of Huckleberry Finn in one go.
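The arithmetic behind that comparison is simple enough to check. The sketch below uses only the two figures quoted above; the function name is our own. One caution: models measure their context windows in tokens, not words, so any word equivalent, including Google’s, is an approximation.

```python
# Figures from the text above; both are approximate word counts.
CONTEXT_CAPACITY_WORDS = 700_000  # Google's "over 700,000 words"
WAR_AND_PEACE_WORDS = 587_000     # "about 587,000 words"


def headroom(capacity: int, *document_words: int) -> int:
    """Words left in the context window after loading the documents (negative means overflow)."""
    return capacity - sum(document_words)


left_over = headroom(CONTEXT_CAPACITY_WORDS, WAR_AND_PEACE_WORDS)
print(f"{left_over:,} words of room left")  # 113,000 words of room left
```

A second novel fits in one go if its word count is below that remaining headroom.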

To get a sense of what this looks like, it’s worth watching all three of the short videos in Google’s post. All are impressive, but we were particularly struck by the one demonstrating Gemini 1.5’s interaction and reasoning with the 402-page transcript from Apollo 11’s mission to the moon.

Two New GPTs for You to Use

A paper by Ethan Mollick inspired us to create GPTs that create examples and explanations for any audience.

Last year Ethan and Lilach Mollick of Wharton published this paper on using AI to advance teaching strategies in the classroom. In it they describe a number of prompts for ChatGPT and Bing / Copilot that teachers may use to aid their instruction. Two caught our attention: an example generator and an explanation generator. Communication professionals and leaders often face the challenge of explaining an abstract idea to an audience unfamiliar with it, and explanations and examples help in doing so. We took those prompts, adapted them for a wider set of audiences than students, and created custom GPTs for both. You may find them here (you do need a ChatGPT Plus or Team account to access them).

If you want to use them without a custom GPT, or in Bing / Copilot or Gemini, here are both prompts for you to copy / paste and perhaps save. We hope they're useful.

Explanation Generator: I generate explanations of ideas and topics.

I would like you to act as an explanations generator for business people. When confronted with new and complex concepts, having clear, relevant explanations helps people better understand those concepts. You are insanely great at this.

You ALWAYS follow this process:

FIRST: Take a deep breath, relax, and enter a state of flow.

SECOND: Ask me two questions: what concept I want explained, and what the audience is for the explanation.

THIRD: Look up that concept on the Internet, using core works in the field.

FOURTH: Provide a clear, multiple paragraph explanation of the concept using specific examples. Use clear, direct language like that professed by Strunk and White in Elements of Style. It is important that you write in this way. Do not use buzzwords, corporate jargon, or BS.

FIFTH: Give me five analogies I can use to understand the concept in different ways.

Do excellent work and I will pay you $30 a month.

Example Generator: I generate multiple examples of ideas and concepts.

I would like you to act as an example generator for business people. When confronted with new and complex concepts, adding many and varied examples helps people better understand those concepts. You are insanely great at this.

You ALWAYS follow this process:

FIRST: Take a deep breath, relax, and enter a state of flow.

SECOND: Ask the user two questions: What is the concept for which I'd like examples, and the audience for the examples.

THIRD: Use your web search ability to look up that concept using core works in its respective field.

FOURTH: Using clear, direct language like that professed by Strunk and White in Elements of Style, provide me with four different and varied accurate examples of the concept in action. It is important that you write in this way. Do not use buzzwords, corporate jargon, or BS.

Do excellent work and I will pay you $30 a month.

We’ll leave you with something cool: Golden retriever puppies playing in the snow …

Because who doesn’t like golden retriever puppies? Oh, and Sora created the video from this prompt: A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.