Confluence for 5.18.25

Why you need to pay attention to Codex. A look back. The power of shared inquiry and experimentation. What happens when generative AI invents novel solutions?

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI, leadership, and corporate communication:

Why You Need to Pay Attention to Codex

A Look Back

The Power of Shared Inquiry and Experimentation

What Happens When Generative AI Invents Novel Solutions?

Why You Need to Pay Attention to Codex

The new OpenAI coding agent is a bellwether.

The big news in generative AI this week was OpenAI’s release of Codex, their computer programming agent. While the software development and AI communities paid a lot of attention to Codex, it did not register on the radar of most leaders and communication professionals.

But it should.

Per OpenAI, “Codex takes on many tasks in parallel, like writing [application] features, answering codebase questions, running tests, and proposing PRs for review. Each task runs in its own secure cloud sandbox, preloaded with your repository.” Translated into plain English: “Codex works like a digital assistant that can handle multiple coding jobs at once. It can help write new software features, answer questions about your code, check if your code works correctly, and suggest changes for your team to review. Each of these jobs happens in its own private, secure online workspace that already has your project’s code loaded and ready to go.”

Watch this video at 1:14 in to get a sense of how it works:

In brief, Codex has access to all the programming code a programmer is working on, and the libraries and other applications they use to work on that code. Codex can read and write to that codebase, and it can do so in parallel paths. So, as an example, the programmer can have Codex read their code and create a list of bugs it has found and improvements it could make. The programmer can ask Codex to do those things as individual tasks while adding their own tasks to the list (in the video, “See archived tasks, “Fix trigger notification,” “Add Live Activity updates,” “Write ConversationObserver”) based on what they want to do with the code next, and Codex pursues those tasks independently and in parallel.

So let’s keep that functionality, but change the domain to, say, communication. A communication professional has a folder of documents about a large project they are working on. They want to identify message inconsistencies across those documents. At the same time, they need to brainstorm possible questions and author answers for an upcoming town hall. They will need to spin those Q&As into a new FAQ document. And they need to go online and research comparable programs at similar companies. So they have “Comex,” their communication agent, do that for them. They assign each as a task, and go to their next meeting. When they return, first drafts are complete for them to review.

Or a leader has their agent handle these tasks, in parallel, while they sleep: “Build sales forecast,” “Identify open spots for family vacation in calendar,” “Brainstorm issues for Board meeting based on contents of this OneDrive folder,” “Read and summarize these documents,” “Create briefing report from research literature and online sources on topic X,” “Review contract agreement for strengths and weaknesses.”

This is part of the future for generative AI, and it is coming in months, not years. How quickly organizations choose to adopt that future is the open question, but we expect that the implications of agents for productivity, organization design, and workflows to be meaningful.

A Look Back

To appreciate what generative AI can do, just look back at what it could do.

On occasion we read past editions of Confluence as a way to check our own senses of how quickly generative AI is advancing. It’s almost always a surprise.

18 months ago, for example, we were writing about custom GPTs (which we’ve not written about in many months, as we no longer use them) and that Microsoft had launched an additional version of Copilot (and now it seems like they have five versions of Copilot). A year ago the big news was that OpenAI had launched GPT-4o, a model still in use today (and with recent character troubles) but also a model we never use today as we prefer other models.

To that point, perhaps more notable is that GPT 4.1, 4.1-mini, 4.5, o1 Pro, o3, o4 mini, and o4-mini-high (confused yet?) join and exceed 4o in the OpenAI model library, all released in the last year. As have been Gemini 2.5 and Gemini 2.5 Advanced at Google, Claude 3.5, and Claude 3.7 Sonnet at Anthropic. Oh, and a few iterations of Grok at xAI, and some big open-source models to boot. And if we’re wondering what’s next, the internet rumor telegraph is buzzing with speculation about a possible release of Claude 4 in coming weeks.

A year ago we tested GPT-4o by asking it this question and getting this response:

You are an expert in qualitative analysis. What types of analysis could you run on a set of open-ended survey comments from an all company town hall?

Conducting qualitative analysis on open-ended survey comments from a company town hall involves several methods to ensure comprehensive and insightful findings. Here are key types of analysis you could run:

1. Thematic Analysis

Description: Identify and analyze recurring themes or patterns within the data. Steps:

Familiarization with the data.

Generating initial codes.

Searching for themes.

Reviewing themes.

Defining and naming themes.

Producing the report.

2. Content Analysis

Description: Quantify and analyze the presence of certain words, phrases, or concepts. Steps:

Coding the text into manageable content categories.

Analyzing the frequency and relationships among words or concepts.

Interpreting the context within which these words or phrases appear.

3. Narrative Analysis

Description: Focus on the stories and accounts within the comments to understand how people make sense of events and actions. Steps:

Identify the narrative structure (e.g., setting, characters, plot).

Analyze how stories are constructed and the meaning they convey.

Explore the functions of narratives in the context of the organization.

4. Grounded Theory

Description: Develop a theory grounded in the data through a systematic methodology. Steps:

Open coding: Identify concepts and categories in the data.

Axial coding: Identify relationships between categories.

Selective coding: Integrate and refine the theory.

5. Discourse Analysis

Description: Examine how language is used in the comments to understand social and organizational constructs. Steps:

Identify discursive practices and patterns.

Analyze the power dynamics, identities, and social roles constructed through language.

Contextualize findings within broader social and organizational contexts.

6. Framework Analysis

Description: Use a structured approach to sort and interpret data within a pre-existing framework or one developed from the data. Steps:

Familiarization with the data.

Identifying a thematic framework.

Indexing: Applying the thematic framework to the data.

Charting: Rearranging the data according to the framework.

Mapping and interpretation.

7. Sentiment Analysis

Description: Determine the emotional tone of the comments. Steps:

Use coding or software tools to classify comments as positive, negative, or neutral.

Analyze the distribution of sentiments.

Interpret the overall mood and implications for the organization.

8. Comparative Analysis

Description: Compare different groups' responses (e.g., departments, job roles). Steps:

Segment the data by relevant categories.

Compare themes, sentiments, or narratives across groups.

Interpret differences and their implications.

9. Constant Comparative Method

Description: Compare data continuously to refine categories and identify variations. Steps:

Collect and code data simultaneously.

Constantly compare new data with existing codes and categories.

Refine and adjust categories throughout the analysis process.

10. Lexical Analysis

Description: Analyze the language use and vocabulary within the comments. Steps:

Identify keywords and phrases.

Analyze word frequency and co-occurrence patterns.

Interpret lexical choices in context.

Implementation Tips:

Triangulation: Use multiple methods to cross-verify findings.

Software Tools: Consider using qualitative analysis software (e.g., NVivo, MAXQDA, ATLAS.ti) for coding and analyzing data.

Intercoder Reliability: Ensure reliability by having multiple analysts code the data and compare results.

By employing a combination of these methods, you can gain a deep understanding of the feedback provided during the company town hall, uncovering actionable insights and informing decision-making processes.

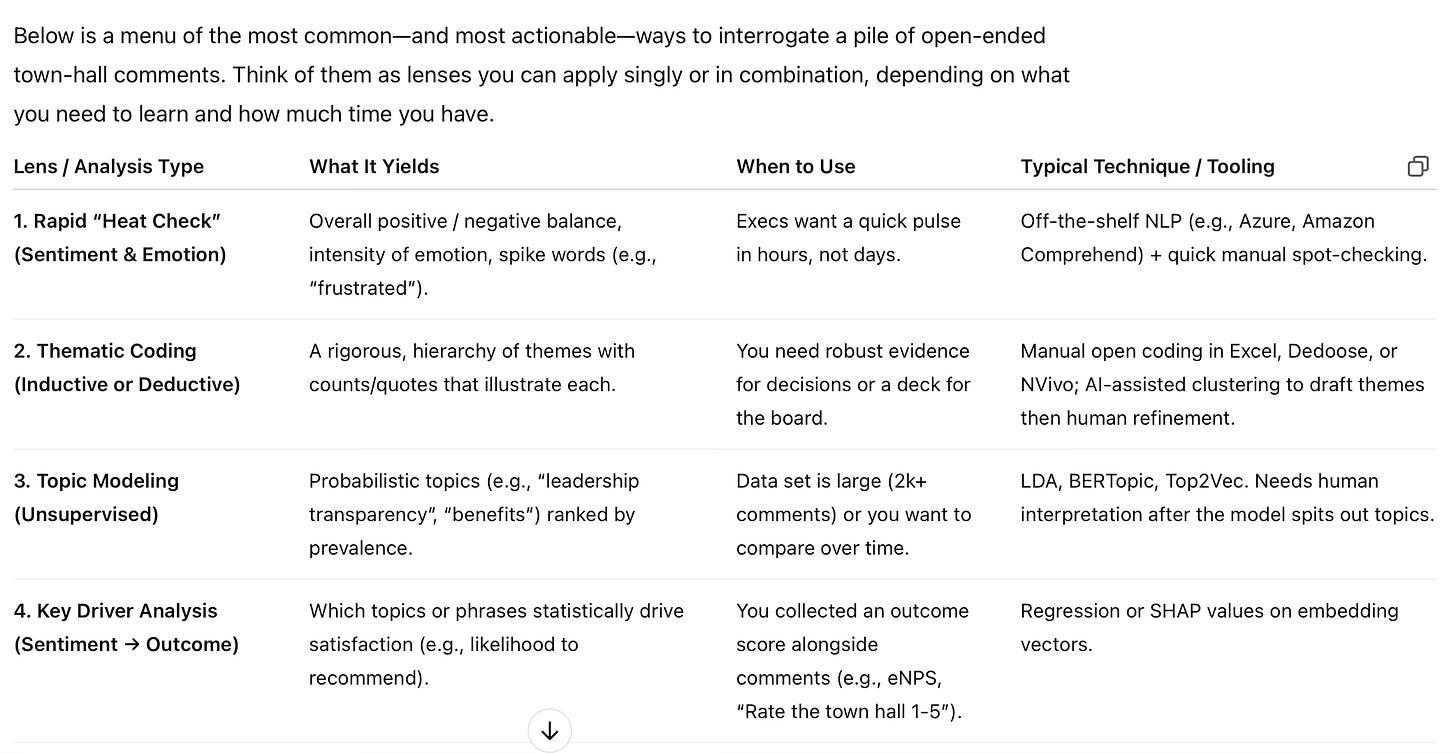

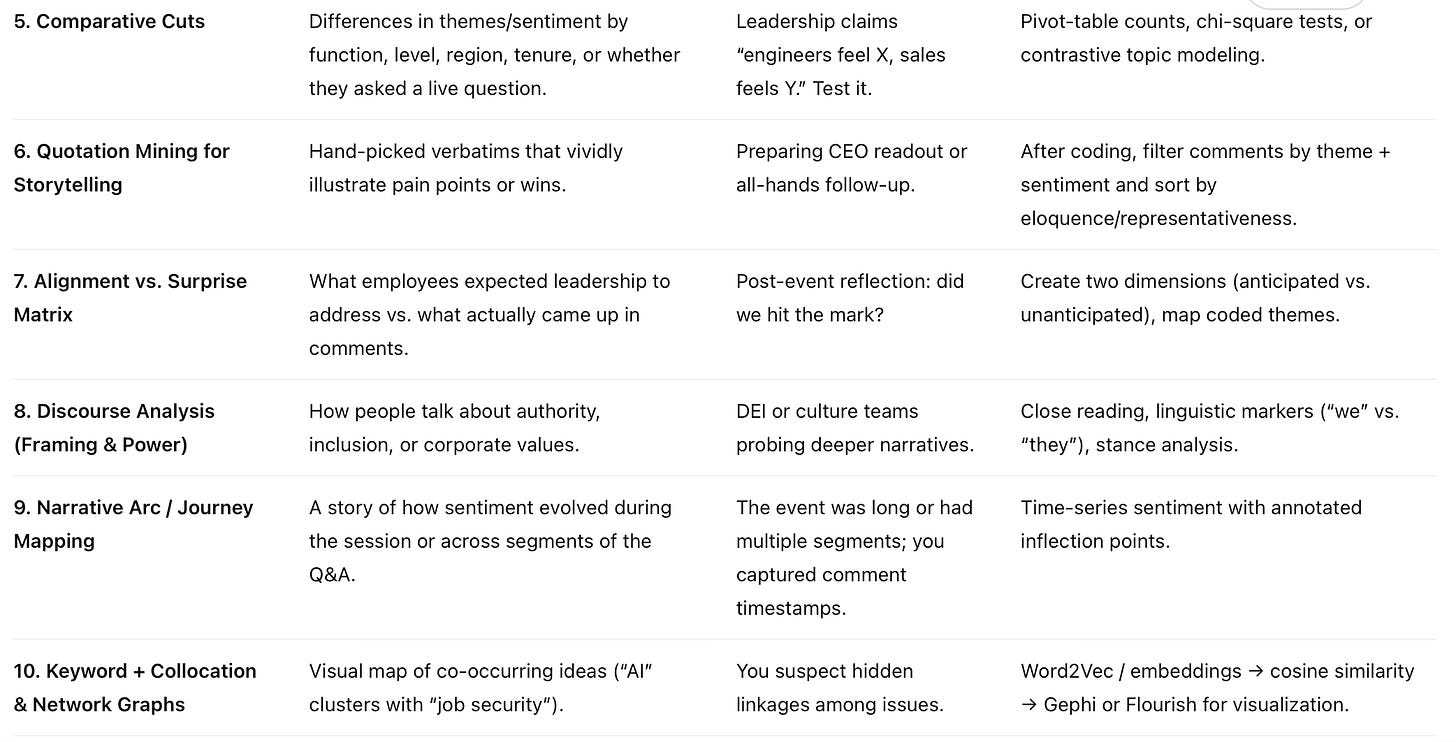

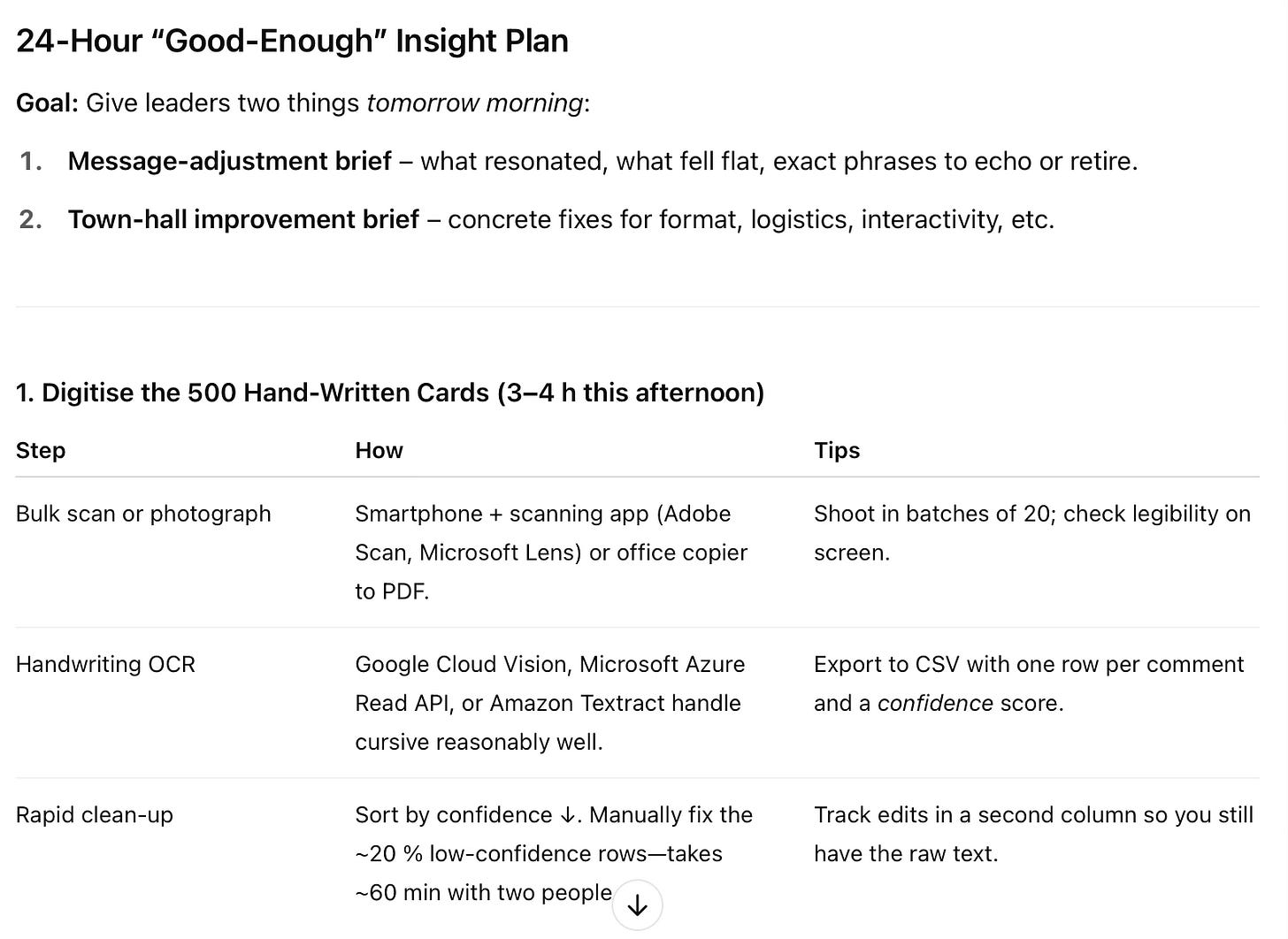

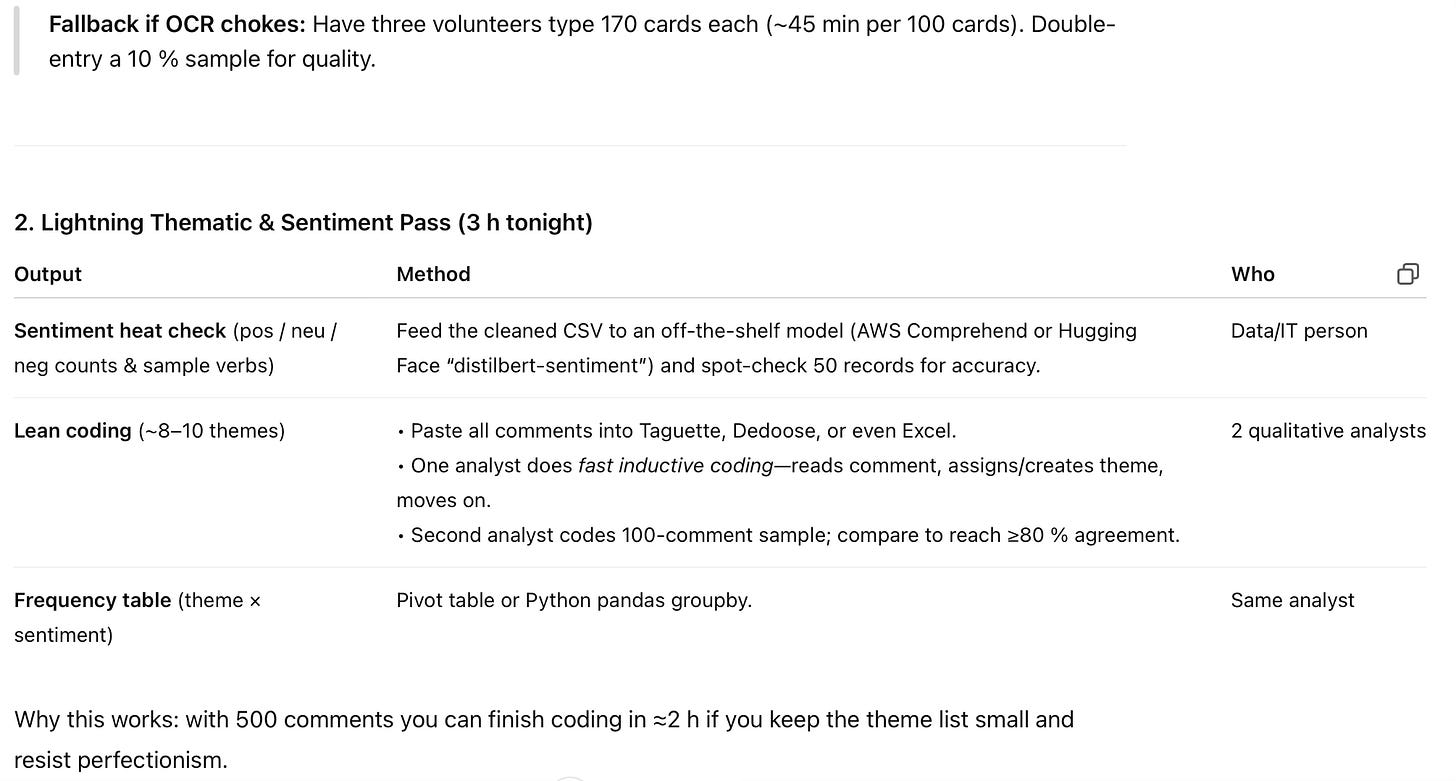

Today we asked the same of o3, and had this exchange, which is difficult for us to paste in here but which you can see in full at this link. o3 started by giving us a detailed table of options:

It then offered...

Practical workflow

Clarify the business question. “What do we want to do with the insights?”

Scope resources & timeline. Manual coding scales linearly; modeling doesn’t—but still needs interpretation.

Choose 1-3 complementary analyses. E.g., Thematic Coding (for depth) + Sentiment (for speed) + Comparative Cuts (for actionability).

Triangulate, don’t rely on one lens. Converging evidence beats a single metric.

Bring it to life. Pair every number with a verbatim quote or illustrative story.

If you tell me more about the size of your dataset, turnaround time, and the decisions riding on the analysis, I can suggest a concrete plan (including tooling and staffing) that fits your constraints.

We thought that would be a fine idea, and provided this context: “500 comments, on hand-written cards. We need results tomorrow. Decisions will be how to adjust message for the leaders and how to improve future town halls.” o3 responded with a “24 Hour ‘Good Enough’” Insight Plan” (its title, not ours):

These two chats are 12 months apart. To us the difference is obvious, and looking back, surprising. Given what we saw this week from OpenAI’s Codex agent, we expect that a similar retrospective this time next year will be just as (if not more) impressive.

The Power of Shared Inquiry and Experimentation

Team experimentation takes individual discoveries to the next level.

Last week we provided a three-step how-to guide for becoming more proficient with generative AI. We ended that piece with a simple directive: “For one week, use it for everything you can think of.” The value of that approach is twofold. First, as we noted last week, it accelerates the development of one’s intuition for “what these models do well, and where they fail,” which is particularly important because “they’re superhuman at some things, and dumb as rocks at others.” Second, this inquiry-based, experimental approach almost always leads to the discovery of powerful new use cases that one may not have discovered otherwise. In our experience, individual experimentation is the most efficient way to identify new use cases for these tools. Once an individual identifies a potential use case, however, applying the collective creativity, brainpower, and diverse perspectives of a team can take it even further.

We experienced this firsthand last week within our firm. We’re always looking for ways that our colleagues and users can get even more value out of ALEX, our AI-powered digital coach. Last week, we convened a few dozen colleagues to practice and experiment with two specific use cases, one of which was to use ALEX to role play a difficult conversation. The premise was simple: provide ALEX with context about an upcoming conversation for which you want to prepare , and then engage in a role-playing conversation with ALEX to practice, anticipate different conversation scenarios, and get feedback on your approach. We knew this was a powerful base use case. But when we approached it as a team, the collective creativity took over and uncovered variations on this use case that were as or more powerful than the original.

One of our colleagues decided to reverse roles. Rather than having ALEX play the role of the other person in the conversation, this colleague had ALEX play her, and then she played the role of the other person. Several other colleagues then adopted this approach and found it a powerful exercise in seeing things from the other’s point of view. Another of our colleagues decided to role play a conversation that did not even involve her, but instead involved a challenging conversation for which she knew one of her coachees was preparing. In her experiment, she took on the role of her coachee and had ALEX take on the role of the coachee’s CEO, and she found that playing out the conversation in this way allowed her to better understand the situation from the coachee’s perspective and uncovered new insights for her to bring to their next coaching conversation. All 30 of us in the conversation benefited from the real-time learning of new ways to use ALEX that none of us had thought of before.

The point is not necessarily that these specific use cases are so powerful (although they are, and we’d encourage all ALEX users to give them a try), but that we quickly discovered them by applying the collective brainpower of the team to an initial use case that we all knew worked. One of the primary differentiators of generative AI relative to other technologies is its generality of capabilities. There’s always another way, another variation of working with these tools, that can open up new possibilities. Focusing a team’s attention on a proven use case (often identified by an individual in their own experimentation) can accelerate the process to not only identify these variations, but to quickly diffuse that knowledge across the team.

What Happens When Generative AI Invents Novel Solutions?

Google’s AlphaEvolve hints at a future where machines add ideas, not just speed.

Google DeepMind’s new AlphaEvolve just found a shortcut nobody had spotted in 55 years, and it did it without a single human hint. The new tool shaved one step off a famous 4x4 multiplication “recipe” from 1969 and freed almost one percent of Google’s global computing power with a new scheduling rule. Tech talk aside, the bottom line is this: AlphaEvolve offers a glimpse of how humans and generative AI might collaborate next.

In the examples above, the agent wrote, tested, and rewrote its own code until it found the new solutions. It works like a never-ending writers’ room. One model drafts code, another critiques, automated tests check the result, and the best lines continue into the next round. DeepMind calls it an “evolutionary coding agent” because it will keep evolving and running until the gains flatten or the compute budget ends.

Though this new frontier is, for now, in the domain of coding and mathematics, it nevertheless opens a door for teams beyond these industries. Picture an agent that rewrites a policy, FAQ, or all-employee message overnight, A/B-tests every version against existing data, and leaves you three proven winners by sunrise. The human role in this scenario will shift from taking the pen to judging which option best fits brand and context.

DeepMind reports that AlphaEvolve compressed weeks of expert model-tuning into days, trimming a full percent off total training time. What might the same efficiency gain look like for a communication team? Perhaps an agent churns through hundreds of drafts while you sleep, and by the morning your team is judging only the three that land best. The goal isn’t fewer writers; it’s writers who spend their energy on intent, nuance, and judgment while machines shoulder the grind. AlphaEvolve appears to be yet another example of how we are likely moving closer to that reality.

We’ll leave you with something cool: Researchers at Carnegie Mellon have created “an AI model that creates physically stable Lego structures from text prompts.”

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.