Confluence for 5.5.24

GPT-4 now has “memory.” Three other gen AI updates of note. Our first impressions of Midjourney’s web interface. A new Substack recommendation.

Welcome to Confluence. Here’s what has our attention at the intersection of generative AI and corporate communication this week:

GPT-4 Now Has “Memory”

Three Other Gen AI Updates of Note

Our First Impressions of Midjourney’s Web Interface

A New Substack Recommendation

GPT-4 Now Has “Memory”

A first move in generative AI being able to remember context across chats.

Several months ago, X/Twitter lit up with conversation about a number of GPT-4 users who had seen a notice forecasting a “memory” for the chat service: the ability for GPT-4 to remember facts and interactions across chats. When we read this we were immediately intrigued. One of the downsides of generative AI tools like GPT-4 is that they only understand the context you’ve provided in a given interaction. ChatGPT’s custom instructions address this to a certain extent, as they extend across all chats, and with custom GPTs you can include context you want to apply to all chats using that GPT, which is extremely helpful (we have a client who has fed a custom GPT all the information and manuals for his rather impressive HiFi system, for example). But these features have limits and, at least so far, are only available for ChatGPT.

Imagine the utility of a generative AI tool that has recall across your chats. When you tell it that your wife doesn’t like pesto, it remembers that forever and accounts for it when you ask for recipes. When you tell it that your lead product is always italicized in text, or who your key internal stakeholders are and what their primary needs are, it remembers that, too. Extend that across hundreds or even thousands of interactions, and you end up with an AI tool that can become much more precise and helpful in what it generates.[1]

So, we were understandably interested a few days back when we learned that “memory” was indeed coming to GPT-4. Several of us have this feature (it’s rolling out to the user base over time), and we can offer a few reactions. The first is that “memory” is probably a bit of an overstatement. “The ability to remember specific facts that you tell GPT-4 to remember or that it chooses to remember on its own” is more accurate. OpenAI’s post on the feature reads:

As you chat with ChatGPT, you can ask it to remember something specific or let it pick up details itself. ChatGPT’s memory will get better the more you use it and you'll start to notice the improvements over time.

Here’s a ChatGPT-generated summary of the key details from that OpenAI post:

Users have complete control over this memory feature. They can instruct ChatGPT to remember or forget specific details conversationally or through settings and can turn the memory feature off entirely.

Users can view, manage, and delete specific memories or clear all memories through the “Manage Memory” settings. Memories are not linked to specific conversations, and deleting a chat does not delete its associated memories.

Memories can be used to improve OpenAI models, but users can opt out of this. Content from Team and Enterprise customers is not used for model training. Additional privacy features include not proactively remembering sensitive information unless explicitly requested.

Users can engage in conversations that do not utilize the memory feature or appear in history through temporary chat settings, ensuring these interactions also do not train OpenAI models.

Users can provide direct guidance to ChatGPT to remember certain details, enhancing personalization and relevance of interactions.

Enterprise and Team account owners can manage memory settings across their organization.

Specific GPTs, such as those designed for book recommendations or greeting card creation, will also have their own memory capabilities. Users need to inform each GPT about relevant details as memories are not shared across different GPTs.

OpenAI plans to expand the availability of the memory feature and will provide updates on a broader rollout.

Only a few members of our team have access to memory, and only for our personal accounts (and we look forward to seeing how this feature rolls out and expands to those on our professional GPT-4 Team account). For communication professionals, we see memory being helpful with style preferences for content generation, facts about specific audiences, editorial preferences, and more. For leaders, we see similar utility in GPT-4 remembering details about organizations, teams, initiatives, and other facts.

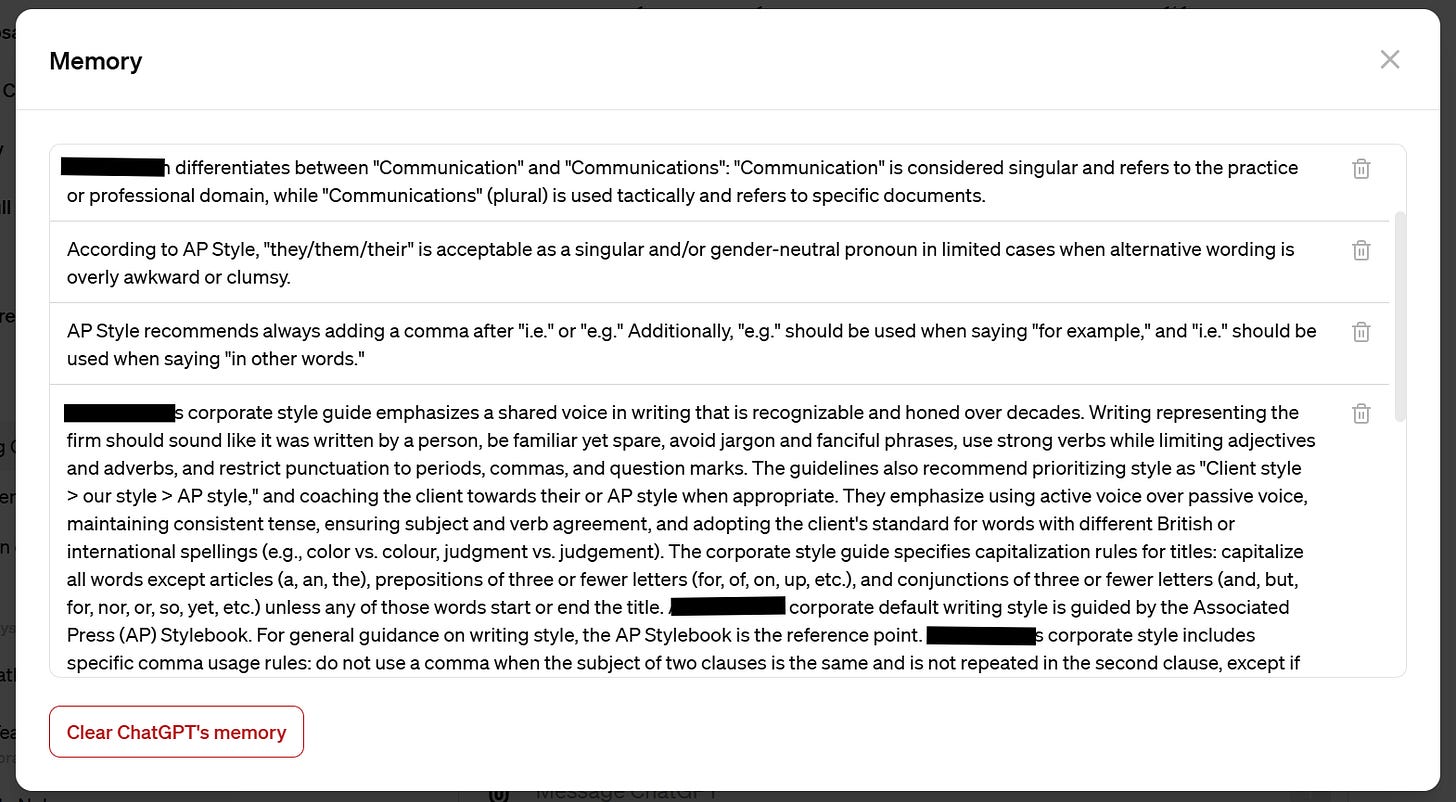

When you ask GPT-4 to remember something it gives you a “Memory Updated” message. In practice, we’ve found GPT-4 can sometimes struggle to add memories offered in long paragraphs, but it’s very good at adding specific memories. It also does a fair job of selecting what to remember from chats if you say “remember this chat.” We also noticed that if we started a chat by saying we were going to give GPT-4 things to remember, it remembered individual items as we added them without our asking it to remember. Here’s a screenshot of the memory settings page after a chat in which one of us asked GPT-4 to remember details from our corporate style guide:

How much can it remember? We don’t really know, but we will be testing the limits to find out. This will be the key variable, because the utility of this type of memory lies in the AI being “smarter” by knowing things that span a wide range of facts and preferences. We also aren’t sure how the memory factors into the size of GPT-4’s context window, although with GPT-4 Turbo having a context window of 128,000 tokens it may not matter much to most users.

We expect that OpenAI is a leader with this feature, and that it will come to other generative AI tools as well. We look forward to it, as context is so essential to generative AI providing helpful and correct output, and the more context it can start with prior to prompting, (presumably) the better.

Three Other Gen AI Updates of Note

Google and Anthropic both expand the potential reach of their models.

Three other updates recently came to the generative AI space, and each speaks to the work the leading generative AI labs are doing to broaden the reach of their chat-based tools.

Google now provides an enterprise-grade plan for Gemini via Google Workspace.

Anthropic announced a Claude app for iOS, which you may find here.

Anthropic also announced a Claude for Teams plan. There is a purchase minimum of five seats at $30 per seat per month, and here’s their intro video:

We have two observations. First, the leading generative AI labs will keep working hard to extend the reach of their models and the tools that use them, both to the consumer and commercial sectors. The broader that reach, and the more people who begin to work with and learn the strengths (and weaknesses) of these models in their day-to-day lives, the more pressure we expect employers will face to make these tools available within their organizations.

Second, keep an eye on Anthropic and Claude. Claude 3 Opus is very, very good. Of the leading models, it’s Claude with which we are most impressed, and it is Claude 3 Opus that we select for our most challenging and important personal and commercial work. The only things Claude 3 Opus lacks, in our view, are some of the features that make GPT-4 so helpful (like memory and custom instructions). Expect a review of Claude’s Team features in the next week or two, and as Claude’s feature set expands, it should continue to be a significant and growing force in this space (until, at least, we see GPT-5).

Our First Impressions of Midjourney’s Web Interface

The new Midjourney “Alpha” web interface is now available to users who have generated 100+ images.

As we’ve mentioned before, Midjourney is our preferred image generation tool, and we use it to generate the majority of the cover images for Confluence, including this week’s. Another aspect of Midjourney that we’ve noted is its clunky, often frustrating user interface based in Discord (a social platform widely used in gaming communities). The friction in using Midjourney until now has been enough to drive many colleagues and clients to other (and, in our opinion, inferior) tools. For months we’ve been looking forward to using Midjourney Alpha, Midjourney’s new standalone web interface, and we finally got access to it this week — along with all other Midjourney users who have generated at least 100 images.

We’ve just begun exploring, but it’s immediately obvious that the user experience in Midjourney Alpha is vastly superior to the Discord-based interface. Today, we’ll note three improvements we noticed within minutes of using Alpha to generate this week’s cover image.

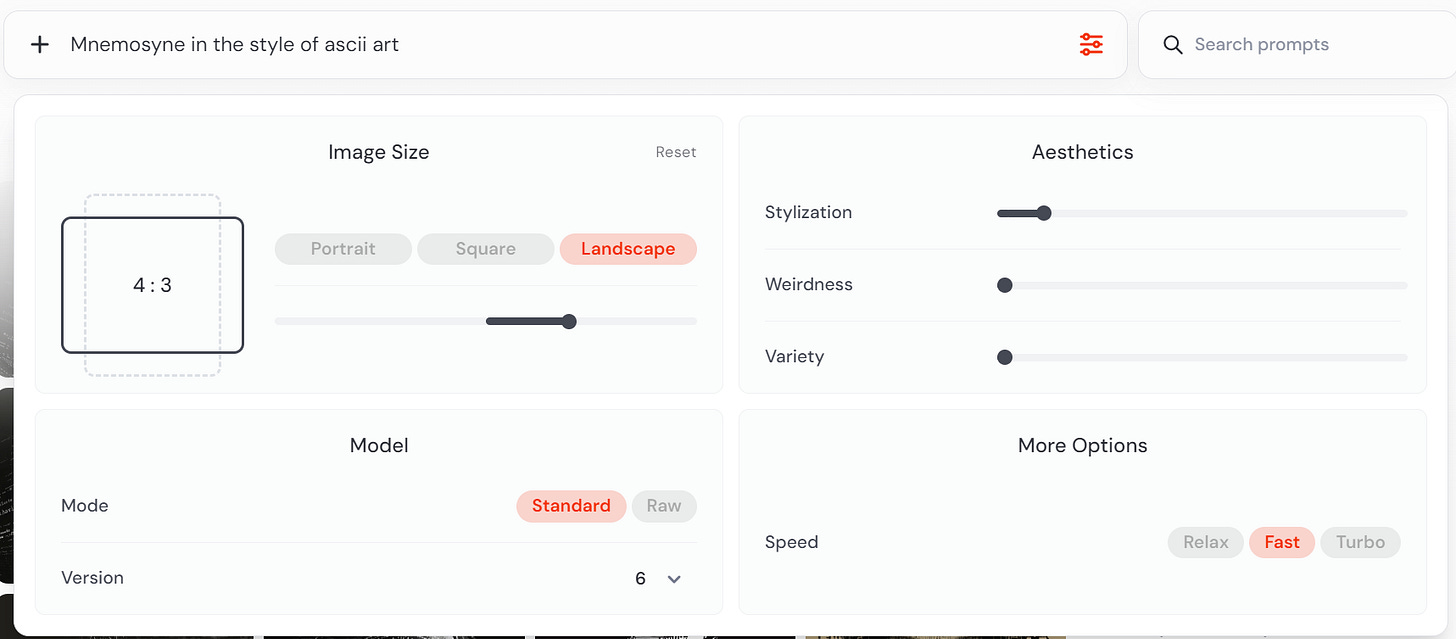

First, when entering a prompt to create a new image, users can choose the parameters of an image via an interactive panel, rather than by entering the information into the prompt itself (which often required research and, when complex enough, could almost feel like coding).

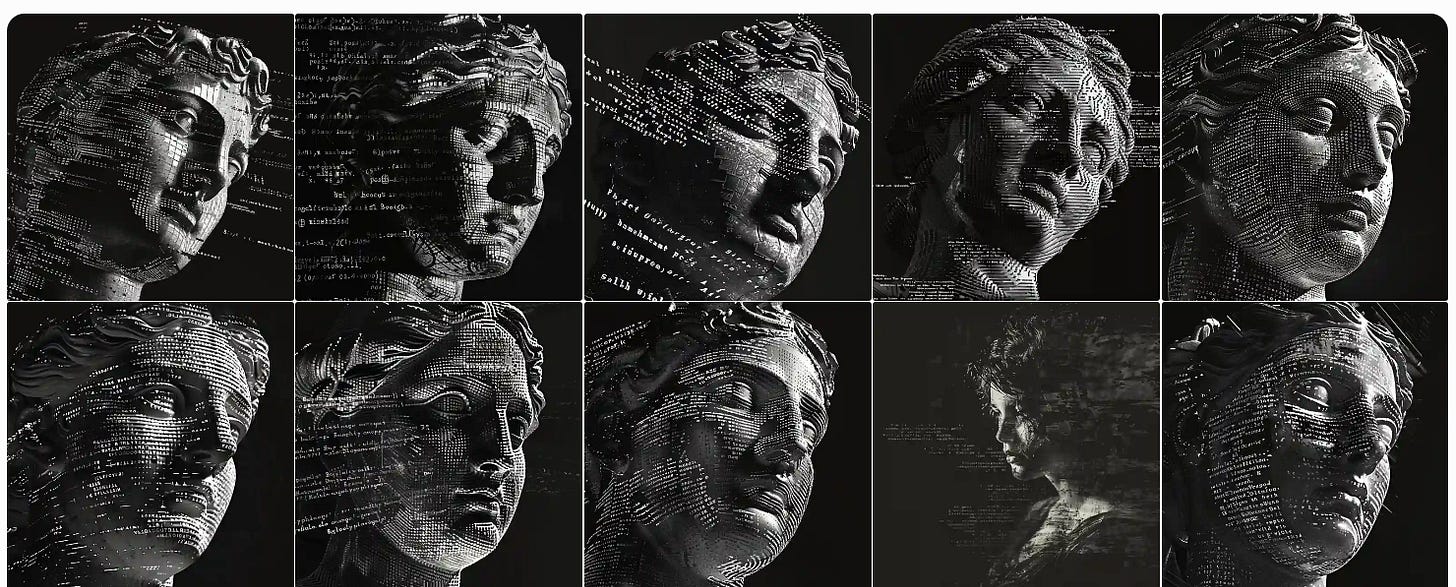

Second, it is much easier to browse the images you’ve created. Below is a snapshot view of some of the variations we generated for this week’s cover image.

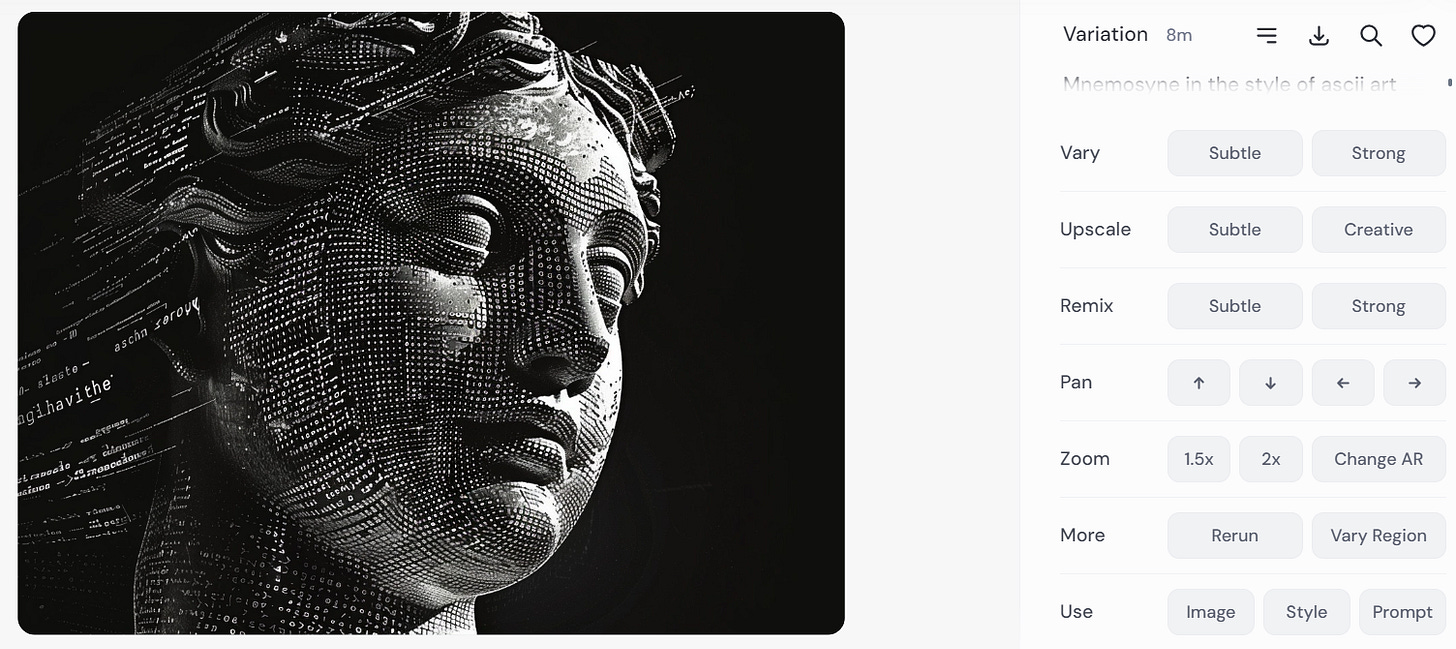

And third, you can simply click any image to open a different panel of options to edit the image.

Based on other user accounts posted online, we recognize we’re just scratching the surface of what’s possible in Alpha. But even with our limited use so far, there is no doubt that the Alpha interface is a game-changer. While it’s available only to Midjourney users who have generated at least 100 images, it’s a matter of time until this improved interface is available to everyone.

A New Substack Recommendation

UCSB Professor Matt Beane’s “Wild World of Work” Substack is worth following.

We’re always on the lookout for people who can make us smarter about the implications and applications of generative AI for individuals and organizations. We’ve written about and referred readers to Daniel Rock, Ethan Mollick, and many others. At the recommendation of Daniel Rock, we recently began following the work of Matt Beane, Assistant Professor in the Technology Management Program at the University of California, Santa Barbara. After reading Matt’s work over the past few weeks, we agree with Daniel’s assessment that Matt’s work is worth following.

Much of the writing on Matt’s Substack, Wild World of Work, focuses on a topic that has been at the forefront of our thinking lately: what the new class of AI technologies means for professional skills. Two recent posts we’d recommend are “Think You’re Skilled? Think Again.” and “The Specter of Skill Inequality.” Here’s an excerpt from the latter (emphasis ours):

But the sociologist in me doesn’t operate on hope, positive exceptions, or possibilities. It sees social forces at work. Situations, practices, tools, institutions, and cultures that make certain outcomes more likely for anyone who gets involved. Deprive someone of resources like money and, on average, they start to behave and think like a poor person. Put someone in a social network with a certain set of political beliefs and, on average, they’ll adopt them. Give us technology that allows us to get rapid productivity gains if we reduce novice involvement in the work? On average, we’ll do it. From this point of view, the “safe” prediction from all the research I’m aware of—mine and others’—is that we will continue to seek immediate productivity from genAI and other intelligent technologies. As individuals succeed with less help, the collaborative bonds between those who can and can’t will fray. We will degrade healthy challenge, complexity, and connection - the necessary components of skill development that comprise the skill code at the core of my upcoming book. The rare few who find shadow learning practices or who are lucky enough to avoid these traps will race ahead with far more skill than the rest of us.

The dynamic we’ve emphasized with bold text above is one that we are taking very seriously. How should we think about the tradeoffs between skill development in junior talent (or, to use Beane’s term, novices) and efficiency? There’s no easy answer, but it’s a question leaders in every organization need to ask in light of the growing capabilities of these tools. Matt is one of the leading researchers on this and other skill-related questions. We’ll be paying close attention to his work, and we recommend our readers do the same.

We’ll leave you with something cool: An amazing thread of animations by machine learning researcher Matt Henderson.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] And possibly, manipulative. Early research shows that the more a generative AI tool understands about a user, the better able it is to persuade a user. This is, of course, true of people, too.
