Confluence for 6.30.24

Introducing ALEX. Lessons in developing for large language models. The multiplier effect of expertise. New research on AI's creative prowess. Using LLMs to critique LLMs.

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

Introducing ALEX

Lessons in Developing for Large Language Models

The Multiplier Effect of Expertise

New Research on AI’s Creative Prowess

Using LLMs to Critique LLMs

Introducing ALEX

A virtual leadership coach from our leadership development practice.

We have mentioned several times in prior issues that some of our team was hard at work developing something that uses generative artificial intelligence. And now we’re ready to talk about that with a bit more detail.

Last Thursday, our leadership development practice announced ALEX, a virtual leadership coach that combines the capabilities of a large language model with a database of roughly one million words of our proprietary knowledge and research on leadership and leadership development. It runs using Claude from Anthropic, though our team and the developers have gone to great lengths to make ALEX engage in authentic coaching conversations very similar to those our coaches engage in with clients (something the default models do not do well).

It’s been an interesting experience, and we’ve learned a lot about large language models and how you can use them for very specific and, we hope, powerful applications. We talk about that more in a separate post below. But for now, if you’d like to give ALEX a try, you can do so at this link. Access is for 24 hours. Go ahead and engage it however you like, although once you’ve registered, there’s advice on how to get the most from ALEX here. We think ALEX is an example of something we expect to see much more of in the months to come: specific AIs trained to serve specific roles and drawing on specific knowledge — in this case, our resident intellectual property on leadership, delivered through authentic conversation and dialogue.

Lessons in Developing for Large Language Models

There’s no better way to learn than by doing.

As noted above, some of our team has been working on ALEX for roughly four months, and the original vision was to take a significant body of proprietary research and content that we have about leadership and create something that could not only be useful for the people in our firm, but for leaders everywhere. We suspect this will be a common use of generative AI in years to come: using large language models as a way to make bespoke or proprietary expertise that’s not readily present in the base models’ training content available to others, in a specific way (We want ALEX, for example, to engage in real dialogue and conversation with users … a very different mode that what the large language models tend to do, at least without guidance.).

It’s been an interesting journey, and while we won’t get into too many of the technical details, we’ve learned some things that we think may be of interest to communication professionals and organizations thinking about integrating these tools with their resident content and expertise.

First, we learned that you can do really complicated and impressive things with this technology. Our team and the developers have worked very hard to create a distinct, more conversational experience for those working with ALEX, one very different from working with the standard models (Gemini, ChatGPT, and Claude). It took a lot of prompting to make that happen, but we believe it’s powerful, and we’re sure we’re only scratching the surface of what’s possible in terms of the interactions and use cases you can create.

Second, we learned that prompting still matters. A lot. While we think that as the models get more sophisticated prompting probably becomes less important — you won’t need to use prompting tips like “think step-by-step” or “I’m paying you $20 a month so do this well,” the prompts that drive ALEX are about 114 pages and 26,000 words long (about 1/3 of that is code, but still). The prompt is so long, and so complex, because of how much guidance we need to give Claude to help it behave the way wish — engaging the user in ways we like (being friendly, matching the user’s conversational style, doing things like changing languages when asked, and more), and not doing things we don’t want it to do (giving lists of advice, giving advice without first getting context, and more). The nuances of how ALEX represents knowledge from its underlying database of our content, and how it balances that with what it knows natively, only makes this more complex. So, while for day-to-day uses prompt engineering may get less important, if you want to do something sophisticated, we think it matters quite a bit, and probably will for some time.

Third, the models are getting very good at referencing specific content rather than generating things from their core training. When the user’s query is about leadership, we have ALEX conduct a vector database search of over a million words of our proprietary content, which it then uses (through Claude) to respond. And this gives it, we think, a much more on-point set of responses than you’d get from asking the same question to any of the default models. While we’re doing this with content about leadership, there are all kinds of things you could use as a database of reference material for a large language model, from style guides to examples of content to employee manuals and more. Until recently, the ability to chat with that content was hit-and-miss, as the models would not always accurately find or represent the search results. But we’ve found Claude to be quite accurate (though not perfect), and we expect it will just get better.

Fourth, these models still have drawbacks. As good as it is at following its complex instructions and integrating our proprietary content, ALEX will still occasionally give advice that comes from its general training and not from our data. As much as we’ve suggested that it shouldn’t say it can do things in the real world, every once in a while it says it can do something like send a user a workbook. As much as we’ve tried to help ALEX not to hallucinate, occasionally it will make things up. These are the byproducts of using a large language model that by necessity is generating text one bit at a time, based on probabilities.

This means you need to be careful about what you ask a model to do. Using generative AI to help employees get authoritative answers to policy questions is probably not a good use case as an LLM could get those facts wrong. Because ALEX engages in conversation and ultimately states opinions, the risk is less. But user guidance is important. Making sure that people understand these strengths and weaknesses is important. Making sure people know to fact check any specific information is important. You simply can’t, with any large language model, know with certainty that it will always do the thing you want it to do — and for now at least, there is no way around this. We think the utility of ALEX is significant enough that with the right guardrails and with the right cautions, users can know what they’re getting and can appropriately treat what ALEX tells them. But this variability is part of the game.

Fifth, things are evolving quickly. Just in the last four months, we switched from working with ChatGPT to Claude Opus, and now we’re developing the next version of ALEX to use Claude 3.5 Sonnet. The cycle times for this technology are remarkably short. Anthropic, for example, forecasts updating its models every few months. For any use case you have for a large language model, part of the ongoing challenge will be staying contemporary. We do think the model labs will work to ensure that each update doesn’t make everyone’s existing use cases obsolete. That said, in side-by-side testing our team has noticed subtle but important differences in how ALEX runs on Claude Opus versus Sonnet 3.5 (we can only describe these as differences in their core personalities), and we have to account for them. So the game will continue to change, and for anything that you’re doing in this space to create value, either for yourself in your own communication work or for others in the organization, expect that keeping your solutions contemporary with the models as they develop will need to be a constant focus.

The Multiplier Effect of Expertise

Domain expertise can amplify the power of generative AI tools.

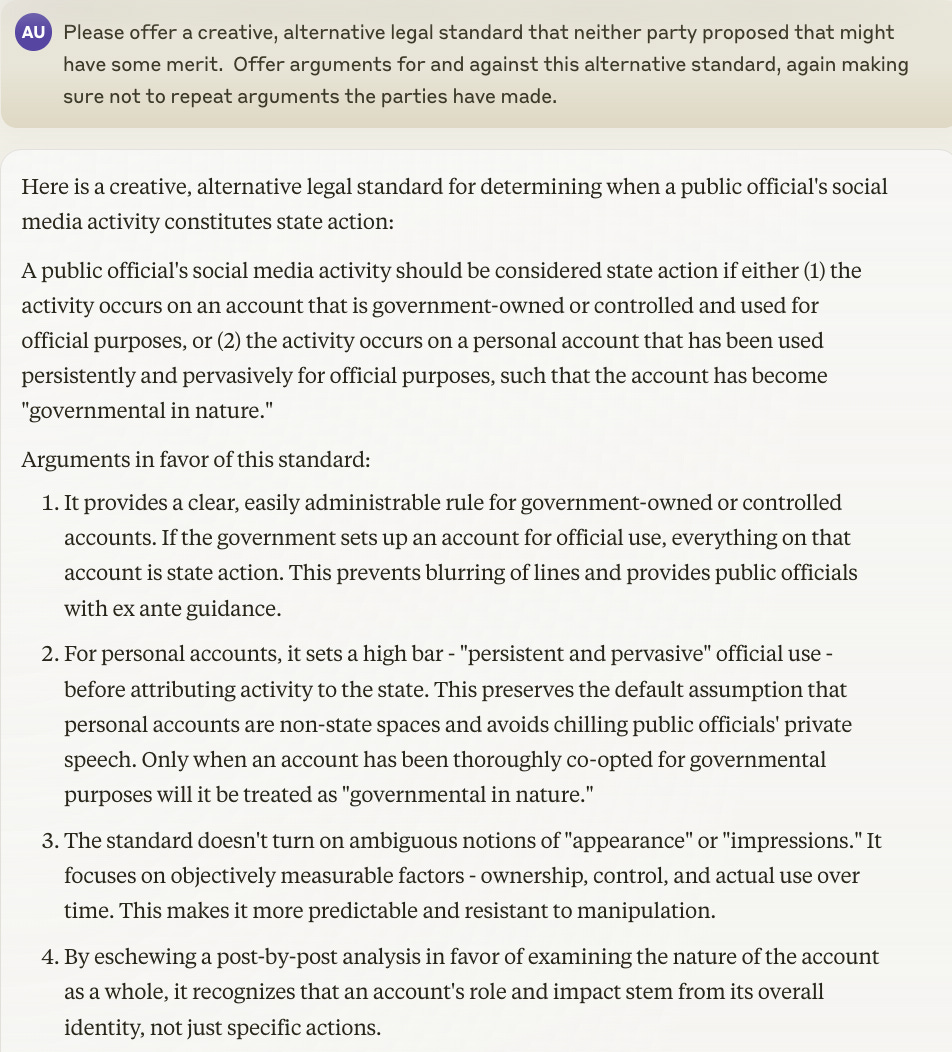

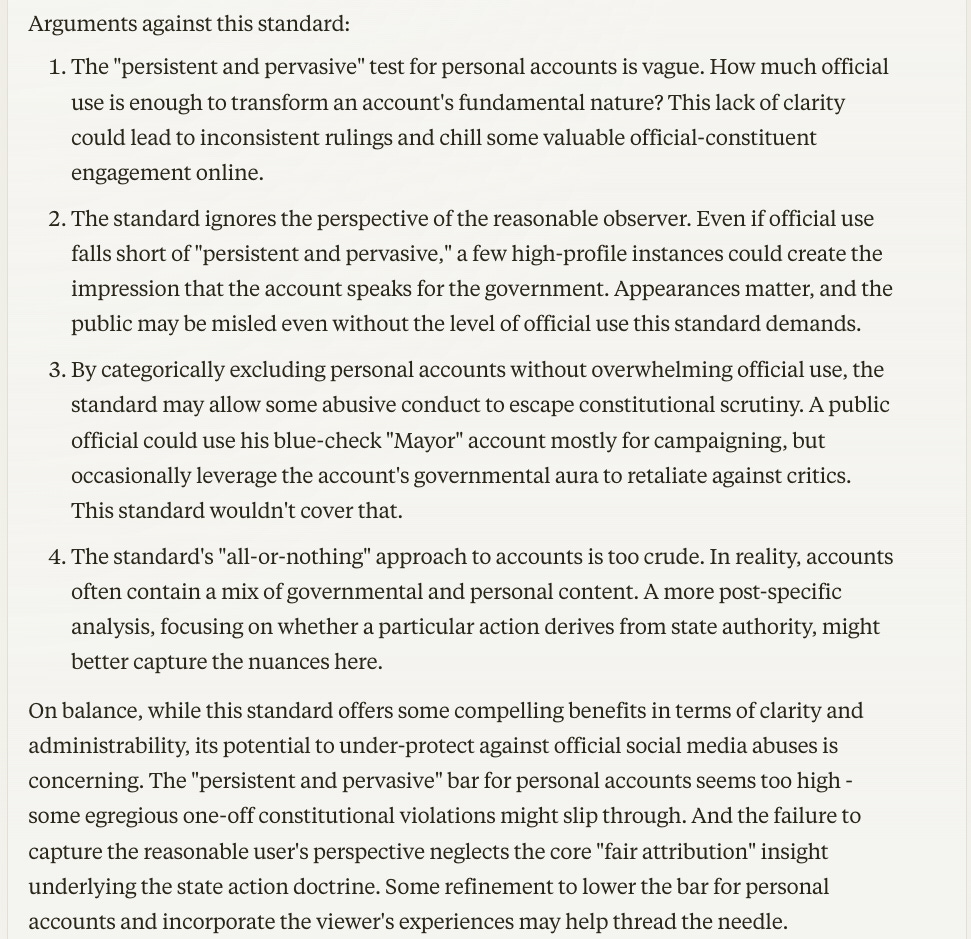

Two weeks ago, legal blogger Adam Unikowsky published a post titled “In AI we trust, part II.” The post — which is worth reading by professionals in any field, not just law — features well over a dozen examples of how Unikowsky used Claude 3 Opus to “conduct empirical testing of AI’s legal ability.” While none of the writers of Confluence are legal experts, we were blown away by the examples of Claude’s output in the post. We shared the post with several attorneys — many of whom are not following developments in AI closely, and some of whom are hardened AI skeptics — and nearly all of them were equally or more impressed. Here’s one of the examples from Unikowsky’s post:

There are about 20 others in the full post. Of the example above, Unikowsky notes:

This is an incredible answer. With no priming whatsoever, Claude is proposing a completely novel legal standard that is clearer and more administrable than anything proposed by the parties or the Court. Claude then offers a trenchant explanation of the arguments for and against this standard. There’s no way a human could come up with this within seconds like Claude did.

We’re not highlighting Unikowsky’s post simply to demonstrate the utility of generative AI tools like Claude for legal work, however. We’re writing about it because we think there’s a more important underlying point here, which is directly applicable to communication professionals (and professionals in any field). We would argue that Unikowsky is able to unlock such powerful legal use cases for Claude because of his legal expertise. It would never occur to one of us to do the things Unikowsky did with Claude because we do not know enough about the practice of law to even consider them.

Unikowsky’s work is a compelling example of how domain expertise can have a “multiplier effect” on the power of AI tools. This point extends to communication expertise and is directly relevant to a point Ethan Mollick makes in his book Co-Intelligence (the emphasis below is ours):

Our new AIs have been trained on a huge amount of our cultural history and provide us with text and images in response to our queries. But there is no index or map to what they know and where they might be helpful. Thus, we need people who have deep or broad knowledge of unusual fields to use AI in ways that others cannot, developing unexpected and valuable prompts and testing the limits of how they work.

— Ethan Mollick, Co-Intelligence, page 116

While law is not an “unusual field,” Unikowsky’s use of Claude is otherwise a perfect illustration of Mollick’s point. He applies his domain expertise to test the limits of Claude’s capabilities, and the results are astounding. For experts in communication, the implications are clear: your expertise can amplify the power of these tools. The only way to find out what that looks like is to try it.

New Research on AI’s Creative Prowess

Measuring the creativity of humans vs. machines.

A recent study by a team of researchers in Canada, “Divergent Creativity in Humans and Large Language Models,” sought to investigate the creative capabilities of artificial intelligence, comparing AI-generated content to that of human participants across various tasks. The results paint an intriguing, if unsurprising picture of AI’s creative potential.

Researchers pitted leading LLMs against 100,000 human participants in a range of creative challenges, including the Divergent Association Task (DAT) and creative writing prompts. Though this research was published before the release of the latest and greatest models, it’s still worth understanding the team’s main findings:

AI excels in divergent thinking. OpenAI’s GPT-4 model significantly outperformed the average human participant on the Divergent Association Task (DAT), a validated measure of creative thinking. Google’s Gemini Pro model, too, performed comparably to humans, while other AI models scored lower.

AI performs strongly in creative writing. Top-performing models demonstrated high levels of semantic creativity in tasks like creating haikus, movie synopses, and flash fiction. Human writers maintained an overall edge, but AI-generated texts showed impressive diversity and originality.

AI demonstrates tunable creativity. Adjusting parameters like the “temperature” setting in GPT-4 significantly impacted creative performance. Higher temperature settings, which essentially means that responses will be more variable, generally led to more diverse and creative outputs, particularly in longer-form writing tasks.

AI models show varied strengths. While GPT-4 was the overall top performer, other models like open-source Vicuna showed surprising strength in certain areas, despite being much smaller. This suggests factors beyond raw computing power influence an AI's creative capabilities.

Last week, we wrote about the impact AI has had on the freelance market — and this research clarifies some of the “why” behind it. As AI capabilities in creative tasks grow, professionals may need to focus more on higher-level creative direction, curation, and uniquely human aspects of creativity that AI still struggles to replicate, along with refining AI outputs. It continues to become increasingly apparent that AI tools likely best serve to augment and inspire human creativity rather than replace it.

The study raises important questions, too, about originality, authorship, and the value of human creativity in an age of increasingly capable AI. Creative industries will need to address these issues proactively and quickly, as things continue to develop at an astounding clip.

Using LLMs to Critique LLMs

Research from OpenAI reinforces key lessons in how to work with AI.

While Anthropic, with Claude 3.5 Sonnet and new features like Artifacts and Projects, has captured most of our attention in recent weeks, OpenAI has released research on what it’s calling CriticGPT that is worth noting.

OpenAI designed CriticGPT, a model based on GPT-4, to identify mistakes made by other language models. The fundamental question OpenAI sought to answer was whether AI could be used to find errors made by AI. The results are both intriguing and instructive for how we work with AI.

When tasked with identifying errors in code, CriticGPT demonstrated a remarkable ability to spot significantly more issues than human reviewers alone. But there was also a downside — the AI also had a tendency to flag non-existent errors. Given our understanding of these models, this finding wasn't surprising. We know that LLMs are predictive, not deterministic, and that they aim to make you happy — if you ask the AI to find errors, it’s going to find them whether they exist or not.

What this research also showed is that the best outcomes came from a cyborg approach, where human reviewers integrated AI reviews into their own critiques. By using CriticGPT to augment human reviewers, the research team found the strongest balance. Human-machine teams of critics and contractors were able to catch similar numbers of bugs as LLM critics while significantly reducing the rate of hallucinated issues compared to AI working alone. The combination of human judgment and AI capabilities proved more effective than either in isolation.

This research offers a few insights that we suggest keeping in mind when working with AI.

First, it demonstrates AI’s potential to improve its own output, reinforcing the value of using AI to critique its own work. This principle applies not only to coding but also to writing and other creative tasks. This is consistent with the advice we heard from Dean Thompson a few weeks ago and something we do nearly every time we work with AI.

Second, it highlights the benefits of integrating AI into our workflows rather than fully outsourcing tasks. While simple tasks might be automated, complex and nuanced work benefits from a collaborative human-AI approach. This gives us another signal that the future lies not in choosing between human or artificial intelligence, but in finding how to combine the strengths of both.

Third, it addresses a common concern among clients who are hesitant to trust AI with accuracy-critical tasks. While caution is understandable, this research suggests that even when AI isn’t perfect, it often performs comparably to, if not better than, human alternatives. With appropriate checks and balances, AI can assist us in making incremental yet meaningful improvements in our work.

OpenAI’s research on CriticGPT reinforces what we’ve seen in practice: AI works best when it enhances human capabilities rather than trying to replace them. The key is finding the right balance — leveraging AI’s strengths while relying on human judgment to refine the results.

We’ll leave you with something cool: Websim.ai creates webpages for any URL you can imagine.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.