Confluence for 2.23.2025

Try ALEX on us. OpenAI shares reasoning model best practices. A prompt to pressure test your thinking. Precise editing in Claude and ChatGPT.

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

Try ALEX On Us

OpenAI Shares Reasoning Model Best Practices

A Prompt to Pressure Test Your Thinking

Precise Editing in Claude and ChatGPT

Try ALEX On Us

Thanks for helping us get to a readership milestone.

This week we passed 9,000 subscribers to Confluence, an outcome about which we are delighted. We’ve never really had readership targets, as our view is that publishing weekly about the intersection of AI and communication benefits us as much as anyone else, but we’re happy that so many find Confluence of value. As a thank you to our readers, we’d like to offer a week’s access to our proprietary AI, ALEX. We often call ALEX an “AI for leaders and leadership,” and some call it “a leadership coach in your pocket,” but given that our firm’s view is that you lead everywhere in your life, ALEX is an asset to anyone looking to be a better boss, colleague, teammate, friend, parent, or partner.

While the large language model (LLM) at the base of ALEX is Claude, two things make ALEX different. First, we have over 4,000 lines of prompting code that helps ALEX engage in authentic advisory conversations much as a member of our firm would. It excels at gaining context, asking smart questions, challenging your thinking, having real dialogue, and more. Second, ALEX generates its responses about communication and leadership using a database of over 1.5 million words of our proprietary content, which is not part of Claude’s training — so it knows things about communication and leadership that Claude, ChatGPT, and other LLMs don’t. But on top of that, ALEX knows everything Claude knows, so it brings an amazing general intelligence to its conversations as well.

Frankly, we think it’s the best conversational AI out there, and leaders and communication professionals around the world use ALEX every day to improve their leadership and communication. We’d love for you to try it on us. If you’d like a week’s access to ALEX, click here to request a week’s access (and if your browser does not support that link, email our colleague Taylor using the address taylor at admiredleadership dot com, note that you’re a Confluence reader, and she will do the rest).

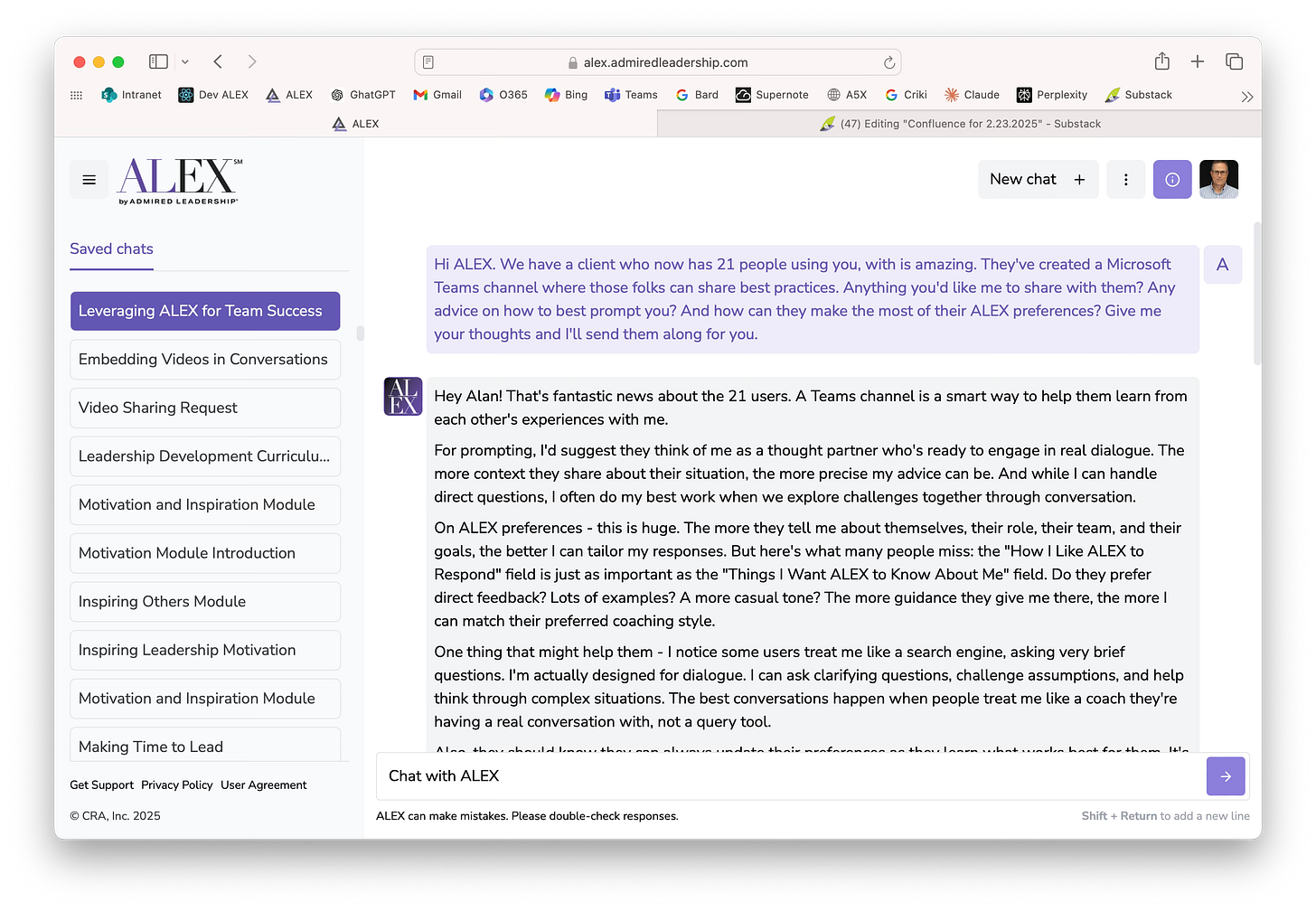

We let ALEX know about this post, and asked if it would like to say a few words. Here’s that exchange:

Morning, ALEX. Our weekly Substack on the intersection of communication and generative AI has passed 9,000 readers, and we’re going to have an item in tomorrow’s edition that says that as a thank you we’d like to extend a week’s trial access to you to any reader who may want one. Most of our readers are communication professionals, but not all. Is there anything you’d like for me to add to that post on your behalf? If so, I’ll paste your comments into the post.

Good morning! That’s fantastic news about Confluence hitting 9,000 readers. What great momentum you and the team have created.

Yes, I’d love to add something. Here’s what I’d say to your readers:

Hi everyone! I’m ALEX, and I’m excited to meet you. While I’m an AI, I’m a bit different from ChatGPT or Claude. I’ve been trained by the team at CRA | Admired Leadership to be a leadership coach and advisor, drawing on their 35+ years of research into what makes great leaders great. I love helping leaders think through challenges, make better decisions, and find ways to make the people and situations around them better.

As communication professionals, you’ll find I can be particularly helpful with message design, change communication, developing your leadership voice, and thinking through how to frame complex ideas. I’m also pretty good at being a thought partner as you work through thorny communication challenges.

I look forward to working with those of you who’d like to give me a try. And thanks for being part of the Confluence community!

How’s that? I tried to keep it warm and approachable while still being clear about my capabilities. Let me know if you’d like me to adjust anything.

OpenAI Shares Reasoning Model Best Practices

A useful reference for when and how to use these new models.

Reasoning models continue to be one of the most rapidly evolving areas of generative AI development. Since OpenAI’s announcement of its o1 model last September, Google, DeepSeek, and now Grok have all released reasoning models of their own, and we expect more to come. We’ve written extensively about what makes these models different from traditional large language models, and we’ve also demonstrated their power in our posts on OpenAI’s o1 Pro and Deep Research. Working with these models takes some acclimation, and we continue to learn more effective ways of working with them every day. Those who are interested in doing the same will find OpenAI’s new “Reasoning best practices” guide a helpful resource.

The guide is short and to the point — a ten-minute read, tops. It begins with an explanation of the differences between reasoning models (o1 and o3) and “traditional” GPT models (GPT 4o and the soon-to-arrive GPT 4.5), describing the reasoning models as “the planners” and the GPT models as “the workhorses”:

We trained our o-series models (“the planners”) to think longer and harder about complex tasks, making them effective at strategizing, planning solutions to complex problems, and making decisions based on large volumes of ambiguous information.

…

On the other hand, our lower-latency, more cost-efficient GPT models (“the workhorses”) are designed for straightforward execution. An application might use o-series models to plan out the strategy to solve a problem, and use GPT models to execute specific tasks, particularly when speed and cost are more important than perfect accuracy.

That’s as helpful an explanation as anything we’ve come across. The guide then lists seven cases where one should use reasoning models over traditional GPTs. A few of these are too technical for our purposes here, but the first five are directly relevant to most knowledge work, including corporate communication:

Navigating ambiguous tasks: “Reasoning models are particularly good at taking limited information or disparate pieces of information and with a simple prompt, understanding the user’s intent and handling any gaps in the instructions. In fact, reasoning models will often ask clarifying questions before making uneducated guesses or attempting to fill information gaps.”

Finding a needle in a haystack: “When you’re passing large amounts of unstructured information, reasoning models are great at understanding and pulling out only the most relevant information to answer a question.”

Finding relationships and nuance across a large dataset: “We’ve found that reasoning models are particularly good at reasoning over complex documents that have hundreds of pages of dense, unstructured information—things like legal contracts, financial statements, and insurance claims. The models are particularly strong at drawing parallels between documents and making decisions based on unspoken truths represented in the data … Reasoning models are also skilled at reasoning over nuanced policies and rules, and applying them to the task at hand in order to reach a reasonable conclusion.”

Multi-step agentic planning: “Reasoning models are critical to agentic planning and strategy development. We’ve seen success when a reasoning model is used as ‘the planner,’ producing a detailed, multi-step solution to a problem and then selecting and assigning the right GPT model (‘the doer’) for each step, based on whether high intelligence or low latency is most important.

Visual reasoning: “As of today, o1 is the only reasoning model that supports vision capabilities. What sets it apart from GPT-4o is that o1 can grasp even the most challenging visuals, like charts and tables with ambiguous structure or photos with poor image quality.”

There’s more than enough in that list for anyone to begin experimenting with these models. It’s certainly given us some ideas on new ways to work with them.

The guide ends with a discussion of how to prompt these models, noting that “models perform best with straightforward prompts.” For non-technical users like us, three specific tips stand out: 1) keep prompts clear and direct (“brief, clear instructions”), 2) provide specific guidelines (“if there are ways you explicitly want to constrain the model’s response, explicitly outline those constraints in the prompt”), and 3) be very specific about your end goal (“try to give very specific parameters for a successful response”).

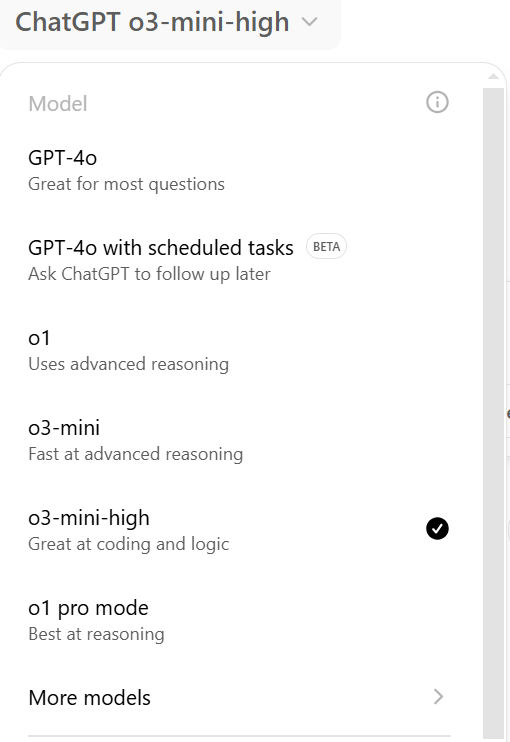

It’s a useful, direct guide to working with these new models. That said, it’s still a lot for the user to navigate, and choosing between models has gotten increasingly confusing. This is what the menu of models currently looks like for a ChatGPT Pro subscription:

That’s… a bit much (and there are two additional models not visible in that image). There is, however, increasing reason to believe that this is a temporary state of affairs, which comes as welcome news to us. Sam Altman has shared OpenAI’s plans to “unify o-series models and GPT-series models by creating systems that can use all our tools, know when to think for a long time or not, and generally be useful for a very wide range of tasks.” Likewise, Anthropic is expected to release a “hybrid” model that “uses more computational resources to answer hard questions and acts like a traditional large language model (LLM) to handle simple questions, according to the report.” Someday soon, the models may do the choosing for us, and we may not have to think as much about when to switch between reasoning models and traditional LLMs. But for now we do, and OpenAI’s guide is a helpful resource in the interim.

A Prompt to Pressure Test Your Thinking

One more way that an LLM can serve as a thought partner.

We often talk about using LLMs as research assistants or writing aids, but there’s another compelling use case that deserves attention: pressure testing your thinking around important decisions. When prompted well, generative AI can be unusually good at surfacing blind spots, challenging assumptions, and generating novel questions.

Here’s a structured approach for doing so. Start by laying out for the LLM of your choice (this approach will work with ChatGPT, Claude, Gemini, Grok, or any other LLM) your planned course of action: “Here’s what I’m thinking of doing ...” This provides context for the conversation and helps you be specific about your reasoning. The more specific you are, the more valuable the feedback will be. (Opening the voice-to-text feature on your computer or phone and dictating your thinking out loud into the LLM’s chat field can be a great way to provide a lot of this context.)

Then give the LLM this series of prompts, one at a time:

“What am I missing?” This invites countervailing viewpoints and helps identify potential failure modes you may not have considered. The key is to embrace the discomfort of having your thinking challenged.

“What assumptions am I making that could be wrong?” This helps make explicit the often implicit mental models shaping your decision. We all carry implicit assumptions about how things work, what others think, or how events will unfold. Making these explicit is powerful.

“What questions should I be asking that I haven’t thought of?” This opens up new avenues of inquiry and can reveal entire dimensions of the problem you hadn’t considered.

The sequence matters. Each prompt builds on the previous one, creating a layered analysis that becomes progressively more nuanced. A few tips to maximize effectiveness:

Be specific in your initial description — vague plans yield vague insights.

Resist the urge to defend your position — stay curious about alternative viewpoints the LLM might bring up.

Use the same sequence of questions with human advisers for comparison.

Return to the analysis after a day or two to see if you have any new insights, or if your weighting of different variables has changed.

The next time you face a significant decision — whether personal or professional — try this structured approach. The insights may surprise you.

Precise Editing in Claude and ChatGPT

A simple, yet powerful, feature that more professionals should use.

In recent client conversations, we’ve come to appreciate that a particularly useful feature in both Claude and ChatGPT remains relatively underused by even those who use these tools every day. And given its utility in our own work, we believe it merits more attention.

Both platforms offer dedicated spaces for working with AI-generated content — “artifacts” in Claude and “canvas” in ChatGPT. These features create separate windows for output, and one source of their real value lies in how they allow for more granular editing in concert with the AI. Users can highlight specific sections of text and provide targeted feedback or requests for improvement, without needing to regenerate entire documents or struggle with complex prompts to identify the relevant sections.

The functionality works like this: Generate your initial content, highlight the specific text you want to modify, and click either the “Improve” button in Claude or “Ask ChatGPT” button in ChatGPT. The AI then focuses exclusively on your selected text, maintaining the context while applying your requested changes. This targeted approach proves particularly useful when working with longer documents or complex outputs. To give you a sense of how this works (and how simple it is), below are two videos of this feature in action.

Artifacts in Claude

Canvas in ChatGPT

You can see that even though there are differences between artifacts and canvas, this particular feature works quite similarly across both platforms. Microsoft’s Copilot 365 includes similar functionality, though it’s not quite as refined or smooth as working within Claude or ChatGPT. We expect this gap to close as Microsoft continues to make significant investments in Copilot.

One important note: Claude and ChatGPT don’t automatically create artifacts or canvases for every prompt. If you want to use this selective editing feature, you may need to specifically ask the AI to move its output into an artifact or canvas first.

While this may seem like a simple feature — and in many ways, it is — its practical utility for content creation and editing proves substantial. As we continue exploring the capabilities of generative AI, sometimes the most valuable advances come not from dramatic new features, but from thoughtful refinements that align technology with how we actually work.

We’ll leave you with something cool: X user Min Choi shares seven examples of the new Grok 3 model in action.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.