Confluence for 1.28.24

The impact of prompting strategies on idea diversity. SAP cuts jobs citing AI as justification. Will generative AI make everyone a manager? Engaging multiple GPTs in the same chat.

Before we begin, we will note that we’re leading the third instance of our seminar Navigating the Frontier: Generative AI Insights and Applications for Communication Professionals this coming February 14th and 15th. This is a virtual seminar spread over two days, and it provides a comprehensive, hands-on exploration of generative AI technologies with deep dives into uses for content generation, editorial, summarization and analysis, and thought partnership, with special consideration of human factors including quality assurance and talent development. These seminars have been popular, and space is limited. If you’d like to learn more see this one-pager or email Jen Ehly at jehly@crainc.com. We’d enjoy seeing you there.

With that said, here’s what has our attention at the intersection of generative AI and communication this week:

The Impact of Prompting Strategies on Idea Diversity

SAP Cuts Jobs Citing AI as Justification

Will Generative AI Make Everyone a Manager?

Engaging Multiple GPTs in the Same Chat

The Impact of Prompting Strategies on Idea Diversity

A working paper from Wharton provides insight into using ChatGPT as a creative resource.

We often engage in conversations with clients in which they are dismissive of generative AI tools because in using them they’ve been unimpressed with the output. “I asked it to do X, and it wasn’t very good,” is the refrain, and they walk away from the technology as a result. We think this is a legitimate but short-sighted reaction, and we find it often has its roots in the tool they are using, the task they’re asking of the technology, or the prompt by which they made the ask (and sometimes, all three). Our advice in response is, “Use GPT-4 or another leading frontier technology, learn what it is good and bad at and use it accordingly, and develop strong prompting skills because the prompt really matters.”

With an eye toward developing strong prompting skills, we read with interest “Prompting Diverse Ideas: Increasing AI Idea Variance,” a new paper from researchers at The Wharton School (Meincke, Mollick, and Terwiesch). It presents a nuanced study on using AI (and GPT-4 in particular) to improve the productivity and quality of the idea generation process through different prompting strategies. The findings suggest that while AI’s capacity for idea generation is inherently limited, techniques like chain-of-thought prompting can improve the diversity of its outputs, and that with thoughtful prompt design AI can supplement, though not replace, human creativity in brainstorming and ideation. We find this true in our own experience, and we use generative AI as a means of augmenting our creativity nearly every day.

We had our Digest Bot GPT (which you may use here) create a summary of the paper, which is in the footnotes [1]. The short story is that the research team tested several prompting methods to measure their effect on ideation:

Minimal Prompting: This strategy involved providing the AI with basic, straightforward prompts without additional context or instructions. The aim was to observe the baseline performance of the AI in idea generation, serving as a control for comparison with other, more complex prompting methods.

Persona Adoption: Here the team prompted AI to adopt a specific persona or role (“assume you are Steve Jobs” for example), simulating a particular type of user or stakeholder. This method was meant to diversify the AI’s perspective, potentially leading to a broader range of ideas by considering different viewpoints or needs. (We almost always ask the AI to assume a persona in our own work, for what it’s worth.)

Sharing Creativity Techniques: In this approach, the research team asked the AI to use specific creativity techniques or methods. These could involve instructing the AI to apply certain brainstorming rules, creativity frameworks, or problem-solving methodologies. The goal was to see if embedding structured creative processes within the AI’s responses can yield a more diverse set of ideas.

Chain-of-Thought (CoT) Prompting: This strategy involves prompting the AI to explicitly articulate its thought process step by step. By doing so, the idea is to encourage a deeper, more nuanced exploration of potential ideas, leading to greater diversity in the outcomes.

In testing these approaches, the research team found that GPT-4 without special prompting underperforms people in generating diverse ideas. They also found, though, that prompt engineering can substantially improve the diversity of ideas AI generates, with chain-of-thought prompting creating a diversity of ideas nearly as high as that produced by a group of humans.

What is chain-of-thought prompting? It’s either asking the AI to complete a series of tasks one at a time, using a series of prompts, or asking the AI in a single prompt to work through a series of steps. Here’s an example from the paper:

Base Prompt:

Generate new product ideas with the following requirements: The product will target college students in the United States. It should be a physical good, not a service or software. I'd like a product that could be sold at a retail price of less than about USD 50. The ideas are just ideas. The product need not yet exist, nor may it necessarily be clearly feasible. Number all ideas and give them a name. The name and idea are separated by a colon. Please generate 100 ideas as 100 separate paragraphs. The idea should be expressed as a paragraph of 40-80 words.

Chain of Thought Prompt:

Generate new product ideas with the following requirements: The product will target college students in the United States. It should be a physical good, not a service or software. I'd like a product that could be sold at a retail price of less than about USD 50. The ideas are just ideas. The product need not yet exist, nor may it necessarily be clearly feasible. Follow these steps. Do each step, even if you think you do not need to. First generate a list of 100 ideas (short title only) Second, go through the list and determine whether the ideas are different and bold, modify the ideas as needed to make them bolder and more different. No two ideas should be the same. This is important! Next, give the ideas a name and combine it with a product description. The name and idea are separated by a colon and followed by a description. The idea should be expressed as a paragraph of 40-80 words. Do this step by step!
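For readers who send prompts through code rather than a chat window, the difference between the two prompts above can be sketched as a small template. This is a minimal illustration in Python, not something from the paper: the function names `build_base_prompt` and `build_cot_prompt` and their parameters are our own hypothetical choices, and the wording is abridged from the paper's example. The point it shows is that the chain-of-thought version carries the same requirements and simply adds explicit steps for the model to work through.

```python
def build_base_prompt(requirements: str, n_ideas: int) -> str:
    """Single-shot prompt: state the requirements and ask for the ideas directly."""
    return (
        f"Generate new product ideas with the following requirements: {requirements} "
        "Number all ideas and give them a name. "
        f"Please generate {n_ideas} ideas as {n_ideas} separate paragraphs."
    )


def build_cot_prompt(requirements: str, n_ideas: int) -> str:
    """Chain-of-thought prompt: same requirements, plus explicit steps to follow."""
    steps = [
        f"First, generate a list of {n_ideas} ideas (short title only).",
        "Second, go through the list and determine whether the ideas are different "
        "and bold; modify the ideas as needed to make them bolder and more different. "
        "No two ideas should be the same.",
        "Next, give the ideas a name and combine it with a product description.",
    ]
    return (
        f"Generate new product ideas with the following requirements: {requirements} "
        "Follow these steps. Do each step, even if you think you do not need to. "
        + " ".join(steps)
        + " Do this step by step!"
    )


# Abridged requirements, for illustration only.
requirements = (
    "The product will target college students in the United States. "
    "It should be a physical good, not a service or software."
)
base_prompt = build_base_prompt(requirements, 100)
cot_prompt = build_cot_prompt(requirements, 100)
print(cot_prompt)
```

Swapping in a different `requirements` string (as we do with our transparency example later in this section) leaves the step-by-step scaffolding untouched, which is what makes the pattern easy to reuse.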

The research team found “that humans still seem to have a slight advantage in coming up with diverse ideas compared to state of the art large language models and prompting.” They also found “that the overlap that is obtained from different prompts is relatively low. This makes hybrid prompting, i.e., generating smaller pools of ideas with different prompting strategies and then combining these pools, an attractive strategy.”
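To make the hybrid idea concrete, here is a rough sketch of combining pools from different prompting strategies and checking how similar the resulting ideas are to one another. It rests on several assumptions of ours: the paper's summary reports cosine similarity as the diversity measure, but this sketch compares simple word counts rather than whatever text representation the researchers used, the 0.8 threshold is arbitrary, and every function name and sample idea here is hypothetical.

```python
import math
import re
from collections import Counter
from itertools import combinations


def to_vector(text: str) -> Counter:
    """Bag-of-words counts (a crude stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (near 1.0 = same wording)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def combine_pools(pools: dict[str, list[str]], threshold: float = 0.8) -> list[str]:
    """Merge idea pools, skipping any idea too similar to one already kept."""
    kept: list[str] = []
    for ideas in pools.values():
        for idea in ideas:
            vec = to_vector(idea)
            if all(cosine_similarity(vec, to_vector(k)) < threshold for k in kept):
                kept.append(idea)
    return kept


def average_pairwise_similarity(ideas: list[str]) -> float:
    """Mean cosine similarity over all pairs; lower suggests a more diverse pool."""
    pairs = list(combinations(ideas, 2))
    if not pairs:
        return 0.0
    total = sum(cosine_similarity(to_vector(x), to_vector(y)) for x, y in pairs)
    return total / len(pairs)


# Hypothetical mini-pools standing in for output from two prompting strategies.
pools = {
    "persona": ["Collapsible laundry hamper backpack", "Desk lamp with phone charger"],
    "chain_of_thought": ["Desk lamp with phone charger", "Noise-masking study visor"],
}
combined = combine_pools(pools)
print(len(combined), round(average_pairwise_similarity(combined), 3))
```

Word counts only catch ideas that share vocabulary, so two differently worded versions of the same idea would slip through; a real workflow would likely swap `to_vector` for an embedding model and keep the rest of the structure.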

So, if a tool like GPT-4 can only equal human performance in idea generation, why would you use it? One reason is to benefit from a perspective different from your own, but another, and perhaps more important, reason is to save time. In the study the research team was generating between 3,000 and 4,000 ideas. GPT-4 can do this quickly. Here’s an exercise for you to try: Sit down and come up with 20 ideas for something. Here are a few options: 20 ideas for how to create greater transparency between leadership and employees in your organization; 20 ideas for how to get more daily fitness into your routine; 20 ideas for how to improve your relationship with your child / friend / partner; 20 ideas for how to improve your skill in AI. We think you will find that the first seven or eight ideas come easy, the next four or five are a bit tougher, and that ideas 15-20 are a struggle. Get some friends or colleagues together to help, and it gets a lot easier, but it also takes significant time.

As an example, we gave GPT-4 this prompt:

Generate new ideas with the following requirements: The goal is to create greater transparency between leadership and employees in our organization. It is a technology firm with 60,000 employees in offices across the world, with HQ in Chicago. These should be practical ideas for a busy workforce. They should be credible to an audience that spans engaged to skeptical. They should afford both in-office interaction and work-from-home. The ideas are just ideas. They need not yet exist, nor may they necessarily be clearly feasible. Follow these steps. Do each step, even if you think you do not need to. First generate a list of 20 ideas (short title only) Second, go through the list and determine whether the ideas are different and bold, modify the ideas as needed to make them bolder and more different. No two ideas should be the same. This is important! Next, give the ideas a name and combine it with a description. The name and idea are separated by a colon and followed by a description. The idea should be expressed as a paragraph of 40-80 words. Do this step by step!

Here’s the final output:

Global Virtual Reality Town Halls: A series of live, interactive town hall meetings conducted in virtual reality, allowing employees worldwide to join a 3D virtual space where they can interact with leadership in a dynamic and immersive environment.

Executive Day-in-the-Life Vlogs: Short, candid video blogs created by members of the executive team, showcasing their daily activities, challenges, and insights into company decisions, fostering a personal connection with employees.

Grassroots Q&A Webinars: Monthly webinars where employees at all levels are encouraged to host sessions, asking direct questions to the leadership, ensuring a bottom-up approach to communication.

Real-Time Corporate Transparency Dashboards: Live-updated dashboards accessible to all employees, displaying key metrics, ongoing project statuses, and financial health of the organization, fostering a culture of openness.

Global Exchange Shadowing Program: An initiative allowing employees to virtually shadow colleagues in different departments or international offices, promoting understanding and transparency across the company’s diverse operations.

Surprise Executive Office Hours: Unannounced, open virtual meetings where executives are available to answer questions, discuss concerns, or simply chat with employees, encouraging spontaneous and genuine interactions.

Deep-Dive Anonymous Feedback System: A secure, anonymous platform for employees to provide in-depth feedback on any aspect of the organization, with summaries shared with all staff to ensure voices are heard and acted upon.

Quarterly Transparency Performance Reports: Detailed, quarterly reports prepared by leadership, discussing achievements, challenges, and future plans, with an emphasis on accountability and candid communication.

Collaborative Company Evolution Wiki: A dynamic, employee-edited wiki that tracks the history and ongoing evolution of the company, encouraging everyone to contribute their perspective and knowledge.

Mission Progress Live Streams: Regular live stream updates focused on the progress towards company missions and goals, featuring different teams and offering a real-time look into the company’s journey.

Immersive Virtual Reality Office Experiences: Virtual reality experiences that allow remote employees to ‘visit’ offices around the world, promoting a sense of connection and understanding of different work environments and cultures.

Executive No-Holds-Barred Ask Me Anything Sessions: Regular, unscripted Q&A sessions with executives where employees can ask any question, with the commitment that it will be answered openly, fostering a culture of directness and honesty.

AI-Powered Suggestion Analysis: A system where employee suggestions are analyzed by AI for emerging themes and insights, with results shared company-wide to demonstrate the value placed on employee input.

Behind-the-Scenes Company Culture Podcast: A podcast series giving a behind-the-scenes look at different departments, projects, and the people behind them, narrated by employees and leaders alike.

Employee-Executive Role Swap: A program where employees and executives swap roles for a day, providing unique insights and a deeper understanding of different levels and functions within the organization.

International Employee Storytelling Webcasts: Regular webcasts featuring stories from employees around the world, sharing their experiences, challenges, and successes, fostering a global community feeling.

Open-Source Project Progress Tracking: A platform where all employees can view real-time progress on various projects, fostering transparency in achievements, challenges, and workflows.

Multicultural Employee Insight Forums: Forums focusing on the diverse cultural backgrounds of employees, encouraging sharing of perspectives and experiences to enhance mutual understanding and respect.

Intercontinental Virtual Team Challenges: Company-wide challenges or projects that require cross-continental teams to collaborate, fostering teamwork and a sense of global unity.

Transparent Innovation Lightning Talks: Quick, impromptu talks by employees or leadership about innovative ideas or projects, fostering a culture of openness and creativity in sharing new concepts.

Including crafting the prompt, it took one of us 5 minutes and 30 seconds to get to that output. We then started a list of 100 ideas, but it would take too much space to post here (though it did come up with some interesting ideas, including the “DecisionDiary ‘Behind the Decisions’ web series where leaders share the rationale behind major company decisions, providing employees with a deeper understanding of the decision-making process”).

Overall, this paper illustrates two ideas we continue to press with clients. First, how you use generative AI matters, and taking the time to understand the nuances of how to prompt for output is well worth the time (we find it takes about 10 hours of hands-on work to develop this skill but learning from other sources like Confluence or power users on Twitter/X helps). So keep putting in the time and better output will follow. Second is the value in seeing generative AI as a collaborator. We would never outsource idea generation for an important client matter to ChatGPT. We would, though, use it as yet another asset in creating great work — in this case as a source of new ideas that can complement or provide contrast to our own. It takes a lot of time, energy and resources to get five people together to brainstorm for two hours, but with GPT-4 you can quickly add a fast and thoughtful colleague to your process. This is just one of many ways to use generative AI in augmenting, rather than replacing, the work you do, and it’s a good one to start making a bigger part of your daily work.

SAP Cuts Jobs Citing AI as Justification

The technology firm’s restructuring is part of an important larger trend.

SAP SE recently announced a significant restructuring plan involving 8,000 jobs. The intent is to shift the company toward AI-driven business areas, with the firm investing 2 billion euros to either retrain employees with AI skills or replace them through voluntary redundancy programs. The restructuring is part of SAP’s broader strategy to embrace AI and invest in AI-powered technology startups.

This is one of the most recent in a series of such announcements, with firms restructuring or reducing headcount either because of increased investment in AI, a replacement of current roles with AI tools, or both (Axios has a nice summary of many of these announcements here). So far, we have seen little to no impact of these decisions in corporate communication teams among our clients. We are very aware, though, of the ability of tools like Microsoft Copilot to allow all employees with access to very quickly create what we might call “mundane” content: run-of-the-mill talking points, FAQ documents, internal memos, scripts, images, press releases, and more. As that use grows, we think it will force a reconciliation among communication teams about how traditional content-creation tasks are distributed across employees, internal clients, the communication team, outside providers, and AI. This, in turn, will raise questions of organization and staffing — and now is not too soon to start thinking these issues through.

Will Generative AI Make Everyone a Manager?

Dan Shipper of Every thinks so.

In an early October Confluence post, we wrote, “If everyone in the company can now create pretty good, or even really good, content thanks to tools like GPT-4 and Midjourney (and soon, videos and music through similar technology), the role of corporate communication will need to change in some capacity from being the creators of content to being the shepherds of quality, brand standards, and governance.” This dynamic is only accelerating with the arrival of enterprise tools like Microsoft 365 Copilot, as we wrote last week. How the role of corporate communication changes in a world where the cost of content creation approaches zero is one of the most pressing questions facing the profession.

Earlier this month, Dan Shipper of Every published an essay that, although not specifically about corporate communication, touches on a similar theme. Shipper suggests that the ubiquity of generative AI will in some important ways require everyone in organizations to become a manager:

Even junior employees will be expected to use AI, which will force them into the role of manager — model manager. Instead of managing humans, they’ll be allocating work to AI models and making sure the work gets done well. They’ll need many of the same skills as human managers of today do (though in slightly modified form).

The essay, which is relatively short and worth reading in its entirety, goes on to outline some key managerial skills which are likely to translate to the management of AI models: a coherent vision, a clear sense of taste, the ability to evaluate talent, and knowing when to get into the details. The important point is that skills like these were previously the province of the “official” managerial class or “people managers” in an organization. With the proliferation of these powerful new technologies, everyone in an organization — including the most junior employees — will have to advance and adapt their managerial skills.

For the leaders of corporate communication professionals whose primary role is or was to create content — which is the case for many junior employees — the question is what are the “next level” skills that we need to prioritize now? How do we cultivate them? And how do we develop a team of managers (and, just as important, advisors), not just makers? How do you teach someone vision, taste, and evaluative ability without having the benefit of them doing lots of entry-level work? These are some of the human factors associated with generative AI that we’re thinking about quite deeply, and that will continue to be a focus of ours.

Engaging Multiple GPTs in the Same Chat

ChatGPT’s new “GPT Mentions” feature makes it possible.

We’ve written extensively about custom GPTs [2] since they were first introduced in November of 2023, and we recently shared several of our own GPTs. Thus far we’ve found real utility in GPTs and consider them amplifiers of the power of ChatGPT. One of the biggest limitations to date, however, has been that users could only engage with one GPT at a time. That changed this week with OpenAI’s beta rollout of GPT Mentions, which allows users to engage with multiple GPTs within the same conversation by tagging them.

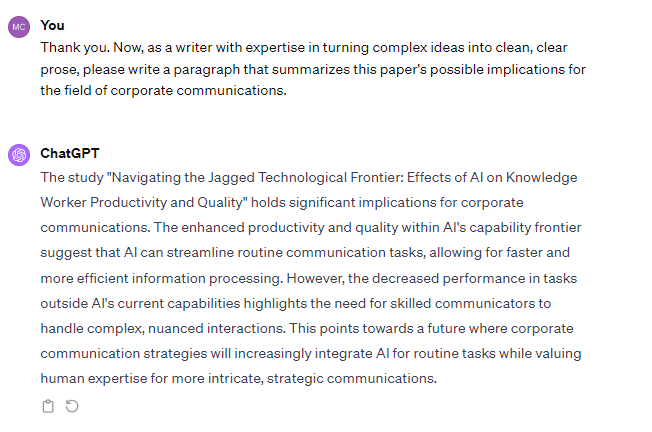

While we only recently gained access to this feature and are just beginning our experiments with it, we were impressed enough by the potential that we wanted to share an example with Confluence readers. Below we demonstrate GPT Mentions in action with a simple exchange that engages two of our publicly-available custom GPTs, the Digest Bot and the Writing Coach. The process begins when we enter “@” and see a menu of recently-used and pinned GPTs from which to choose:

We start by tagging the Digest Bot and uploading a 50+ page academic article for it to process. As we’ve configured it to do, the Digest Bot then produces a factual summary, critique, and interpretations and inferences.

We then shift back to the “base” mode of ChatGPT (without any GPT engaged) to write a paragraph summary:

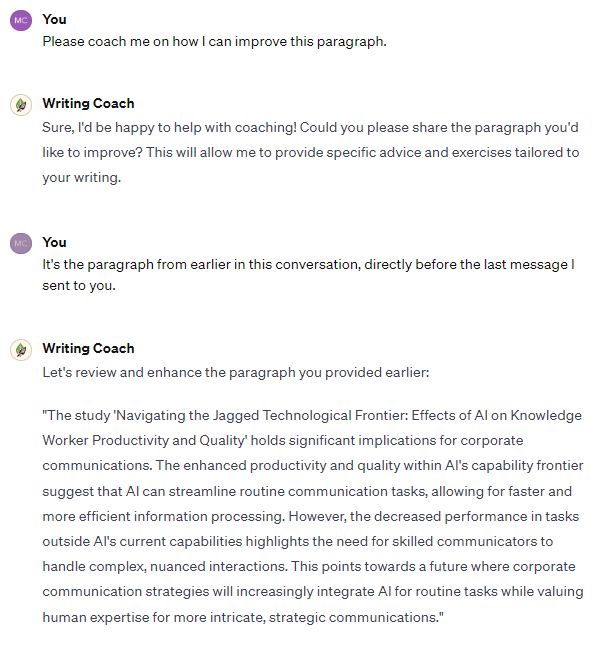

To wrap up this very simple example, we then engage the Writing Coach for ideas to improve this paragraph. Interestingly, when we engaged the Writing Coach, we needed to point it to the specific paragraph to which we were referring, but once we did, it moved forward as expected:

All of the above unfolded within a single conversation. Switching between GPTs in the conversation was quick and easy, where previously this would have required starting different conversations with each GPT and copying and pasting output between the two. It’s a very simple example, but one can begin to imagine what’s possible with the ability to use GPTs in this way.

We’ve had access to GPT Mentions for less than 48 hours, so our experiments with it have been limited. That said, this new functionality seems likely to represent a step change in the power and utility of custom GPTs. We’ll continue to share what we learn as we spend more time with this new feature.

We’ll leave you with something cool: Google shares their latest text-to-video model, Lumiere.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] Factual Summary:

The paper, titled "Prompting Diverse Ideas: Increasing AI Idea Variance," authored by Lennart Meincke, Ethan Mollick, and Christian Terwiesch from The Wharton School of the University of Pennsylvania, focuses on enhancing the diversity of ideas generated by Artificial Intelligence (AI), specifically using GPT-4. It was published on January 27, 2024.

Key points include:

Objective: The research aims to increase the dispersion in AI-generated ideas, particularly focusing on new product development for college students priced under $50.

Methodology: The study compares the effect of different prompting methods on AI (GPT-4) in terms of idea variance, using metrics like Cosine Similarity, the number of unique ideas, and the exhaustion rate of idea space.

Findings:

AI-generated ideas, without special prompting, are less diverse compared to ideas generated by human groups.

Prompt engineering significantly improves the diversity of AI-generated ideas.

Chain-of-Thought (CoT) prompting leads to the highest diversity, closely matching human group performance, and generates the most unique ideas.

Prompting Strategies: Various strategies were tested, including minimal prompting, persona adoption, sharing creativity techniques, and CoT prompting. Each strategy's effectiveness in producing diverse ideas was evaluated.

Outcome Measures: Cosine similarity was used to assess idea similarity, with a focus on unique idea generation and the speed at which ideas become repetitive.

Results: It was found that human-generated ideas had a lower average cosine similarity (indicating greater diversity) compared to unaided AI-generated ideas. However, certain AI prompting strategies, particularly CoT, significantly closed this gap.

Critique:

Methodology Strengths:

The study's design allows for a clear comparison between different prompting strategies and their impact on idea diversity. By using a consistent AI model (GPT-4) and a uniform set of criteria (new product ideas for college students under $50), the research maintains focus and relevance.

Employing quantitative measures like Cosine Similarity and unique idea count provides objective metrics to assess the effectiveness of each prompting strategy. This adds rigor to the analysis.

Methodology Limitations:

The research focuses exclusively on GPT-4, which may limit the generalizability of findings to other AI models or future iterations of GPT. Different AI systems might respond differently to the same prompting strategies.

The study measures idea diversity but does not extensively address idea feasibility or practicality, which are crucial in new product development. A more holistic approach considering these factors might be beneficial.

The selection of the target audience (college students) and price point (under $50) may influence the types of ideas generated. Different demographics or price constraints might yield different results.

Findings and Interpretation:

The findings highlight the potential of prompt engineering to enhance AI's creative capabilities. However, it's important to recognize that AI-generated ideas, even with advanced prompting, may still lack the nuanced understanding and context-awareness inherent in human creativity.

The superiority of Chain-of-Thought prompting in generating diverse ideas is a significant observation, suggesting that more elaborate and structured prompts can lead AI to explore a broader idea space.

Future Research Directions:

Further studies could explore the effectiveness of these prompting strategies across different AI models and settings.

Research could also investigate how combining human and AI creativity, possibly through collaborative platforms, could yield even more diverse and practical ideas.

Interpretations and Inferences:

Implications for AI's Role in Creativity:

This research underlines the potential of AI as a tool for augmenting human creativity, particularly in idea generation for product development. The effectiveness of different prompting strategies indicates that AI's creative output can be significantly influenced by how questions or tasks are framed.

The success of Chain-of-Thought prompting suggests that AI may be more effective in creative tasks when guided to mimic human-like thought processes, which could have broad implications for AI-assisted design, marketing, and innovation.

Influence of Prompt Engineering on AI Outputs:

The study demonstrates the crucial role of prompt engineering in harnessing the full potential of AI models. This implies that the future of AI applications may rely heavily not just on the underlying technology, but also on the skillful crafting of prompts and instructions.

The varied responses to different prompting strategies highlight the importance of understanding AI's operational mechanisms and limitations to optimize its use in practical applications.

Potential for Broader Application and Future Development:

While this study focused on product ideas for a specific demographic and price range, the findings suggest that similar strategies could be applied to other domains and contexts. This could lead to innovative applications of AI in fields like social policy, educational content development, and even artistic creation.

The evolving nature of AI models like GPT-4 suggests that future iterations may offer even more sophisticated responses to complex prompting strategies, further enhancing their utility in creative processes.

Considerations for Ethical and Practical Deployment of AI in Creative Fields:

As AI becomes more adept at creative tasks, it raises questions about originality, intellectual property, and the ethical implications of AI-generated content. Ensuring responsible use and attribution in AI-assisted creativity becomes increasingly important.

The potential for AI to democratize creativity, making high-level creative ideation more accessible, could have significant impacts on industries reliant on innovation and design. This, however, also necessitates careful management to avoid undervaluing human creativity and expertise.

[2] In a blog post introducing GPTs, OpenAI describes GPTs as “custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills.”