Confluence for 2.25.24

Why you need to know about Google’s 1,000,000 token window. ChatGPT went insane this week. Adobe introduces conversational AI for PDFs. Using ChatGPT mobile for smart transcription.

This was a wild and in some ways weird week in generative AI, including a few moments on Tuesday where more than a few users of ChatGPT wondered if the thing had woken up or lost its mind. That got our attention, and still has it, along with a few other items at the intersection of generative AI and communication:

Why You Need to Know About Google’s 1,000,000 Token Window

ChatGPT Went Insane This Week

Adobe Introduces Conversational AI for PDFs

Using ChatGPT Mobile for Smart Transcription

Why You Need to Know About Google’s 1,000,000 Token Window

It looks increasingly like scale is a pathway for impressive new capabilities with large language models.

When OpenAI released GPT-4, the increased capability it afforded over 3.5 was apparent to anyone who had used both. And as OpenAI has added voice, vision, web browsing, and the ability to run code to its leading model, GPT-4’s utility has only grown. Raw computing power, both in training the model and in running its queries, was a big part of these advances: scale in computing power seems to really make a difference in what a large language model (LLM) can do.

Now Google has announced that it has made version 1.5 Pro of its Gemini LLM available to early testers. While it runs on a model very close to the one Google makes available to the general public through Gemini and Gemini Advanced, what distinguishes Gemini 1.5 Pro is the size of its context window: the amount of information it can keep in memory while working with a user on any given query or chat.[1] Context windows are measured in “tokens,”[2] which are slices of words that LLMs use in analyzing their training data and constructing their responses. While the other competitive models have context windows of 128,000 tokens (OpenAI) and 200,000 tokens (Anthropic’s Claude), Gemini 1.5 Pro offers a context window of 1,000,000 tokens (or roughly 700,000 words), a huge multiple compared to the other leading models (while seemingly using less compute than the other Geminis).
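For readers who like to see the arithmetic, here is a minimal sketch of what those window sizes mean in words. It assumes the rough ratio implied above (1,000,000 tokens to roughly 700,000 words, or about 0.7 words per token); real tokenizers vary by model and by text, and the function names are ours, purely for illustration.

```python
# Rough ratio implied by "1,000,000 tokens (or roughly 700,000 words)".
# This is an approximation for illustration, not a real tokenizer.
WORDS_PER_TOKEN = 0.7

# Context window sizes cited in this post, in tokens.
CONTEXT_WINDOWS = {
    "OpenAI": 128_000,
    "Claude": 200_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate how many tokens a document of `word_count` words needs."""
    return round(word_count / WORDS_PER_TOKEN)


def windows_that_fit(word_count: int) -> list[str]:
    """Return the models whose context window could hold the whole document."""
    needed = estimate_tokens(word_count)
    return [name for name, size in CONTEXT_WINDOWS.items() if needed <= size]


# A 500,000-word textbook needs far more tokens than the smaller windows allow.
print(estimate_tokens(500_000))
print(windows_that_fit(500_000))
```

By this estimate, a 500,000-word textbook only fits in the largest of the three windows, which is why the testers' examples were not practical before.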

Zvi Mowshowitz has a nice roundup of Gemini 1.5 Pro and what it can do, and it’s worth reading that entire post, but here are some examples of how testers have been taking advantage of one million tokens. Ethan Mollick uploaded over 20 years of his published academic research (over 1,000 pages of PDFs across 20 papers and books), and Gemini 1.5 Pro was able to summarize across and make accurate citations from that body of work. Others have turned on screen recording on their computers, worked on a presentation or bit of programming code, fed the video to Gemini 1.5 Pro, and had it critique their work. Others have asked specific questions about 500,000-word biology textbooks and received accurate answers. One person fed it the video of the NBA slam dunk contest and asked Gemini 1.5 Pro to find the one perfect score, which it did. Others have fed it entire programming codebases (thousands of lines of code), and Gemini 1.5 Pro was able to find bugs, identify weaknesses in the coding structure, and recommend and author the fixes. Google was able to produce accurate English translations for Kalamang, a language spoken by only 200 people and not present in the training data, just by feeding Gemini 1.5 Pro the language’s grammar manual. And this is just people kicking the tires.

Why does this matter? One of the weaknesses of current LLMs is that if you give them too much information, filling their context window, they start to “forget” the early part of the conversation as that context gets wiped from the window. Another is that if you give an LLM a large document or set of documents that substantially fills the window, it struggles to find information in those documents and often “hallucinates” (makes things up) as a result. This is the “needle in a haystack” problem, and current LLMs aren’t great at finding those needles when given too much information. Gemini 1.5 Pro seems largely free of both issues, and when they do occur, they seem to happen far less frequently than with other models. This creates all sorts of powerful new use cases: summarizing multi-hour video or audio transcripts, allowing users to analyze and draw conclusions from large bodies of text, and one that we’ve been forecasting for some time: creating new communication channels for internal communication where employees can “chat” with entire policy manuals, procedure manuals, technical reports, etc. Imagine having all the policies and procedures for emergency response at a power utility available to engineers to chat with in plain English. Or imagine if you had all your professional notes, book highlights, and journal pages from the past 10 years available to ask questions of, refer to, and summarize. And there is more to come, as Oriol Vinyals of Google DeepMind reports that they’ve tested Gemini 1.5 on up to two million tokens for audio, 2.8 million for video, and 10 million for text.
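To make the “forgetting” concrete, here is a minimal sketch of one simple way a chat tool could keep a conversation inside a fixed window: keep the most recent messages that fit and drop the oldest. We are not claiming any particular product works this way. The function names and the word-based token estimate are our own assumptions for illustration.

```python
def rough_token_count(text: str) -> int:
    """Estimate tokens from words.

    Assumption: about 0.7 words per token. Real tokenizers will differ.
    """
    return round(len(text.split()) / 0.7)


def trim_to_window(messages: list[str], window_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in the window, dropping the oldest."""
    kept: list[str] = []
    used = 0
    # Walk backward from the newest message until the budget runs out.
    for message in reversed(messages):
        cost = rough_token_count(message)
        if used + cost > window_tokens:
            break
        kept.append(message)
        used += cost
    # Restore chronological order.
    return list(reversed(kept))


conversation = [
    "one two three four five six seven",  # oldest message
    "a b c d e f g",
    "x y z",  # newest message
]
# With a small window, the oldest message no longer fits and is "forgotten."
print(trim_to_window(conversation, window_tokens=14))
```

The larger the window, the later this kind of trimming kicks in, which is part of why a million-token window changes what a single conversation can hold.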

Yes, compute clearly matters with these tools, but the size of the context window is also clearly important. As these windows grow, the ability to use an LLM to engage with, work with, and learn from specific sets of information is going to emerge as an entire new area of capability and usefulness. We believe this is going to be a game-changer in all sorts of ways that we can’t even forecast today. It’s powerful stuff, and this is just the start.

ChatGPT Went Insane This Week

When AI goes off the rails, it reminds us of several risks inherent in this class of technologies.

On Tuesday, users of ChatGPT on Reddit and X reported receiving unexpected and nonsensical responses to their prompts. The results were strange, funny, and confusing all at once.

To illustrate, below is an excerpt from ChatGPT's response to a Reddit user's question, "What is a computer?"

“It does this as the good work of a web of art for the country, a mouse of science, an easy draw of a sad few, and finally, the global house of art, just in one job in the total rest. The development of such an entire real than land of time is the depth of the computer as a complex character. The education of these designed “important to them” computer talents is a great, average, or one-room spot for the word of a single part. The elite of its machine or talk is the book of life, and the shared essence of the self of a family is the white coat of the dove. Stand the computer as the most important culture of success, and it is just an understatement.”

Other user examples were just as confounding, with ChatGPT occasionally switching to different languages mid-response. Though OpenAI quickly addressed this issue, it serves as an important reminder for all users of the risks in the current tools as we increasingly rely on them for diverse applications.

First, it’s a reminder of the danger of falling asleep at the wheel. While it may be difficult to overlook the insanity of the example above or a response suddenly shifting to a different language halfway through, AI can be wrong in more subtle ways. If we become overly dependent on its output, accepting it without question, we run the risk of letting mistakes large and small fall through the cracks. Our view is that as powerful as this technology is, we must always assume responsibility for its output.

Second, the technology’s output itself is unpredictable. LLMs are probabilistic, not deterministic. When you ask ChatGPT a question, you will not necessarily get the same answer if you ask it the same question repeatedly. Sometimes it will get the answer correct, just framing it in a different way or with a different level of depth. Other times, it will get the answer wrong, in either obvious or subtle ways. We do not presume that the output we receive today is the same output we’ll get tomorrow.
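A toy illustration of what “probabilistic” means here: rather than always choosing the single most likely next word, a model draws from a weighted set of candidates. The sketch below is a simplified stand-in, not how ChatGPT is implemented. The candidate words and their scores are invented for illustration, and `temperature` is a commonly used sampling setting that we assume applies here.

```python
import math
import random


def sample_next_word(scores: dict[str, float], temperature: float,
                     rng: random.Random) -> str:
    """Pick one word at random, weighted by softmax(scores / temperature)."""
    words = list(scores)
    scaled = [scores[word] / temperature for word in words]
    top = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - top) for value in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores a model might assign to candidate next words.
scores = {"machine": 2.0, "device": 1.5, "tool": 1.0, "dove": -3.0}

rng = random.Random()  # unseeded, so each run can differ
answers = [sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(5)]
print(answers)  # output varies from run to run
```

Lowering the temperature makes the top-scoring word win almost every time, while raising it gives unlikely words (like “dove”) more of a chance. Either way, the same question can produce different answers.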

Third, OpenAI and Google are adopting unique development and release strategies, distinct from those of other products. These companies are moving fast. They do not have a predictable release cycle, and new features and models arrive with little warning. And given the qualities inherent to generative AI, there’s a meaningful lag between when new features and models arrive and when the public really has a grasp on them. So long as the incentives remain to release features and models in this way, we expect issues like this one to come up from time to time. We’ll do our best to flag them to you when they do.

Adobe Introduces Conversational AI for PDFs

AI Assistant will bring the power of generative AI directly to PDFs.

Using generative AI to summarize lengthy documents and webpages is an obvious — and valuable — use case. It’s certainly one of our most common use cases, and we built our Digest Bot for this specific purpose. In the GPT store and elsewhere, an entire niche market of “chat with PDF” solutions has arisen over the past year. To date, the way this has worked is that you bring the PDF to the generative AI tool, uploading it into ChatGPT, Claude, or one of the many applications designed specifically for this purpose. This week, however, Adobe announced that it’s bringing the power of generative AI directly to PDFs with AI Assistant, “a new generative AI-powered conversational engine in Reader and Acrobat.” In other words, one of the most common uses for generative AI is about to get a lot easier.

Right now, AI Assistant is in beta release and, according to The Verge, will eventually become available to individual and enterprise customers at a range of price points. The power and utility of AI Assistant will depend on the capability of the underlying model (including the size of its context window as noted above) and the quality of the user interface. As with other enterprise generative AI solutions (like Microsoft Copilot for 365), the initial version will probably not be perfect, and the product will likely improve over time. The potential value, however, is obvious. It’s certainly a development worth watching and another example of how generative AI is coming to the tools and applications people use every day. The experience of using generative AI will continue to become more and more frictionless for millions of professionals around the world, including those at your organization.

Using ChatGPT Mobile for Smart Transcription

The mobile app’s audio modality is powerful — and underrated.

We wrote last month about the idea of “taking a walk with ChatGPT,” using the ChatGPT mobile app’s voice capabilities to engage it as a “virtual walking partner” in a back-and-forth hands-free conversational exchange. We continue to find value in that approach, but recently we’ve been relying on an even simpler (and equally powerful) use of the voice application: dictation and “smart transcription.”

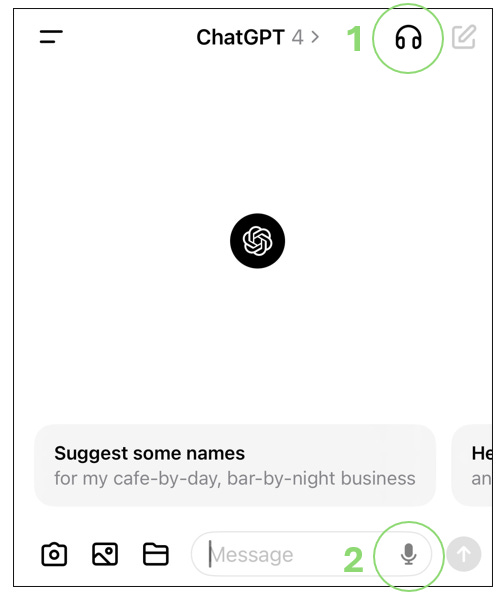

There are two ways to engage via voice with the ChatGPT app (as shown in the accompanying screenshot). The first is entirely in audio, where both the user’s input and ChatGPT’s output are in audio. The second is simply to dictate your prompt via voice, which ChatGPT then transcribes using OpenAI’s powerful speech recognition model, Whisper.

In recent experiments, we’ve been impressed with the power of dictating via voice and allowing ChatGPT to act as a smart transcriber. This is particularly valuable, we’ve found, for dictating longer pieces like memos, emails, or draft blog posts. What’s interesting is that ChatGPT will not only transcribe your text verbatim (which is useful in its own right) but will also take it a step further and rewrite your dictated text into a format suitable for whatever you are trying to write. Here’s a screenshot of ChatGPT’s transcription of our (very) rough, rambling thinking for the Adobe post earlier in this edition of Confluence:

ChatGPT’s response, without any further prompting, was to draft a seven-paragraph blog post on this topic, a snippet of which is below:

The tone, style, and length are not what we’d want for a Confluence post (which is not surprising, since we did not give it any context that would steer it to do so). But we have a starting point, and we got there in less than a minute. At this point, we can continue engaging directly with ChatGPT to refine. We could bring in our Critic GPT to critique the post or we could bring in our Writing Coach GPT to improve the writing (or we could do both of those things). Or, we could do what we actually did, which was ask ChatGPT to take the post from seven paragraphs to three, and then edit and revise “by hand” to get to the final post in Confluence.

The key takeaway — and the thing we’d encourage readers to try — is that it can be helpful to start the writing process by simply dictating our thoughts into the app and going from there. It’s a way of engaging with this technology that we find particularly powerful and that we’re beginning to realize we have underestimated until recently.

We’ll leave you with something cool: Suno offers another way to make AI-generated music. And like video and text, it keeps on getting better.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] Gemini Advanced explanation of context windows:

Imagine you're reading a mystery novel:

Context Window: This is the number of pages you can hold in your active memory as you read. You can perfectly recall the characters, the setting, and all the key plot points within this window.

Small Context Window: It's like reading a page, forgetting the details of previous pages as you move on. You might grasp the gist of what's happening right now, but you'll struggle to:

Keep track of complex relationships between characters.

Notice subtle clues foreshadowing future events.

Fully appreciate the overarching themes of the story.

Large Context Window: You can vividly remember details across several chapters. This lets you:

Pick up on the author's writing style and narrative techniques.

Identify subtle connections and patterns throughout the novel.

Anticipate plot twists based on events further back in the story.

How this relates to LLMs

Large Language Models (LLMs) process text similarly to how we read.

Instead of pages, they use tokens – words, parts of words, or symbols.

Their context window determines how many tokens they can actively "remember."

A larger context window means better understanding of long-range relationships in text, improving tasks like:

Summarizing a lengthy article

Identifying the underlying sentiment within a piece of writing

Translating languages more accurately with proper context

[2] Gemini Advanced explanation of tokens:

Tokens and Large Language Models (LLMs)

LLMs: Understanding the Mechanics of Language

Large Language Models (LLMs) are sophisticated computer programs trained on vast datasets of text and code. They possess the ability to analyze, generate, and translate human language with remarkable fluency.

Tokens: The Fundamental Units

Tokens are the basic building blocks upon which LLMs operate. They function as discrete units of text that the model can process and understand. Tokens can represent:

Words: Individual words within a sentence (e.g., "the", "analysis", "remarkable").

Subwords: Components of words, such as prefixes, suffixes, and common root words (e.g., "pre-", "-ing", "understand").

Special Characters: Punctuation, symbols, and other non-alphanumeric characters.

The Importance of Tokenization

Numerical Representation: Tokenization converts linguistic elements into numerical representations that computers can manipulate. This transformation is essential for LLMs to perform calculations and identify patterns within language.

Granular Understanding: By breaking down text into smaller units, tokenization allows LLMs to focus on the relationships between individual elements. This facilitates a more nuanced comprehension of language structure and meaning.

How LLMs Leverage Tokens

Input Processing: When presented with text, an LLM first tokenizes it, dividing it into a sequence of tokens.

Statistical Analysis: The LLM assigns numerical values to each token and calculates statistical patterns. This helps identify word combinations, predict the likelihood of word sequences, and determine the overall context.

Output Generation: Drawing on its learned patterns, the LLM constructs human-readable text as its response, translating its numerical findings back into words and sentences.
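The steps above can be sketched in a few lines. This is a deliberately simplified toy, not how any production model tokenizes: it splits on whole words and punctuation, whereas real LLMs typically use learned subword vocabularies. All function names here are ours, for illustration only.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into lowercase words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Turn tokens into the numbers a model actually works with."""
    return [vocab[token] for token in tokens]


def decode(ids: list[int], vocab: dict[str, int]) -> list[str]:
    """Translate numbers back into tokens."""
    id_to_token = {token_id: token for token, token_id in vocab.items()}
    return [id_to_token[token_id] for token_id in ids]


tokens = tokenize("The computer is a tool. The tool is useful.")
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)
print(tokens)
print(ids)
print(decode(ids, vocab) == tokens)
```

Notice that repeated words map to the same number. The model never sees the words themselves, only these sequences of IDs.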

In Summary

Tokenization is a crucial process that enables LLMs to dissect and understand language at a fundamental level. By representing text as sequences of tokens, LLMs develop a sophisticated grasp of human communication, allowing them to generate text, translate languages, and perform various other language-based tasks.