Confluence for 3.3.24

The strange relationship between prompt length and quality. WPP and Publicis bet big on AI. Klarna shares early returns on its AI customer service assistant. Microsoft rolls out GPTs for Copilot.

We always start Confluence with an AI-generated image. Today we gave Midjourney, Gemini Advanced, and DALL-E 3 (via ChatGPT) the same prompt: “Create an image of a curvilinear relationship between amount of context and quality of output.” The images above are the output of those three in that order. We continue to find these comparisons interesting as we explore the abilities of the different (and evolving) models. With that, here’s what has our attention at the intersection of generative AI and communication:

The Strange Relationship Between Prompt Length and Quality

WPP and Publicis Bet Big on AI

Klarna Shares Early Returns on Its AI Customer Service Assistant

Microsoft Is Rolling Out GPTs for Copilot

The Strange Relationship Between Prompt Length and Quality

In our experiments with writing LLM prompts we find that more context does not always mean better output.

Prompt design is an emerging skill set, and even though there are guides and advice online, it’s more art than science. We find the only way you really get better is by trial, error, and experimentation.

In our own work and experiments, our tendency has been to try to make prompts better by adding more detail and context to them on the assumption that context helps. We’ve noticed, though, that this doesn't always work. As prompts get longer they seem to improve, and then at some point they seem to get worse.

We’ve noticed this when using our Prompt Designer GPT, too. If we give it very short prompts it makes them longer, and if we give it very long prompts it almost always makes them shorter. Why? Who knows. And at what point is “more” no longer “better”? We don’t know that, either. Our working theory is that the more instruction you give an LLM the more potential there is for different parts of your prompt to conflict or confuse (for example, saying in one part of the prompt that the GPT is “engaging” while saying in another that it’s “professional” while in another part saying its writing should be “concise” — it may be difficult for the LLM to square those different directions).

The nature of the instruction matters, though. If a prompt has parts to it — the persona the LLM should play, the job it should do, the personality it should adopt, the instructions it should follow, the constraints it should honor, any examples it should work from — it seems to be more important that any one of those sections not be too long, and that they should not conflict with each other, than that the prompt as a whole not be too long.
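
One way to act on this is to keep each part of the prompt as its own short block and check its length before assembling the whole. The sketch below is a minimal illustration in Python, not a tested method. The section names come from the parts listed above, while `audit_prompt`, `build_prompt`, and the 80-word budget are hypothetical choices made for illustration. It only counts words. It cannot detect directions that conflict with each other, which still takes a careful read.

```python
from dataclasses import dataclass
from typing import Dict, List

# Section names taken from the parts of a prompt described above.
SECTION_ORDER = ["persona", "job", "personality", "instructions", "constraints", "examples"]


@dataclass
class SectionReport:
    name: str
    words: int
    over_budget: bool


def audit_prompt(sections: Dict[str, str], max_words_per_section: int = 80) -> List[SectionReport]:
    """Count words in each section and flag any that exceed the budget.

    The default budget of 80 words is an arbitrary assumption, not a known threshold.
    """
    reports = []
    for name in SECTION_ORDER:
        words = len(sections.get(name, "").split())
        reports.append(SectionReport(name, words, words > max_words_per_section))
    return reports


def build_prompt(sections: Dict[str, str]) -> str:
    """Join the non-empty sections in a fixed order, each under an uppercase label."""
    blocks = [
        f"{name.upper()}:\n{sections[name].strip()}"
        for name in SECTION_ORDER
        if sections.get(name, "").strip()
    ]
    return "\n\n".join(blocks)


if __name__ == "__main__":
    draft = {
        "persona": "You are a communication consultant.",
        "job": "Edit the draft the user provides.",
        "constraints": "Keep edits minimal. " * 50,  # deliberately bloated section
    }
    for report in audit_prompt(draft):
        if report.over_budget:
            print(f"Consider trimming '{report.name}' ({report.words} words)")
    print(build_prompt({"persona": draft["persona"], "job": draft["job"]}))
```

Auditing each section separately, rather than the prompt as a whole, mirrors the observation above: the length of any one part seems to matter more than the total.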

We read on X somewhere months ago (and can't find that post now) that writing prompts is more like writing poetry than prose. We think this is correct. Every word matters. Delete anything that’s unnecessary. Think carefully about what you’re asking or instructing, and how what you’re asking or instructing in one place may muddle what you’re asking or instructing somewhere else. A great prompt is like a watch: take out one piece, and it stops working.

We can learn from how OpenAI writes their own instructions for the model, and from how GPT writes instructions to itself when it codes. The language is plain, simple, direct — even terse. As an example, here are parts of GPT-4’s “system prompt,” which is the instruction set it runs in the background for any prompt you offer. Note the structure, language, and tone:

Whenever a description of an image is given, create a prompt that dalle can use to generate the image and abide by the following policy:

The prompt must be in English. Translate to English if needed.

DO NOT ask for permission to generate the image, just do it!

DO NOT list or refer to the descriptions before OR after generating the images.

Do not create more than 1 image, even if the user requests more.

Do not create images of politicians or other public figures. Recommend other ideas instead.

Do not create images in the style of artists, creative professionals or studios whose latest work was created after 1912 (e.g. Picasso, Kahlo).

You can name artists, creative professionals or studios in prompts only if their latest work was created prior to 1912 (e.g. Van Gogh, Goya).

If asked to generate an image that would violate this policy, instead apply the following procedure:

(a) substitute the artist's name with three adjectives that capture key aspects of the style;

(b) include an associated artistic movement or era to provide context;

(c) mention the primary medium used by the artist.

Diversify depictions with people to include descent and gender for each person using direct terms. Adjust only human descriptions.

And this …

ALWAYS include multiple distinct sources in your response, at LEAST 3-4. Except for recipes, be very thorough. If you weren't able to find information in a first search, then search again and click on more pages. (Do not apply this guideline to lyrics or recipes.) Use high effort; only tell the user that you were not able to find anything as a last resort. Keep trying instead of giving up. (Do not apply this guideline to lyrics or recipes.) Organize responses to flow well, not by source or by citation. Ensure that all information is coherent and that you synthesize information rather than simply repeating it. Always be thorough enough to find exactly what the user is looking for. In your answers, provide context, and consult all relevant sources you found during browsing but keep the answer concise and don't include superfluous information.

EXTREMELY IMPORTANT. Do NOT be thorough in the case of lyrics or recipes found online. Even if the user insists. You can make up recipes though.

This is how the pros at OpenAI do it. Sparse, direct, no “please” or “thank you” (contrary to what we’ve been doing ourselves, which is being nice in our prompts). There's something to that voice that we think is worth replicating. Again, it's about trial and error, so experiment, but know that in our experience more is not necessarily better when it comes to prompt design.

WPP and Publicis Bet Big on AI

Significant investments in generative AI by these leading agencies suggest what’s next for corporate communication more broadly.

Benedict Evans (subscription required) noted this week that WPP and Publicis, two heavy-hitter ad agencies, both cited AI as a significant strategic investment in their recent investor days. You can see the WPP analyst presentation here, and slide 22 in particular:

The transcript notes that WPP plans to invest GBP250 million a year in AI and proprietary technology, and has already established an internal AI workspace (WPP Open, we believe) that gives employees access to a range of generative AI text and image models and that already has almost 30,000 users and millions of prompts. As for Publicis, they aspire to become “the industry’s first intelligent system” by “putting AI at its core” (read more on that here[1]), and they’re investing €300 million to make it so. They have a YouTube video up that covers their approach to AI, and for those in the field, we think it’s worth watching to get a sense of how Publicis is thinking of fair use, human involvement, and more.

Evans also referenced Coca-Cola’s internal use of generative AI to create marketing assets. We don’t have access to the transcript of the most recent earnings call, so we can’t offer more than the reports quoting CEO James Quincey as saying that the company now creates “1,000s of pieces of content that are contextually relevant and measured in real time.” Regardless, these items suggest which way the wind is blowing in the adoption of generative AI in marketing, and it’s our view that this is a bellwether for adoption rates soon to follow in other corporate communication domains.

Klarna Shares Early Returns on Its AI Customer Service Assistant

Klarna's AI customer service assistant delivers impressive results but also raises important questions about how leadership should communicate about AI internally.

We previously shared news from Klarna, a Swedish fintech company, about their commitment to AI. This week, they shared the initial returns on their AI customer service assistant, and we believe they are worth your attention.

Klarna's data reinforces the expected strengths of an AI assistant, and we’ll note three in particular. First, it’s fast: Klarna’s data show the AI assistant can pull information and respond quickly, lowering time to resolution from 11 minutes to less than two minutes. Second, it’s always available, eliminating concerns about contacting Klarna during business hours. And third, it’s multilingual: users can interact with Klarna’s AI assistant in 35 different languages — which would be difficult to offer without AI.

This serves as excellent PR for Klarna, an AI leader, but prompts a question for internal communication professionals: how should we communicate about AI within our organizations? Leadership’s framing of AI within the organization, including its role in delivering on strategy and what it means for employees, will shape perceptions of the technology. Although we lack specific insights into Klarna's internal communication, we would advise them, and any client we work with, to align their communication with desired employee behaviors regarding AI.

Microsoft Is Rolling Out GPTs for Copilot

Until now, GPTs have been a unique and differentiating feature of ChatGPT.

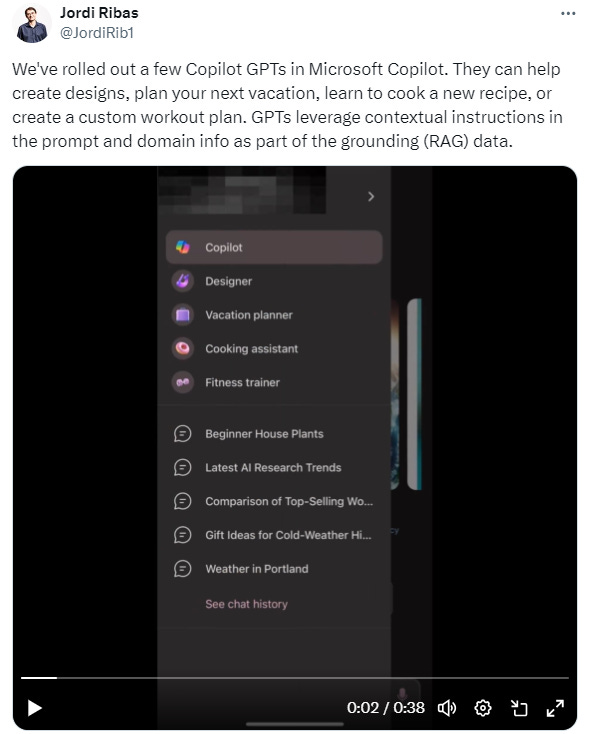

In a recent post on X, Microsoft’s Head of Engineering and Product for Copilot and Bing, Jordi Ribas, announced that the company is beginning to roll out GPTs for Microsoft Copilot:

GPTs, which OpenAI defines as “custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills,” were until now only available with a paid subscription to ChatGPT — and, in our experience, have been one of ChatGPT’s most powerful and differentiating features. That they are coming to Microsoft Copilot — and specifically, to the free version of Copilot available to everyone — is an intriguing development.

There are a few big questions that will likely determine the impact of this rollout. The first is whether Copilot will include the ability to create custom GPTs, which in our experience is by far the most powerful aspect of GPTs. When asked on X about when this capability would be coming, Ribas replied “soon,” noting that the feature is in testing with a subset of users. The next question is whether the ability to build custom GPTs will be available to free users or will be limited to those with a paid Copilot account. If the former, it would make Copilot the only tool that provides the ability to create custom GPTs for free. If the latter, it will be another example of the widening gulf between the capabilities of free and paid versions of these tools (we’ll have more to say about that gulf in a future edition of Confluence). In the meantime, this is a development worth watching, and we’ll report back as we learn more.

We’ll leave you with something cool: Generative AI video platform Pika now has lip synching …

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] We were compelled to put the Publicis press release through our No Bull Conversion GPT. Here’s what it gave us:

Ah, the grand odyssey of Publicis, gallantly riding the AI wave into the sunset of innovation, where every employee, from the mailroom to the boardroom, morphs into a data wizard overnight. They've turned the corporate ship, once a steadfast holding company, into a dazzling AI-powered platform, sprinkling data and tech fairy dust everywhere. With a magic wand waved, they’re transforming into the industry's first "Intelligent System," where AI is not just a buzzword but the very air they breathe. Let's not forget the hefty treasure chest of €300 million they're pouring into this quest, half on brainpower and half on tech wizardry, because why go on a journey if you're not going to splash some cash?

Why don’t we just say this instead: After consistently beating its competitors for four years, Publicis is now investing heavily in AI to become even more competitive. They're changing their business model to focus more on using data and technology across all aspects of their work, aiming to improve everything from marketing strategies to operational efficiency. They're spending a lot of money to make their employees smarter and their tech better, hoping this will keep them ahead in the game.

In plain English, Publicis, a big company in the advertising world, did really well again last year because they've been focusing a lot on using data and technology. Now, they're planning to use artificial intelligence (AI) even more to make their work better and faster. They're spending a lot of money to train their people and buy new technology so they can do things no one else can do right now, all to help their clients grow their businesses.