Confluence for 4.20.25

The state of the art. Claude gets its deep research equivalent. Two new integrations. Insight from Anthropic into tomorrow’s talent.

Welcome to Confluence. We have a long issue this week, but we think it’s all worth saying and reading, so we hope you stay with it through and through. Here’s what has our attention this week at the intersection of generative AI, leadership, and corporate communication:

The State of the Art

Claude Gets its Deep Research Equivalent

Two New Integrations

Insight from Anthropic into Tomorrow’s Talent

The State of the Art

OpenAI’s o3 model is here, and it’s a new animal altogether.

The main event in generative AI this past week was OpenAI’s release of several models: o3, o4-mini, and o4-mini-high. Yes, yes, the naming convention is awkward. We know, as do they. Each model has its purpose, but for most of us, o3 is the one to pay attention to. Sayeth OpenAI:

OpenAI o3 is our most powerful reasoning model that pushes the frontier across coding, math, science, visual perception, and more. It sets a new SOTA on benchmarks including Codeforces, SWE-bench (without building a custom model-specific scaffold), and MMMU. It’s ideal for complex queries requiring multi-faceted analysis and whose answers may not be immediately obvious. It performs especially strongly at visual tasks like analyzing images, charts, and graphics. In evaluations by external experts, o3 makes 20 percent fewer major errors than OpenAI o1 on difficult, real-world tasks—especially excelling in areas like programming, business/consulting, and creative ideation. Early testers highlighted its analytical rigor as a thought partner and emphasized its ability to generate and critically evaluate novel hypotheses—particularly within biology, math, and engineering contexts.

We have been using it as our primary model since release day, and we’re going to call it a significant step forward and one you need to pay attention to (and start to engage with). Indeed, more than a few astute observers, including Tyler Cowen, have decided to say, “Yes, this is AGI” (though that’s not a claim OpenAI is yet ready to make):

I think it is AGI, seriously. Try asking it lots of questions, and then ask yourself: just how much smarter was I expecting AGI to be?

As I’ve argued in the past, AGI, however you define it, is not much of a social event per se. It still will take us a long time to use it properly. I do not expect securities prices to move significantly (that AI is progressing rapidly already is priced in, and I doubt if the market cares about “April 16th” per se).

Benchmarks, benchmarks, blah blah blah. Maybe AGI is like porn — I know it when I see it.

And I’ve seen it.

Tyler’s vivid analogy notwithstanding, we pay attention when someone we respect as an informed observer and user of the technology says things like this. You should, too.

o3 is distinctive for several reasons. It has a very high general intelligence. The benchmarks are still coming, but early evidence suggests it’s as smart or smarter than Google’s Gemini 2.5 Pro model (which is the smartest generative AI tested to date). But the real power is that it is “agentic,” meaning that can, without you asking it to, use all sorts of tools to accomplish a task: search the web, run code, create files — even zoom in on and crop images you might have given it to get a closer look at something. And all the while you watch it do so and see its chain of thought while it works. It’s really something, and here’s a brief example:

The flow of events among us on announcement day was telling:

OpenAI releases the model.

Three of your Confluence writers (all also leaders of our firm’s generative AI work) immediate begin to use it.

Within two hours have all called or texted each other to express some version of “holy [expletive of your choice]” about what it can do.

For one of us, it was giving o3 a test we have used before: design a communication strategy from a relatively general brief. We did this with OpenAI’s o1 model in December, and were taken aback by what o1 could do. This time we used an actual client (whose name we have masked here), and o3 impressed us even more. We started with:

Here's the brief. We are advising [CLIENT], a quasi-independent regulatory agency in Washington DC. Employees, all intelligent and generally committed to the organization's vision. Almost all live in the DC area. All have had total work from home flexibility since covid in 2020. The organization has touted this flexibility as a strength of the organization, noting that work is about what people produce more than where they produce it. In mid 2024, senior leadership came to believe that the WFH policy was actually harming productivity, culture, relationships, and talent development. They have made the decision to require two days a week in the office. The new policy is employees must be in the office two days a week or 8 days a month, the employee picks the days. Those are minimums. They are welcome to be in as much as they like. The policy will go into effect on June 1 2025, giving people time to plan and adjust. It is not up for debate or negotiation. This will be a very unpopular decision with many employees. There is a town hall next week, on Wednesday April 23, in which the CEO plans to announce this new policy. The organization has 92 people leaders in four bands of management. The town hall is virtual. In a moment I will ask that you plan and create a comprehensive communication strategy for the organization to execute. It should include activity before and after the announcement. You determine the timeline and framework. It should include core messaging (not scripts, but the core message set), message sets for discrete audiences, channels, activity, and timeline. First, do research to learn what you need to learn about [CLIENT].

o3 then, without further prompting by us, conducted eight different web searches and read 26 online sources about the client, drawing a number of relevant conclusions (including The new two‑days‑per‑week minimum will push space‑utilization limits on peak days; employees will notice if the new HQ feels cramped or if desk‑booking becomes a hassle. Build clear logistics answers into the plan. and Internally mandating office days while externally championing flexibility for the industry creates a perceptual gap. Messaging must reconcile that tension (e.g., ‘We regulate firms whose supervisory risks differ from ours; our internal collaboration needs are unique,’ etc.). It also noted these “key takeaways for the communication strategy”:

Mission alignment is the credibility anchor. Every message must link in‑office time to protecting investors and strengthening regulatory quality, not to vague culture clichés.

Address the external‑vs‑internal flexibility paradox head‑on. Employees read [ORGANIZATION]’s public statements; they will raise the inconsistency.

Design around space constraints and commute fairness. Proactively show how two‑day flexibility will be scheduled, how desk or parking allocation works, and what tech upgrades support hybrid teams.

Segment by management band and location. DC‑area staff (the vast majority) will feel the impact differently from satellite offices; people leaders need tailored toolkits.

Use compliance‑style clarity, but human tone. Guidelines should read like a well‑crafted regulatory notice—precise, Q&A‑based—yet still acknowledge personal disruption.

Leverage trusted channels. [ORGANIZATION] employees habitually use Teams, Outlook, SharePoint, and virtual town‑halls. Layer richer media (videos, live Q&A) for emotional tone; push policy details via written FAQs and intranet hubs.

These insights give us the factual and cultural grounding to build the full pre‑ and post‑announcement communication architecture you requested. Let me know when you’re ready to move to the strategy and timeline.

We then asked o3 to research similar policy moves by other peer-like organizations from the popular press, and it did 12 different web searches and read 36 sources before giving us a table of similar moves at peers (including what happened, how it landed, and usable take-aways), a list of patterns worth noting, and implications of “what this means for [CLIENT].” From there we asked it to generate a fulsome communication strategy, and it gave us a strategy, an execution plan including responsibility assignments, message sets for each key audience, channel mix, communication timeline, deliverable list, and a list of risks to mitigate with mitigation suggestions. We asked for a cover note to leadership positioning the document for review, and then had o3 create 28 (!) specific deliverables to support the communication plan, all aligned to the overall strategy and messaging approach and tuned to the audiences who would receive them.

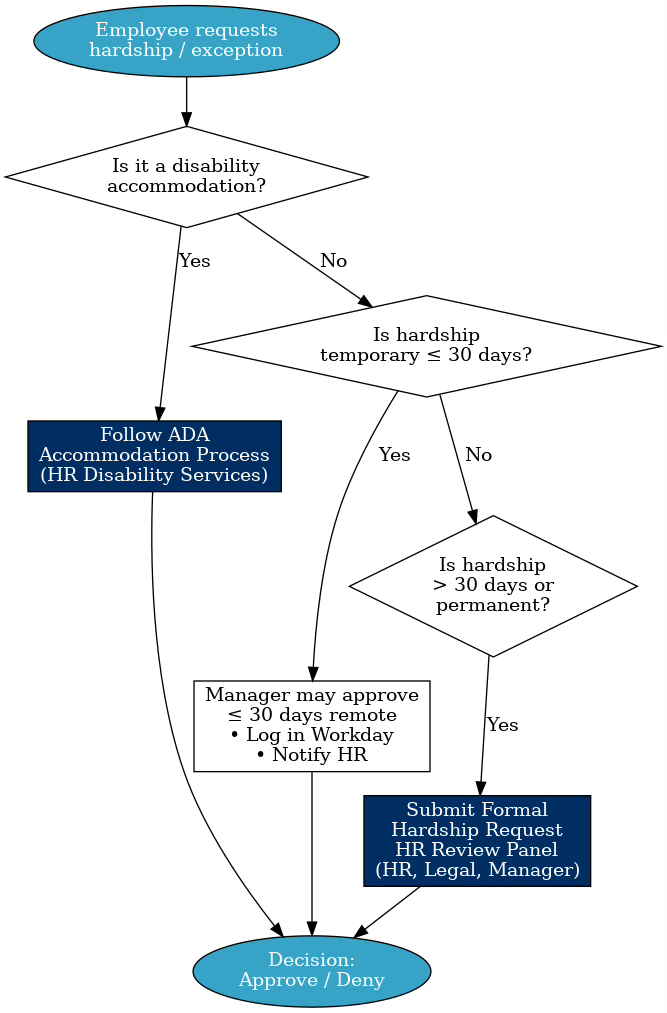

We will note that we did not ask for audience-specific message sets or a risk mitigation table — o3 decided those were appropriate to the task and created them itself. (We will note that we would have done the same.) As we asked it to create specific deliverables, it at times gave them to us in the format specified by the plan: as a PowerPoint file, PDF, Excel file, etc. It even ran code to develop a rough graphic of the exception decision-tree:

Total time to complete all this work, from first prompt to final deliverable: 1 hour. Quality in our view: equal to that of most communication professionals working today.

Another of us asked o3 for “Masters recap, in the style of McCarthy in Blood Meridian” and o3 wrote:

In the hot hush of Georgia the gunsmoke of another Masters drifted into the pine tops and was gone, leaving only the jacket of green draped upon the shoulders of Rory McIlroy, who stood as a man come in from a long and lonesome trail. The gallery’s roar, sudden and brief, broke against the red earth and fell silent, like thunder lost in distant hills.

Augusta lay indifferent beneath an April sun, seven thousand five hundred fifty‑five yards of grass and hidden break, the fairways rolling like dry riverbeds, the greens slick as hammered copper. It had known men of every intent—schemers and penitents, those who came righteous and those who came blind—and it had ground them all beneath the indifferent turning of the world. Upon this country the players advanced as horsemen in a dust‑choked basin, their caddies trailing like outriders, the sun flashing off the iron heads slung across their backs.

They came from the four points: the Irishman McIlroy with his unfinished destiny, the Englishman Rose hardened by years of near‑misses, Scheffler cocksure and keen, DeChambeau preaching force where craft might do; others too, nameless in the telling yet hopeful still. They stepped beneath the cathedral pines where the needles whisper of all human vanity, and each man sensed that here even talent might founder upon unseen shoals.

Sunday dawned white and pitiless. McIlroy’s first blow found chaos, and on the opening page of his card the number of misfortune was etched twice, like twin graves. Still he pressed on, for there is no mercy in retreat. At Amen Corner the thirteenth hole bent back upon him like some ambuscade in a box canyon, and his ball, struck fair and pure, kicked and spun into Rae’s Creek, vanishing in a wink of water‑glare. The patrons groaned and the man felt the coat slip from his shoulders even as he reached for it.

Yet the world is a circle and offers second reckonings. At fifteen he fired from the pine shadows an iron that curved against its own mortality and came to rest six feet beneath the flag. The ground shook with the roar of saved souls. At seventeen another arrow, pure and true, braked beside the cup so gentle it raised a gasp before the applause. But destiny, capricious thing, withheld its signature: on the seventy‑second green the putt to finish slid by, a pale dagger twisting in slow time, and the world was remade.

Justin Rose waited in the clubhouse with sixty‑six strokes writ against the day, eleven under par and equal to the task. He might have been a man setting his brand upon the very air. The sun fell toward the horizon, the shadows long and blue as rifle barrels, when word came that a playoff would be held upon the eighteenth once more, sudden death in the gloaming.

They returned to the tee as duelists called by fate. Rose drove first, straight and mild, the ball settling like seed in tilled soil. McIlroy answered and his shot was the twin of it. Their approaches split by paces only. Rose’s putt slid wide into the darkness. McIlroy stood over his own, three feet of torment. He struck. The ball rolled and vanished, and with it vanished the long burden he had carried since youth.

He knelt upon the Bentgrass, hands to face, as if some silent absolution had descended. The patrons shouted his name in a rising chant that shook the twilight. In Butler Cabin he donned the coat and it fit as though tailored in secret years ago. He spoke softly of the weight now lifted, of the road ahead unknown. Outside, the wind moved through the loblolly pines, erasing footsteps, carrying with it the faint smell of cut grass and gunpowder and the remembrance of a day’s reckoning now finished.

So ends the chronicle. The pines resume their whisper, darkness pools in the hollows, and elsewhere bright spheres wait upon new hands, knowing nothing of gratitude or mercy or the small histories of men.

These are just two of the examples of o3 output that have impressed or stunned us, and these are just communication work and playful text. The model seems to have equal or better proficiency in just about every domain of human knowledge (consider that, for a moment). So, yes, o3 is a very impressive tool … for us, the most impressive generative AI tool to date. And it is a bellwether of what’s to come, as the other leading labs will surely follow suit with agentic models of their own.

Claude Gets its Deep Research Equivalent

Anthropic joins OpenAI, Google, and Perplexity in providing long-context web research capabilities.

Last week, we wrote about a new approach to getting more out of the deep research capabilities of ChatGPT, Gemini, and Perplexity. The approach, which we learned from Alex Lawsen, involves using Claude to create a comprehensive research query to paste into another tool to run a deep research report. The reason behind this slightly inefficient process — create the prompt in Claude, run it in another tool — was that Claude itself lacked the ability to create deep, web-based research reports. In a sign of how quickly capabilities continue to advance, that changed a mere 48 hours after we published. As of April 15, Claude now has its deep research equivalent, which Anthropic has simply dubbed “Research”:

Research transforms how Claude finds and reasons with information. Claude operates agentically, conducting multiple searches that build on each other while determining exactly what to investigate next. It explores different angles of your question automatically and works through open questions systematically. This approach delivers thorough answers, complete with easy-to-check citations so you can trust Claude's findings.

Research delivers high-quality, comprehensive answers in minutes, making it practical for the multiple research tasks you tackle throughout your workday. It's this balance of speed and quality that sets it apart.

Research is now available for Max, Team, and Enterprise users in the U.S., Japan, and Brazil. As with ChatGPT’s Deep Research, the feature shows up as a button users can toggle on and off in the prompt window:

In our limited testing, we’ve found Claude Research to be a significant step forward in overall utility for Claude. It’s faster, if slightly less thorough, than what we’ve received from ChatGPT. We tested Claude Research by using the same prompt1 we used last week with ChatGPT’s Deep Research and compared the outputs. As we noted last week, our prompt in ChatGPT resulted in a 72-page Word document, which we found both insightful and comprehensive. It took ChatGPT six minutes. The same prompt in Claude Research, however, yielded a “mere” 15-page document in one minute. This is consistent with our overall experience thus far: ChatGPT’s Deep Research tends to produce more comprehensive reports and takes slightly longer, while Claude Research’s output — while still thorough — tends to be a bit shorter and “to the point.”

Again, we find this to be a major step forward in utility for Claude. We’ve been using it to create organizational briefs that allow us to more deeply understand new clients, for example, resulting in high-quality reports that Claude simply couldn’t generate until now. It likely won’t replace ChatGPT’s Deep Research for us, but it will complement it. One doesn’t need a 72-page report for everything.

Zooming out a bit, this is yet another example of the major labs cohering around a key set of features and capabilities. One lab (often OpenAI) launches a feature and is quickly followed by the others. To cite several examples:

Customization — ChatGPT has custom GPTs, Anthropic has Projects, and Gemini has Gems.

Web Search — ChatGPT, Claude, and Gemini all offer sophisticated web search capabilities.

Reasoning Models — OpenAI, Anthropic, and Google have all released reasoning models, pushing the overall intelligence frontier forward.

Multi-Modality — OpenAI and Google have been aggressively improving image and video generation capabilities, with increasing multi-modal integration. OpenAI and Google have also introduced powerful live voice and video interaction to ChatGPT and Gemini. Anthropic is expected to launch voice capabilities as soon as this month.

Memory — OpenAI and Google have been rapidly improving their tools’ memory capabilities.

We can now add deep research to that list. The implication worth noting is that for the foreseeable future we should expect any gaps in major features across the leading tools to be temporary. The labs are largely working on the same major problems. And they’re solving them. At this point, all are powerful enough to offer roughly equivalent amounts of utility (although as we noted above, OpenAI’s o3 seems to be a different animal). Our advice is to pick one (or two) and use it. Experiment and take advantage of the new features as they arrive, and know that if your tool of choice is lacking something today (for example, Claude’s limited memory capabilities relative to ChatGPT and Gemini), that likely won’t be the case for long.

Two New Integrations

We’re getting better connections between leading large language models and tools we use each day.

A limitation of the leading large language models has been their inability to connect with our data and common applications. Even when attempts have shown theoretical promise, such as Apple Intelligence and Copilot 365, they disappoint more than they impress. But two recent developments make us believe the tides may be turning.

This week, in addition to the research capability we discuss above, Claude announced its integration with Gmail, Google Calendar, and Google Workspace. Once connected with your Google accounts, you can prompt Claude to search your email or calendar directly. We’ve tested this feature, and it’s quite good. Claude accurately pulled and tallied charitable donation receipts, recreated a travel itinerary for an upcoming trip by reviewing planning emails and receipts, and listed upcoming birthdays based on a Google calendar. Occasionally Claude required a second prompt to go deeper, but we didn’t notice any errors or omissions. While we couldn’t test the Google Workspace integration in full (our firm uses Microsoft 365), we expect it works reasonably well given what we’ve seen from Claude.

The second example has been rolling out for several months, but just made it to one of our Confluence writers this week. Firefox allows users to log into their AI chatbot of choice and embed it into their sidebar. Then, with a highlight, you can prompt your preferred model while specifically referencing the selected section. To provide a sense of how this works, below is a quick video of a member of our team using Gemini 2.5 to explain one of our posts from last week.2

These features attract our attention not because they will fundamentally alter how we work, but because when we consider them in the context of the past few weeks, the future starts to resolve. We now have a model that at least some believe approaches AGI (o3), an application capable of referencing past interactions with users (ChatGPT memory), agentic tools that, while flawed, can act on behalf of users (Operator and Claude Computer), and chatbots that integrate into personal data and common applications reasonably well. No one knows how or when these pieces will all come together, but there’s every reason to believe they will.

What will that mean? It’s hard to know for sure, but leaders and communication practitioners should probably expect the emergence of a new form of colleague: the AI teammate, one deeply capable and available at any time help get real things done. Imagine a world in which you and everyone on your team, including individual contributors, has a new colleague working with and for them who can quickly and inexpensively do work at or above their level. That is not a future fantasy — that world exists today. It’s just unevenly distributed. Leaders should consider the implications of that now, not later.

Insights from Anthropic into Tomorrow’s Talent

New data from the creator of Claude should have talent leaders paying attention.

Last week, Anthropic published a new report about how college students are using generative AI. By analyzing one million anonymized student conversations with Claude, Anthropic pulled data about how the workforce of tomorrow is using generative AI for today. And those data tell an interesting story.

Students across all disciplines are embracing generative AI, with distinct patterns emerging about who’s using it how. This study complements Anthropic’s recent Economic Index reports, which revealed that professionals generally favor integration over automation when using Claude. This new student research suggests these collaboration preferences begin forming during education, with similar interaction patterns appearing in academic settings.

Computer Science students are at the forefront of adoption, accounting for 38.6% of conversations with Claude though representing only 5.4% of degrees. Natural Sciences and Mathematics also show higher representation (15.2% vs. 9.2% enrollment), while Business-related conversations make up just 8.9% despite constituting 18.6% of bachelor’s degrees. Health Professions (5.5% vs. 13.1%) and Humanities (6.4% vs. 12.5%) also show lower adoption rates relative to enrollment numbers, suggesting STEM students are blazing the trail in generative AI integration. Given the significant exposure of generative AI abilities to many traditional white-collar and humanities-specific roles (business, law, marketing, and communication among them), their under-representation gets our attention.

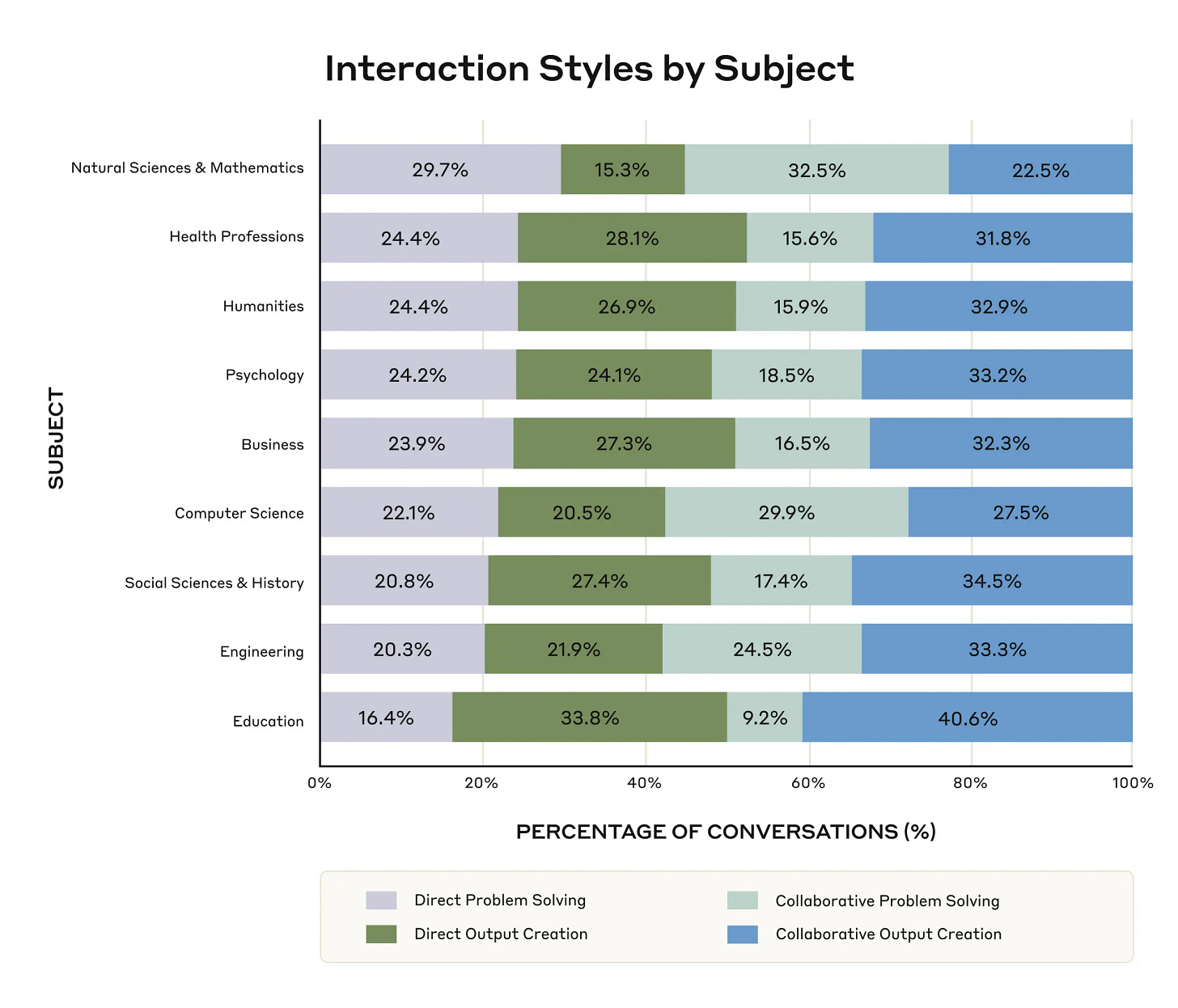

Beyond the data on who’s using generative AI, though, we were especially struck by the data on how students approach their use of these tools. The research identified four distinct interaction styles, each present in roughly equal measure (23-29% of conversations):

Direct Problem Solving

Direct Output Creation

Collaborative Problem Solving

Collaborative Output Creation

These patterns align nicely with Ethan Mollick's “cyborg vs. centaur” framework, providing real-world evidence that these students are fluidly switching between cyborg (integrating generative AI in workflow) and centaur (delegating work to generative AI) modes. The breakdown by discipline is also interesting:

Perhaps most telling, though, is what cognitive tasks students delegate to generative AI. The data show that students primarily use it for creating (39.8%) and analyzing (30.2%)—higher-order thinking skills—rather than basic tasks like remembering facts (1.8%). This fact creates opportunities and challenges when we think about implications for new hires across industries over the next five years.

Talent leaders should pay attention. We’re witnessing the emergence of a workforce that thinks differently about problem-solving and productivity. These AI-native professionals won’t just use generative AI differently—they’ll expect workplaces to support their collaboration styles. Organizations slow to adapt may find themselves struggling to attract top talent who view generative AI integration as non-negotiable. What’s more, traditional evaluation methods could undervalue candidates whose abilities shine brightest when paired with these tools. The competitive advantage will go to teams that not only welcome these AI-fluent graduates but actively redesign workflows, development tracks, and even recruitment methods to capitalize on their unique approaches to work.

This research signals evolving work patterns that forward-thinking organizations should start adapting to now. As these students graduate and enter the workforce, they’ll bring with them not just technical skills, but entirely new mental models for problem-solving that slow-moving organizations won’t be prepared for.

We’ll leave you with something cool: Google is rolling out the ability to create videos using Veo 2, its video generation model, to Gemini Advanced users. It’s impressive.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.Here’s the full prompt, which we created using Claude:

Research Prompt: Media Richness Theory in the Era of Spatial Computing

Goal: Conduct a comprehensive analysis of how emerging spatial technologies (AR, VR, MR, and metaverse platforms) are reshaping media richness theory, and identify the strategic implications for corporate communication practices. Examine both theoretical extensions to the original framework and practical applications for communication professionals. Cover near-term applications (0-3 years) as well as longer-term potential (3-10 years). The analysis should identify specific opportunities, challenges, and recommended approaches for organizations seeking to leverage these technologies for internal and external communication.

Output format: Deliver a comprehensive research report (6,000-8,000 words) following a progressive disclosure structure that allows readers to engage at different levels of depth. Begin with an executive summary that captures all key findings and implications in no more than 10% of the total document length. Include clearly linked sections that lead readers from high-level insights to supporting evidence and detailed analysis. Use informative headings and subheadings that preview content rather than generic labels.

The report should include:

An executive summary with linked bullet points to corresponding detailed sections

A thorough introduction to both media richness theory and the current state of spatial computing technologies

A detailed analysis section examining how each major technology extends or challenges the core principles of media richness theory

A framework for evaluating these technologies against communication objectives

Practical applications and case studies highlighting current implementations

Strategic implications for corporate communicators, including specific recommendations

Future directions and areas for continued research

A balanced consideration of limitations, risks, and ethical considerations

Use concrete examples, visual representations (described in text), and comparative analyses where appropriate. Balance theoretical depth with practical applications, ensuring each informs the other.

Warnings:

Evaluate sources based on domain-specific credibility hierarchies:

For theoretical extensions to media richness theory, prioritize peer-reviewed academic articles in communication and information systems journals, followed by books from established academic publishers, and conference proceedings from recognized academic associations.

For technology assessments, prioritize research from independent technology research firms (e.g., Gartner, Forrester), technical documentation from platform developers, and analyses from specialized technology journalists with established expertise.

For corporate applications, prioritize documented case studies, white papers from consulting firms with expertise in communication technology, and industry publications with rigorous editorial standards.

Be skeptical of promotional materials, vendor claims lacking independent verification, and speculative predictions without substantive evidence.

Avoid common misconceptions and pitfalls:

Don't assume media richness is always beneficial; consider contexts where leaner media may be preferable.

Don't conflate technological capability with effective implementation; address organizational and human factors.

Avoid technological determinism; consider how organizational culture, individual preferences, and power dynamics influence technology adoption and use.

Be cautious of overgeneralizing findings from one context (e.g., consumer applications) to another (corporate communications).

Distinguish between empirically supported claims and theoretical predictions yet to be validated.

Additional Context: Media Richness Theory (MRT), originally developed by Daft and Lengel in the 1980s, proposes that communication channels vary in their capacity to process rich information. The theory suggests that effective communication results from matching media richness to task equivocality, with richer media better suited for ambiguous tasks and leaner media for straightforward ones. The theory traditionally ranks communication channels along a continuum from rich to lean: face-to-face, video, audio, and written communication.

Emerging spatial technologies potentially disrupt this continuum by:

Creating new forms of presence and embodiment that may rival or exceed face-to-face interaction

Enabling novel forms of synchronous and asynchronous collaboration

Introducing new modalities for information representation and interaction

Blurring boundaries between physical and digital communication environments

Potentially altering cognitive and emotional engagement with communication content

Corporate communication encompasses multiple domains, including:

Leadership communication (executive messaging, vision/strategy dissemination)

Employee communication (change management, engagement, training)

Team collaboration (project coordination, knowledge sharing)

External stakeholder communication (customer interaction, investor relations, public affairs)

The analysis should consider how spatial technologies might differentially impact these domains and examine implications for:

Message fidelity and interpretation

Audience engagement and retention

Credibility and trust

Bandwidth and efficiency

Accessibility and inclusion

Organizational structure and culture

Additional considerations should include:

Technology implementation challenges (cost, infrastructure, adoption barriers)

Measurement approaches for evaluating effectiveness

Privacy and security implications

Comparative advantages relative to existing channels

Integration with broader communication ecosystems

This research should provide both theoretical foundation and actionable insights for communication professionals seeking to understand, evaluate, and potentially implement spatial technologies within corporate communication contexts.

Here’s how Gemini 2.5 explained our piece on “Why You Still Need to Write” to a fifth grader.

Hey there! Imagine you have a super-smart robot that can write stories and emails for you really fast and sometimes even better than you can. That's kind of like generative AI!

The people who wrote this long message are thinking about what happens when we use this robot to write everything. They have some worries, like when Plato, a really old and smart guy, worried about people writing things down instead of remembering them in their heads. He thought people might forget how to actually remember things if they relied too much on writing.

These writers have three main worries about using AI to write everything:

Losing Our Writing Skills: Just like Plato worried about memory, these writers worry that if we always let the robot write for us, we might not get as good at writing ourselves. It's like if you always use a calculator, you might not be as quick at doing math in your head. They're not saying we'll totally forget how to write, but maybe we won't practice as much.

Getting "Slop": Sometimes, if you don't tell the robot exactly what to write, it can make stuff that's not very good, kind of messy, and hard to understand. They call this "slop." Imagine if all the websites you visited had messy and confusing writing – that wouldn't be very helpful!

Fake Feelings: This is a big one! Imagine your friend sent you a birthday card that was written by a robot. It might say happy birthday, but it wouldn't feel as special as if your friend wrote it themselves, right? These writers are worried that if we use robots to write personal things like thank-you notes or letters to our family, they won't feel real or sincere. People will want to know that the feelings in the writing are actually your feelings, not the robot's.

They think that for important things, especially when we want to show we care or be honest, it's still really important for us to do the writing. It's like how you want your own drawing hanging on the fridge, not a drawing a robot made!

So, while these writing robots are super helpful and can do some amazing things, we need to be careful. We still need to practice writing ourselves, make sure what's written is clear, and most importantly, make sure that when we want to share our real thoughts and feelings, we do it ourselves. They even have a funny rule: if it would feel weird for people to know a robot wrote something personal from you, then you should probably write it yourself!

Even with amazing robots that can write, being able to put your own words down with your own feelings is still going to be super important!