Confluence for 6.2.24

Cue the backlash. OpenAI for nonprofits. PwC and OpenAI announce partnership. Research on AI and employee creativity.

Welcome to Confluence. Here’s what has our attention this week at the intersection of generative AI and corporate communication:

Cue The Backlash

OpenAI for Nonprofits

PwC and OpenAI Announce Partnership

Research on AI and Employee Creativity

Cue The Backlash

We’ve entered phase two of The Hype Cycle. But don’t be fooled.

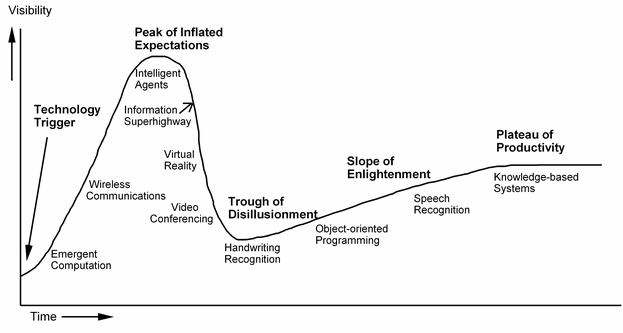

In 1995, Gartner’s Jackie Fenn published a research note titled When to Leap on the Hype Cycle, making the argument that technology adoption follows a predictable pattern. In this pattern a new technology creates inflated expectations and hype, which as an emergent technology it cannot fulfill. This in turn leads to a phase of disappointment and disillusionment as those elevated expectations are violated to the downside. Then, as the technology continues to evolve and create utility, adoption grows over time. It’s a boom-bust-growth pattern, and it’s become an aphorism in the world of technology.

We will note here that there’s another aphorism we should consider, and it’s from the world of statistics, where British statistician George Box wrote in the 1970s that “All models are wrong, but some are useful.” The Hype Cycle is not a law of science or nature. It’s a mental model, and it has its flaws and exceptions, but it’s still useful. Right now it’s particularly useful in thinking about generative AI, because folks, the “trough of disillusionment” is here.

Some of this critique is highly technical and scientific, and not really new. Cognitive scientist and AI researcher Gary Marcus has for years argued that the technical structures of the transformer technology that underlies large language models like ChatGPT, Gemini, and Claude are fundamentally prone to error, unable to create a model of the world, and unable to “think” in any meaningful way. Others argue the opposite, but Marcus’ perspectives and others like them are grounded in expertise and important to understand. Much of the rest of the new critique, though, reads more as cultural criticism than as scientific argument.

The main threads of this critique are, and here we simplify, “The cycle of innovation is slowing down,” “It still can’t do simple math and it still makes things up,” and “These models are unaffordable to run.” One recent example of the form is this piece in the New York Times, “The New ChatGPT Offers a Lesson in A.I. Hype.”

The piece offers a fair critique of OpenAI’s new model, GPT-4o, including that its real-time voice interaction can be glitchy, and that it can hallucinate (invent information) and get math wrong, both of which are long-standing critiques of generative AI (and both of which get to the arguments Gary Marcus makes). But it also offers this statement, which we think is bad advice:

The lesson to learn from all this is that we, as consumers, should resist the hype and take a slow, cautious approach to A.I. We shouldn’t be spending much cash on any underbaked tech until we see proof that the tools work as advertised.

We’re not here to defend OpenAI or any of the other leading generative AI labs, or the technology. The labs have serious moral, ethical, and technical challenges to address, and the technology has plenty of weaknesses. But “take a slow and cautious approach” is not the lesson to learn from this technology. Any of these models, even in their free forms, offer significant utility, today, in ways we are just starting to understand. The research is clear that they make those who use them well better at what they do — and that includes whomever you might consider your professional competition.

Speaking from personal experience, those of us who have adopted them most fully in our firm cannot imagine going back to how we used to work. They have made us smarter, better, and faster. The sooner you can learn what utility they offer you and how you can integrate it into your life and work, the better. Should you know how to use them? Of course. Should you only use them for what they are good for? Naturally. Should there be strong governance in how to apply them at work? Absolutely. But to sit and watch is to leave value on the table in the short term, and to fall behind in the long term — because change, it is a-comin’, and it’s a-comin’ fast. And it is much better to ride a wave than to resist it.

It is interesting to us how people hold this technology to such a different standard than they do others. Perhaps the very frame of “artificial intelligence” evokes oracle-of-Delphi-like expectations for what these tools can do and how they work (which fits the hype cycle nicely). In our conversations around the office we keep coming back to our cars as an example. Someone will say, “ChatGPT is awful at making graphics with accurate text” or “Claude invented a book that doesn’t exist.” Yep. There are things they are bad at, and those are two of them. And my car doesn’t float very well … so I don’t use it as a boat. Someone will say, “It did a bad job of answering my question” or “It wrote a terrible first draft.” Yep. Knowing how to author prompts and use these tools iteratively is critical to getting great output. And I also wouldn’t just give my 13-year-old the keys to my car and expect her to get great outcomes. Training matters in learning to drive, and it matters in generative AI, too. “It doesn’t do a great job of translating language on the fly.” Yep. That’s a new technology. And while we have long been promised flying cars, that promise just has not come to fruition. And some of what generative AI promises may also not come to fruition. But compare your car today to the one of just 10 years ago. Your car may not fly, but it probably has full-surround airbags, cameras, lane warning systems, GPS navigation, and the ability to play almost all the world’s music, streaming from space. Don’t let the pace of today’s innovation set an expectation for the innovation yet to come.

So, for the next while — perhaps months, perhaps years — expect the backlash on generative AI to unfold. But don’t lose sight of the pattern. While the underlying models will evolve and the architecture may change completely, generative artificial intelligence is here to stay. And as a general purpose technology, you can expect its effects to be broad, significant, and unpredictable.

We are reminded of another aphorism from the world of technology (perhaps aphorisms, like many other things, come in threes): it’s Amara’s Law, and it comes from futurist and scientist Roy Amara:

We tend to overestimate the effect of technology in the short run and underestimate the effect in the long run.

We will continue to read the negative hype articles on generative AI with curiosity, but in our view the technology offers incredible daily utility: IF you use it for what it is good for and IF you take the time to learn how to use it. And it will continue to gain capabilities, IF you wait for them. But don’t dismiss this technology, because it will likely change much in the world.

If we want a final analogue, let’s look to the dot-com bust of the early 2000s. The anti-hype cycle then was severe, in popular culture and in people’s wallets, as the promise of so many nascent web-based companies went up in flames. But consider where we are with internet-enabled technology 20 years later: the end of taxi service and the rise of Uber. Amazon bringing a world of products to your door. The disaggregation of information brokerage of all kinds, from real estate to stock prices and trading, to the encyclopedia itself. The transformation of TV and video entertainment. The total restructuring of the music business. And, we will note, the transformation of media and the newspaper industry. These are just a few examples of what general purpose technologies do to markets. Generative AI is one such technology, so don’t believe the negative hype.

OpenAI for Nonprofits

ChatGPT Team and ChatGPT Enterprise are now available at discounted rates for many nonprofit organizations.

On Thursday, OpenAI introduced OpenAI for Nonprofits and shared the following details about the program:

With OpenAI for Nonprofits, nonprofit organizations can now access ChatGPT Team at a discounted rate of $20 per month per user. Larger nonprofits ready for large-scale deployment can contact our sales team to access a 50% discount on ChatGPT Enterprise. These offerings enable access to our most advanced models like GPT-4o, advanced tools and custom GPTs, a dedicated collaborative workspace, admin tools for team management, and robust privacy and security standards.

We wrote about ChatGPT Team in January when it was first introduced, and we have been using it with a growing number of pilot users in our firm since then. The standard rate for ChatGPT Team is $25 per month per user, so this represents a 20% discount for nonprofits. One thing to note from the FAQs — which the introduction post does not mention — is the types of nonprofits that are (and are not) eligible for these rates:

What types of nonprofits are eligible for a nonprofit discount?

Nonprofit discounts are available to eligible nonprofits based anywhere in the world. At this time, academic, medical, religious, or governmental institutions are not eligible for OpenAI for Nonprofits.

So, be aware of those criteria before contacting OpenAI.

In the FAQs, OpenAI shares three examples of how ChatGPT can assist nonprofits: refining grant proposals, summarizing a research paper and distilling key takeaways for colleagues unfamiliar with the topic, and conducting a sentiment analysis on a social media post. Those are three very good use cases, and each (done well) would be worth well more than the $20 cost of a monthly subscription. We asked GPT-4o to come up with 20 more ideas, with a focus on communication:

User: I'm the Executive Director of a nonprofit organization that provides transitional housing for homeless women, children, and families. We have 10 full-time staff, with the majority focused on operations and keeping our facilities running. Our budget is always extremely tight, and we decided that we cannot afford a full-time communication and marketing team member. How could I and other members of my leadership team use ChatGPT to help us with communications and marketing? Our primary goals are to 1) raise awareness in our community and with politicians and stakeholders about the important work we do, 2) deepen and expand our relationships with donors to grow our funding and support. Please give me 20 ideas for how you could help.

GPT-4o: Certainly, here are 20 ideas for how ChatGPT can help you and your leadership team with communications and marketing to achieve your goals:

Draft Press Releases: Create compelling press releases for your events, success stories, and major milestones to distribute to local media.

Social Media Content: Generate engaging posts for various social media platforms (Facebook, Twitter, Instagram, LinkedIn) to raise awareness and keep your audience informed and engaged.

Email Newsletters: Write monthly or bi-weekly newsletters to update donors, stakeholders, and community members on your organization's progress and needs.

Grant Proposals: Assist in drafting grant proposals by providing templates and tailoring content to match specific grant requirements.

Donor Thank You Letters: Compose personalized thank-you letters to donors, highlighting the impact of their contributions.

Fundraising Campaigns: Develop the messaging and content for fundraising campaigns, including emails, social media posts, and landing page copy.

Event Invitations: Create appealing invitations and promotional materials for fundraising and awareness events.

Blog Posts: Write blog posts about the success stories, challenges, and needs of the individuals and families you serve.

Website Content: Help refresh and update your website content to better reflect your mission, services, and impact.

Advocacy Letters: Draft letters to politicians and stakeholders advocating for policies and support that benefit your organization and those you serve.

Brochures and Flyers: Design and write content for informational brochures and flyers to distribute at community events and meetings.

Annual Reports: Assist in creating annual reports that highlight your accomplishments, financials, and future goals.

Testimonial Collection: Develop a structured approach for collecting and presenting testimonials from the people you serve.

Crisis Communication: Prepare templates and draft communications for crisis situations to maintain transparency and trust with your audience.

Video Scripts: Write scripts for short videos showcasing your work, success stories, and appeals for support.

Volunteer Recruitment Materials: Create compelling content to attract and retain volunteers, emphasizing the impact of their work.

SEO Optimization: Provide guidance on how to optimize your website content for search engines to increase organic traffic.

Community Outreach Emails: Draft emails to local businesses, schools, and community groups to form partnerships and increase local support.

Educational Content: Create informative content that educates the public about homelessness and the importance of transitional housing.

Impact Stories: Write detailed impact stories that illustrate how your organization is making a difference, which can be shared across various platforms to inspire support.

That’s just scratching the surface. For nonprofit leaders who have been on the fence about whether to invest in generative AI tools, OpenAI’s new discounted rates might be another reason to start exploring more seriously.

PwC and OpenAI Announce Partnership

100,000 PwC employees will soon have access to ChatGPT Enterprise. And OpenAI finds its first reseller.

This week, PwC and OpenAI announced a partnership that will provide access to ChatGPT Enterprise for over 100,000 PwC employees across the U.S. and U.K. while also making PwC the first reseller of ChatGPT Enterprise. By bringing ChatGPT Enterprise to such a large number of employees, PwC hopes to gain a deeper understanding of generative AI technology and its potential applications, so they can better advise their clients.

Our takeaway — such agreements are going to be more and more common as OpenAI and others push for organizations to bring generative AI to as many of their employees as possible. And as more companies bring this technology inside, competitive pressures will lead to even more doing the same. We’ve expected this, and the agreement between PwC and OpenAI is another signal that soon most employees in most organizations will be expected to work with AI in their day-to-day.

For communication professionals, our advice remains the same. First, work with IT and other partners in your organizations to create a set of principles to govern the use of generative AI inside your organization if you haven’t already. And second, spend time working with the technology, encourage your teams to do the same, and start developing a perspective on how generative AI fits into what you do now rather than waiting for someone inside your organization to ask the question.

Research on AI and Employee Creativity

A reminder that AI will affect how we need to think about talent and skill development.

We recently came across a study, “When and How Artificial Intelligence Augments Employee Creativity,” in which researchers examined the effects of AI on employee creativity in a telemarketing company.[1] The company in the study implemented an AI system to assist with generating sales leads, and the researchers examined the effect this had on how sales agents engaged with potential customers once on the phone.

The top-line finding is more or less what we’d expect: because the AI relieved agents of the mental burden associated with screening potential calls, such as evaluating caller interest and dealing with common technical issues, they could allocate more energy to the actual customer interaction and thus be more creative. But while all agents demonstrated increased creativity when interacting with customers, top performers saw greater improvement. Follow-up interviews shed light on why.

In conversations with agents after the study, researchers learned that lower-skilled agents felt somewhat exposed by the AI — as a larger portion of their work became more challenging, they felt an increased pressure to perform. At the same time, they also recognized the value in observing higher-skilled agents devise more creative solutions, as it provided them with a broader range of ideas to incorporate into their own practice.

On the other hand, higher-skilled agents believed that the increased exposure to complex customers gave them more opportunities to refine their approach and improve their skills. They could accelerate their growth and development by engaging with a higher volume of challenging interactions.

Though communication teams don’t typically have as sequential a workflow as telemarketers, this research does highlight the importance of developing higher-order skills in teams, as they will become more important, not less, as we integrate AI into what we do. As much as we pay attention to the technology and what it’s capable of doing for us, we need to spend as much time, if not more, on understanding the implications of AI for how we think about talent and skill development within communication teams.

We’ll leave you with something cool: Google has a new tool, Illuminate, that will generate audio interviews based on research papers to explain their findings.

AI Disclosure: We used generative AI in creating imagery for this post. We also used it selectively as a creator and summarizer of content and as an editor and proofreader.

[1] Here is our Digest GPT’s summary of the article.

Title: When and How Artificial Intelligence Augments Employee Creativity

Authors: Nan Jia, Xueming Luo, Zheng Fang, Chengcheng Liao

This paper investigates whether and how artificial intelligence (AI) can enhance employee creativity in organizations by altering job design through a sequential division of labor. The authors draw on theories of AI-human collaboration, job design, and employee creativity, employing both a field experiment and semi-structured interviews to explore their hypotheses.

Key Findings:

Field Experiment:

Conducted at a telemarketing company where AI assisted in generating sales leads, allowing human employees to focus on higher-level problem-solving during sales persuasion.

Results showed that AI assistance increased employee creativity, particularly for higher-skilled employees. These employees were more successful in solving untrained customer questions, which led to higher sales rates.

AI-assisted employees were 2.33 times more likely to answer untrained questions creatively than those without AI assistance, with top agents showing a 2.81 times greater improvement than bottom agents.

Semi-Structured Interviews:

Interviews with 28 sales agents corroborated the experimental findings. Agents indicated that AI assistance changed job design by reducing the burden of repetitive tasks and increasing engagement with serious customers.

Higher-skilled employees benefited more, reporting improved innovative script generation and positive psychological outcomes, such as better mood and higher morale.

Lower-skilled employees experienced limited improvements and negative emotions, such as increased stress and a sense of defeat.

Skill Bias in AI-Augmented Creativity:

The benefits of AI assistance were found to be skill-biased, favoring employees with higher job skills. This skill bias aligns with economic theories of skill-biased technological change, suggesting that AI technologies enhance the productivity of skilled labor more than unskilled labor.

Implications for Organizations:

The study suggests that AI can effectively augment human creativity if tasks are divided such that AI handles repetitive, well-codified tasks while humans address higher-level, unstructured problems.

Organizations should focus on training and developing employees' skills to maximize the benefits of AI assistance.

Conclusion: The paper concludes that AI-human collaboration, when designed effectively, can enhance employee creativity and job performance, particularly for skilled employees. This augmentation is achieved through changes in job design that conserve cognitive resources and increase task complexity, thereby fostering a more creative work environment.

I've been using ChatGPT4 less and less to generate content and more as an active recall tool. I read something, upload my notes/highlights and then ask it to quiz me and provide me feedback on my written responses.